In the world of modern software development and operations, Linux stands as the undisputed foundation. From the smallest startup deploying a single web application to the largest enterprise orchestrating thousands of microservices, the power, flexibility, and open-source nature of Linux make it the operating system of choice. For any aspiring or practicing DevOps professional, a deep, practical understanding of Linux isn’t just a desirable skill—it’s an absolute necessity. It is the canvas upon which the entire DevOps toolchain is painted.

This comprehensive guide will take you on a journey from the command line to the cloud. We will explore how core Linux principles underpin DevOps practices, starting with mastering the terminal and shell scripting. We’ll then scale up with automation tools like Ansible, dive into containerization with Docker, and finally learn how to manage it all at scale with Kubernetes. Whether you’re following an Ubuntu tutorial or managing a fleet of Red Hat Enterprise Linux servers, these concepts apply across distributions and are essential for building robust, scalable, and efficient systems.

The Bedrock: Mastering the Linux Terminal and Bash Scripting

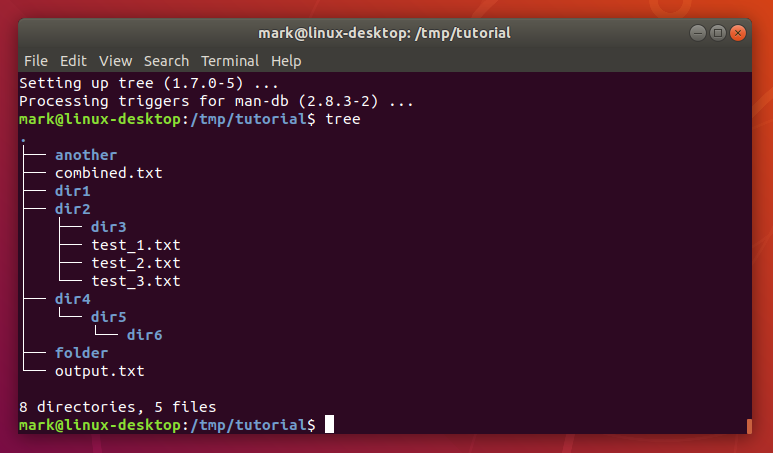

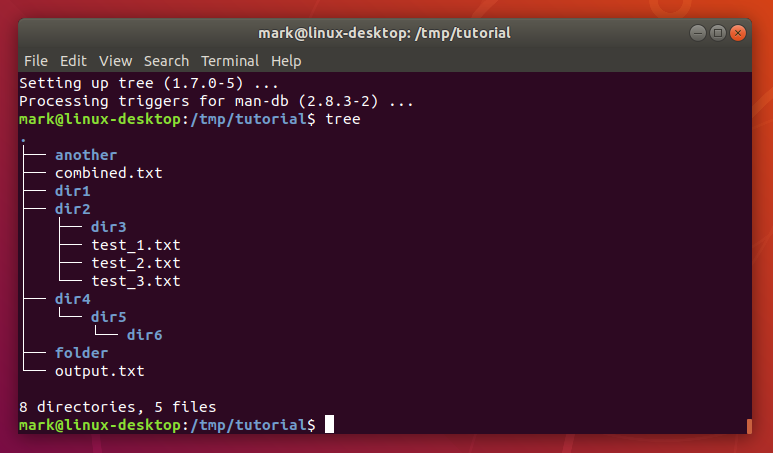

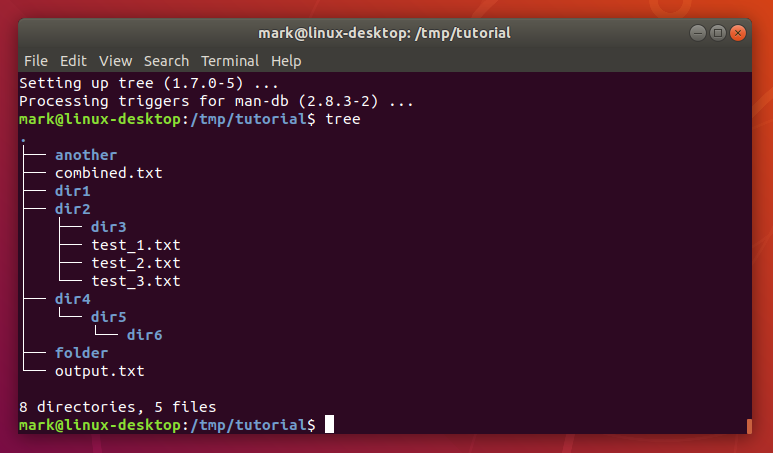

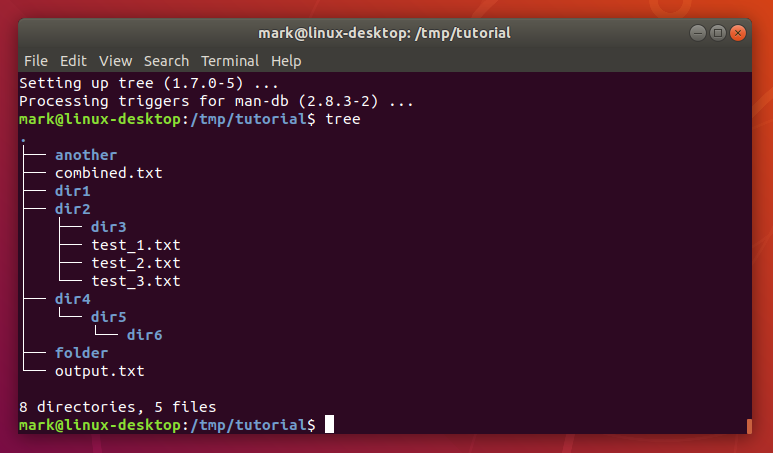

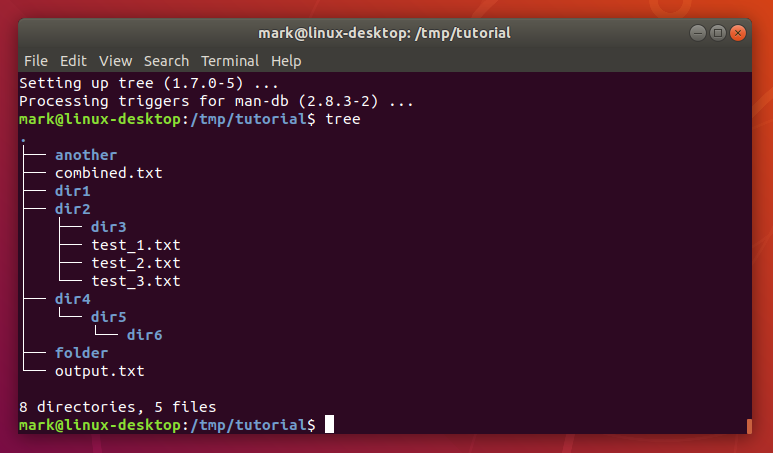

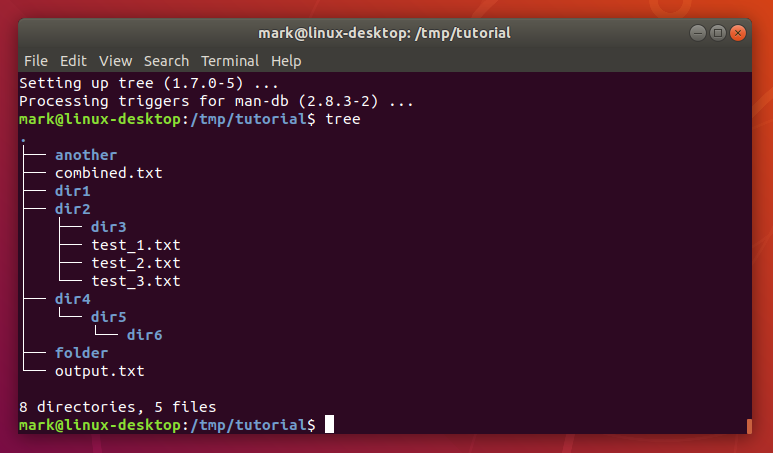

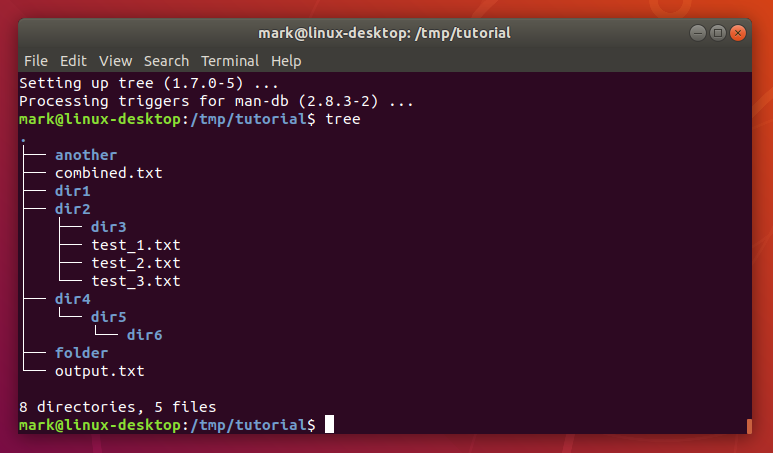

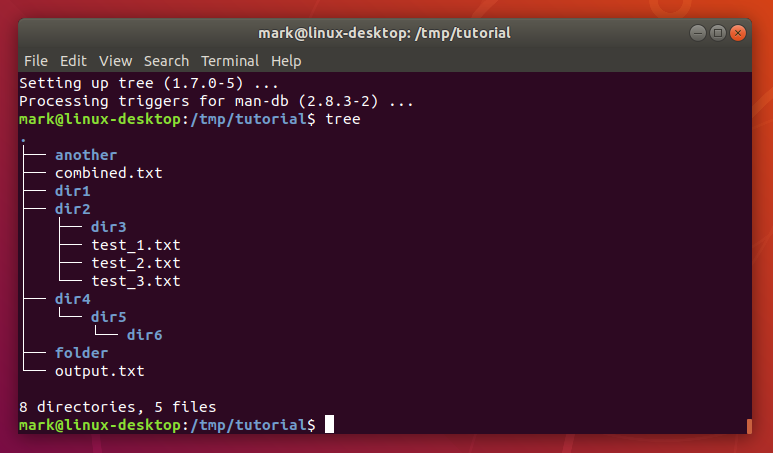

Before diving into complex automation and orchestration, every DevOps journey begins at the Linux terminal. The command-line interface (CLI) is the most direct and efficient way to interact with a Linux server. It provides unfiltered access to the system, enables precise control, and forms the basis for all automation.

Essential Linux Commands for DevOps

Beyond basic navigation, a DevOps professional’s toolkit must include commands for text manipulation, process inspection, and network diagnostics. Tools like grep, sed, and awk are indispensable for parsing logs and configuration files. For system monitoring, commands like ps, top, and the more user-friendly htop provide real-time insight into system performance. Network utilities such as curl, wget, ss, and the older netstat are crucial for troubleshooting connectivity and interacting with APIs, a common task in microservice architectures.
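As an illustration of the log parsing these tools handle, a pipeline such as `awk '{print $9}' access.log | sort | uniq -c` counts HTTP status codes in a web server access log. The Python sketch below does the same thing; it assumes the common/combined log layout used by Nginx and Apache, where the status code is the ninth whitespace-separated field, and the sample lines are made up.

```python
from collections import Counter
from typing import Iterable


def count_status_codes(lines: Iterable[str]) -> Counter:
    """Count HTTP status codes, roughly like: awk '{print $9}' | sort | uniq -c.

    Assumes the common/combined access-log layout, where the status code is
    the 9th whitespace-separated field (index 8). Malformed lines are skipped.
    """
    counts: Counter = Counter()
    for line in lines:
        fields = line.split()
        if len(fields) > 8 and fields[8].isdigit():
            counts[fields[8]] += 1
    return counts


if __name__ == "__main__":
    # Made-up sample lines in combined log format
    sample = [
        '10.0.0.1 - - [10/Oct/2024:13:55:36 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/8.0"',
        '10.0.0.2 - - [10/Oct/2024:13:55:37 +0000] "GET /missing HTTP/1.1" 404 153 "-" "curl/8.0"',
        '10.0.0.1 - - [10/Oct/2024:13:55:38 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/8.0"',
    ]
    # Print "count code" pairs, most frequent first, like uniq -c output
    for code, n in count_status_codes(sample).most_common():
        print(n, code)
```

In practice you would pass an open file object (for example `open("/var/log/nginx/access.log")`) instead of the sample list, since the function accepts any iterable of lines.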

Practical Bash Scripting for Automation

The true power of the command line is unlocked through shell scripting. Bash scripting lets you chain commands together and use variables, loops, and conditional logic to automate repetitive tasks. This is the first rung on the Linux automation ladder. A simple script can check the health of a web service, perform a daily backup, or clean up old log files. For example, here is a simple Bash script that checks whether a web server is responding correctly and logs the status.

```bash
#!/bin/bash
# A simple script to monitor a website's health

# Configuration (replace SITE_URL with your own endpoint)
SITE_URL="http://your-website.com"
LOG_FILE="/var/log/website_health.log"   # writing under /var/log usually requires root
TIMESTAMP=$(date +"%Y-%m-%d %T")

# Use curl to fetch only the HTTP status code; curl prints "000" if no response arrives
HTTP_STATUS=$(curl -o /dev/null -s -w "%{http_code}" --max-time 10 "${SITE_URL}")

# Check the status and log the result
if [ "$HTTP_STATUS" -eq 200 ]; then
    echo "[$TIMESTAMP] SUCCESS: Site ${SITE_URL} is up. Status code: ${HTTP_STATUS}" >> "${LOG_FILE}"
else
    echo "[$TIMESTAMP] ERROR: Site ${SITE_URL} is down or returning an error. Status code: ${HTTP_STATUS}" >> "${LOG_FILE}"
    # Optional: Add logic here to send an alert (e.g., email)
fi

exit 0
```

This script demonstrates the core principles of automation: defining a task, executing it programmatically, and making decisions based on the outcome. Run from cron or a systemd timer, it becomes a lightweight, always-on health check. This is a fundamental skill in Linux administration and DevOps.
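The same pattern covers another task mentioned above, cleaning up old log files. In Bash this is often a one-liner along the lines of `find /var/log/myapp -name '*.log' -mtime +7 -delete`; the Python sketch below does roughly the same by comparing each file’s modification time with a cutoff. The directory `/var/log/myapp`, the `*.log` pattern, and the seven-day retention are illustrative assumptions, and `dry_run` defaults to `True` so nothing is deleted until you opt in.

```python
import time
from pathlib import Path


def find_old_logs(directory: str, pattern: str = "*.log", max_age_days: float = 7.0) -> list[Path]:
    """Return files in `directory` matching `pattern` last modified more than max_age_days ago."""
    cutoff = time.time() - max_age_days * 86400  # 86400 seconds per day
    return sorted(
        p for p in Path(directory).glob(pattern)
        if p.is_file() and p.stat().st_mtime < cutoff
    )


def clean_old_logs(directory: str, pattern: str = "*.log", max_age_days: float = 7.0,
                   dry_run: bool = True) -> list[Path]:
    """Delete (or, in dry-run mode, only report) log files older than max_age_days."""
    old_files = find_old_logs(directory, pattern, max_age_days)
    for path in old_files:
        if dry_run:
            print(f"Would delete {path}")
        else:
            path.unlink()
            print(f"Deleted {path}")
    return old_files


if __name__ == "__main__":
    # Hypothetical application log directory; a missing directory simply matches nothing
    clean_old_logs("/var/log/myapp", max_age_days=7, dry_run=True)
```

Keeping the dry-run mode as the default is a deliberate safety choice: you can schedule the script, review its output for a few days, and only then switch to `dry_run=False`.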

Scaling Operations: Configuration Management and Automation

As infrastructure grows from one server to hundreds, manual management becomes impossible. This is where the DevOps philosophy of “pets vs. cattle” comes into play. Servers should be treated as disposable, identical units (cattle), not as unique, hand-reared systems (pets). This requires robust automation and configuration management tools to ensure consistency and repeatability.


Introduction to Ansible

Ansible is a leading configuration management tool that embodies this philosophy. Its key advantages are its agentless architecture (it connects to managed hosts over standard SSH, so no agent daemon has to be installed on them) and its use of YAML, a simple, human-readable language. With Ansible, you declare the desired state of your systems in “playbooks,” and Ansible brings every targeted server into that state. This is a cornerstone of modern Linux DevOps, letting you manage fleets of servers running distributions such as CentOS, Debian, or Ubuntu from a single control node.

A Practical Ansible Playbook

An Ansible playbook is a YAML file that contains a set of “plays,” each targeting a group of hosts and running a series of “tasks.” Tasks call Ansible modules to perform actions, such as installing a package, copying a file, or starting a service. The following playbook automates the installation and configuration of an Nginx web server.

```yaml
---
- name: Configure Nginx Web Server
  hosts: webservers
  become: yes  # Execute tasks with sudo privileges

  tasks:
    - name: Install Nginx on Debian family
      ansible.builtin.apt:
        name: nginx
        state: present
        update_cache: yes
      when: ansible_os_family == "Debian"

    - name: Install Nginx on Red Hat family
      ansible.builtin.yum:
        name: nginx
        state: present
      when: ansible_os_family == "RedHat"

    - name: Copy Nginx configuration file
      ansible.builtin.template:
        src: ./nginx.conf.j2  # A Jinja2 template file next to the playbook
        dest: /etc/nginx/sites-available/default  # Debian/Ubuntu layout; Red Hat-family hosts use /etc/nginx/conf.d/
      when: ansible_os_family == "Debian"
      notify: Reload Nginx  # Apply config changes to an already-running server

    - name: Ensure Nginx is running and enabled on boot
      ansible.builtin.service:
        name: nginx
        state: started
        enabled: yes

  handlers:
    - name: Reload Nginx
      ansible.builtin.service:
        name: nginx
        state: reloaded
```

This playbook is idempotent, meaning it can be run multiple times without causing unintended side effects. If Nginx is already installed, configured, and running, Ansible reports those tasks as `ok` rather than `changed`, and the reload handler runs only when the configuration file actually changes. This predictability is vital for reliable Linux automation.
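Idempotence is easy to see in miniature. The function below is a hypothetical, much-simplified analogue of what a module such as `ansible.builtin.lineinfile` does: it inspects the current state first, changes the file only when the desired line is missing, and reports whether anything changed, so a second run is a no-op. It illustrates the principle only and is not Ansible’s implementation; the `sysctl.conf` file name is just an example.

```python
from pathlib import Path


def ensure_line(path: str, line: str) -> bool:
    """Ensure `line` is present in the file at `path`.

    Returns True if the file was changed, False if it already matched the
    desired state (an idempotent, Ansible-style "changed" flag).
    """
    file = Path(path)
    existing = file.read_text().splitlines() if file.exists() else []
    if line in existing:
        return False  # Desired state already reached: do nothing
    existing.append(line)
    file.write_text("\n".join(existing) + "\n")
    return True


if __name__ == "__main__":
    import tempfile

    with tempfile.TemporaryDirectory() as tmp:
        conf = f"{tmp}/sysctl.conf"
        print(ensure_line(conf, "net.ipv4.ip_forward = 1"))  # True: line added
        print(ensure_line(conf, "net.ipv4.ip_forward = 1"))  # False: already present
```

The key design choice is "check, then act": the function never appends blindly, which is exactly what makes repeated runs safe.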

The Modern Paradigm: Containers and Orchestration on Linux

While configuration management automates the setup of servers, containerization revolutionizes how we package and run applications. Containers provide lightweight, isolated environments that bundle an application with all its dependencies, ensuring it runs consistently everywhere.

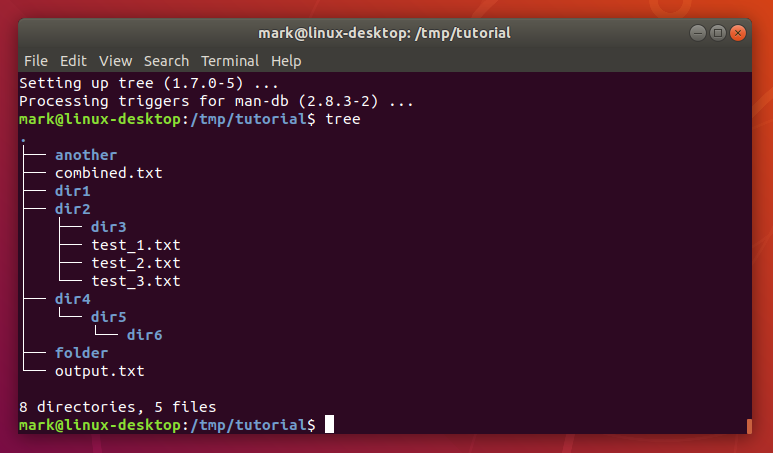

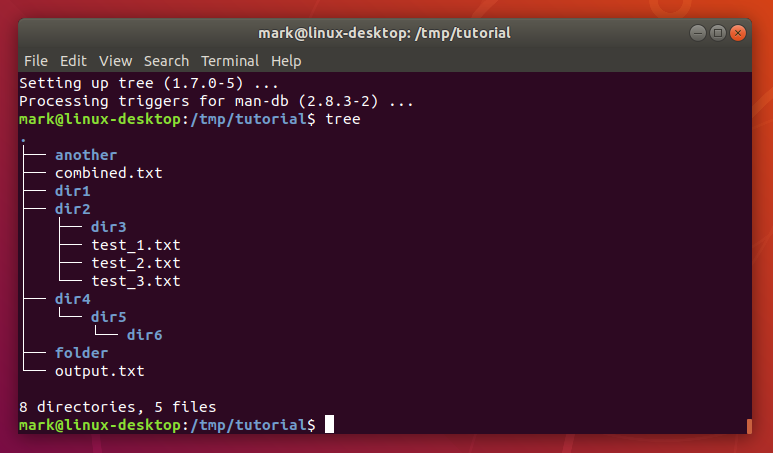

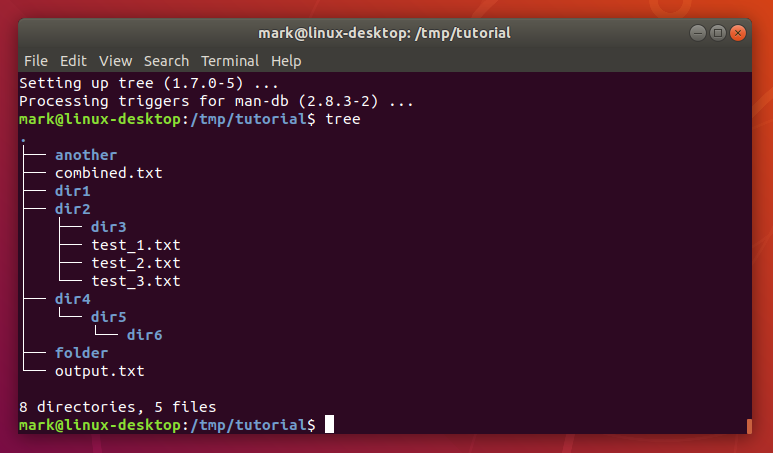

From VMs to Containers: The Docker Revolution

Docker has become synonymous with containerization. Unlike virtual machines, each of which runs a full guest operating system on virtualized hardware, Docker containers share the host system’s Linux kernel. They rely on kernel features like cgroups (for resource limiting) and namespaces (for isolation) to create sandboxed environments. Because there is no guest OS to boot, containers start far faster than VMs and use significantly fewer resources.
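Both kernel features are visible from user space through `/proc`. On Linux, a process’s namespace memberships appear as symbolic links under `/proc/<pid>/ns/`, and its cgroup membership is listed in `/proc/<pid>/cgroup` as `hierarchy-ID:controller-list:cgroup-path` lines (typically a single `0::/path` line on cgroup v2 systems). The sketch below parses that format and, when run on a Linux host, prints the current process’s namespaces; running it inside and outside a container should show several differing namespace IDs. The sample strings in the usage are illustrative.

```python
import os


def parse_cgroup(text: str) -> list[tuple[str, list[str], str]]:
    """Parse /proc/<pid>/cgroup content into (hierarchy_id, controllers, path) tuples.

    Each line has the form "hierarchy-ID:controller-list:cgroup-path";
    on cgroup v2 the line is "0::/some/path" with an empty controller list.
    """
    entries = []
    for line in text.strip().splitlines():
        hierarchy_id, controllers, path = line.split(":", 2)
        entries.append((hierarchy_id, [c for c in controllers.split(",") if c], path))
    return entries


def list_namespaces(pid: str = "self") -> dict[str, str]:
    """Map namespace type to its link target, e.g. {"pid": "pid:[4026531836]"} (Linux only)."""
    ns_dir = f"/proc/{pid}/ns"
    return {name: os.readlink(os.path.join(ns_dir, name)) for name in sorted(os.listdir(ns_dir))}


if __name__ == "__main__":
    try:
        for kind, ident in list_namespaces().items():
            print(f"{kind:20} {ident}")
        with open("/proc/self/cgroup") as f:
            print(parse_cgroup(f.read()))
    except (OSError, ValueError):
        print("Could not read /proc; this sketch needs a Linux host.")
```

For example, `parse_cgroup("0::/user.slice/session-1.scope")` returns `[("0", [], "/user.slice/session-1.scope")]`, the cgroup v2 form.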

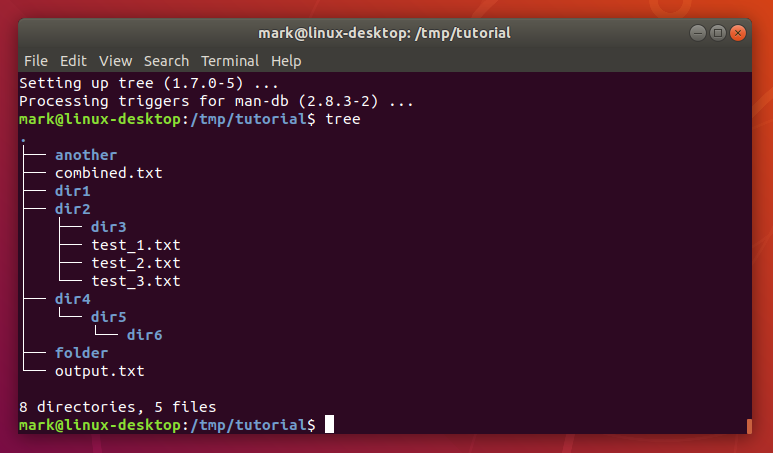

Building Your First Docker Image

A Docker image is a blueprint for a container, defined in a file called a `Dockerfile`. This file contains a series of instructions to build the image layer by layer. Here’s a Dockerfile for a simple Python Flask application, a common task for Python DevOps professionals.


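```dockerfile
# Use an official Python runtime as a parent image
FROM python:3.9-slim

# Set the working directory in the container
WORKDIR /app

# Copy only the dependency list first so the install layer stays cached
# until requirements.txt changes
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

# Copy the rest of the application code into /app
COPY . .

# Document that the app listens on port 5000
# (EXPOSE does not publish it; use `docker run -p 5000:5000` for that)
EXPOSE 5000

# Define an environment variable
ENV NAME=World

# Run app.py when the container launches
CMD ["python", "app.py"]
```

This simple file encapsulates the entire application environment, making it portable and reproducible on any compatible machine running Docker, from a developer’s laptop to a production server on AWS or Azure. Build and tag it with `docker build -t your-username/python-flask-app .`. If `app.py` starts Flask’s built-in development server, make sure it listens on `0.0.0.0` (for example, `app.run(host="0.0.0.0", port=5000)`) rather than Flask’s default of `127.0.0.1`; otherwise the container’s port will not be reachable from outside.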
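Instruction order matters because of Docker’s build cache: a layer can be reused only if its own instruction and inputs are unchanged and so is every layer before it. The toy model below is a deliberate simplification, not Docker’s actual cache implementation: it chains a hash over each instruction plus the contents of the files it copies, then counts how many layers must be rebuilt after editing only `app.py`. The two instruction lists and the file contents are hypothetical.

```python
import hashlib


def layer_keys(instructions: list[tuple[str, list[str]]], files: dict[str, str]) -> list[str]:
    """Return a cache key per layer in a simplified model of Docker's build cache.

    Each key hashes the previous layer's key, the instruction text, and the
    contents of the files that instruction copies ("." stands for all files).
    """
    keys, parent = [], ""
    for instruction, inputs in instructions:
        h = hashlib.sha256((parent + instruction).encode())
        names = sorted(files) if inputs == ["."] else inputs
        for name in names:
            h.update(name.encode() + b"\0" + files[name].encode())
        parent = h.hexdigest()
        keys.append(parent)
    return keys


def rebuilt_layers(instructions, old_files, new_files) -> int:
    """Count layers whose cache key changed between two versions of the build context."""
    old, new = layer_keys(instructions, old_files), layer_keys(instructions, new_files)
    return sum(1 for a, b in zip(old, new) if a != b)


COPY_ALL_FIRST = [
    ("FROM python:3.9-slim", []),
    ("COPY . /app", ["."]),
    ("RUN pip install --no-cache-dir -r requirements.txt", []),
]
DEPS_FIRST = [
    ("FROM python:3.9-slim", []),
    ("COPY requirements.txt .", ["requirements.txt"]),
    ("RUN pip install --no-cache-dir -r requirements.txt", []),
    ("COPY . .", ["."]),
]

if __name__ == "__main__":
    before = {"requirements.txt": "flask\n", "app.py": "print('v1')\n"}
    after = {"requirements.txt": "flask\n", "app.py": "print('v2')\n"}  # only app code changed
    print("copy-all-first:", rebuilt_layers(COPY_ALL_FIRST, before, after), "layers rebuilt")
    print("deps-first:    ", rebuilt_layers(DEPS_FIRST, before, after), "layers rebuilt")
```

In this model, copying everything first forces the `pip install` layer to rebuild on every code edit (2 layers), while copying `requirements.txt` alone first rebuilds only the final `COPY` layer (1 layer).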

Managing Containers at Scale with Kubernetes

Running a single container is easy, but managing hundreds or thousands across a cluster of machines is a complex challenge. This is where Kubernetes comes in. Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. It handles service discovery, load balancing, self-healing, and rolling updates. A core concept in Kubernetes is the declarative manifest, such as this example for deploying our Python application.

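```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: python-app-deployment
spec:
  replicas: 3  # Run 3 instances of our application
  selector:
    matchLabels:
      app: python-app
  template:
    metadata:
      labels:
        app: python-app  # Must match spec.selector.matchLabels
    spec:
      containers:
        - name: python-app-container
          image: your-username/python-flask-app:latest  # The image we built; pin a specific tag in production
          ports:
            - containerPort: 5000
```

This manifest tells Kubernetes we want three replicas of our application running at all times. Apply it with `kubectl apply -f deployment.yaml` (the file name is up to you). If a pod crashes or its node goes down, Kubernetes automatically starts a replacement, providing the resilience modern applications require. Exposing the pods to other services or to users additionally requires a Service object, which this example omits.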
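The self-healing behaviour comes from a control loop: a controller repeatedly compares the desired state in the manifest (here, `replicas: 3`) with what is actually running and acts to close the gap. The sketch below models one pass of such a loop in plain Python; the `reconcile` function, the pod-naming scheme, and the in-memory list of pods are hypothetical stand-ins and do not use the Kubernetes API.

```python
import itertools

_pod_ids = itertools.count(1)  # source of unique suffixes for new pod names


def reconcile(desired_replicas: int, running_pods: list[str],
              app: str = "python-app") -> tuple[list[str], list[str]]:
    """One pass of a toy ReplicaSet-style control loop.

    Returns (pods_to_create, pods_to_delete) so that the number of running
    pods converges on desired_replicas.
    """
    diff = desired_replicas - len(running_pods)
    if diff > 0:
        return [f"{app}-{next(_pod_ids)}" for _ in range(diff)], []
    if diff < 0:
        # Remove the surplus; a real controller ranks which pods to delete
        return [], running_pods[:-diff]
    return [], []  # Actual state already matches desired state


if __name__ == "__main__":
    pods: list[str] = []
    create, delete = reconcile(3, pods)
    pods = pods + create
    print("after scale-up:", pods)
    pods.remove(pods[0])  # simulate a crashed pod
    create, delete = reconcile(3, pods)
    pods = [p for p in pods if p not in delete] + create
    print("after self-healing:", pods)
```

Because each pass only looks at the difference between desired and actual state, the same loop handles scale-up, scale-down, and crash recovery without special cases.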

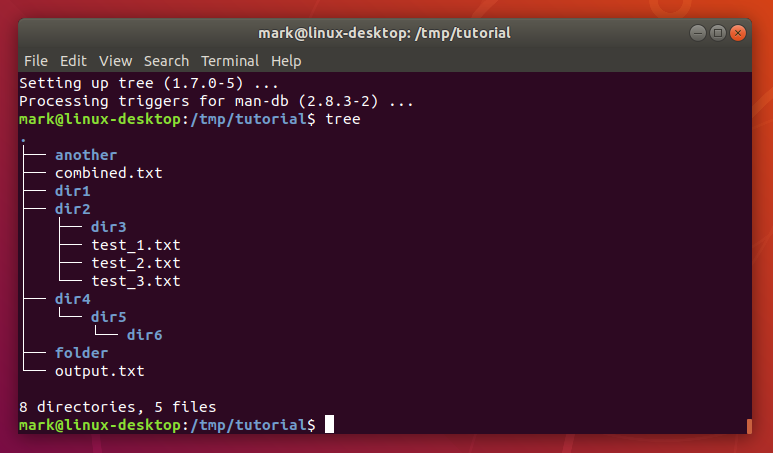

Ensuring Stability and Security: Monitoring and Hardening

Deploying applications is only half the battle. A crucial part of the DevOps lifecycle is keeping systems stable, performant, and secure, which requires continuous performance monitoring and proactive security hardening.


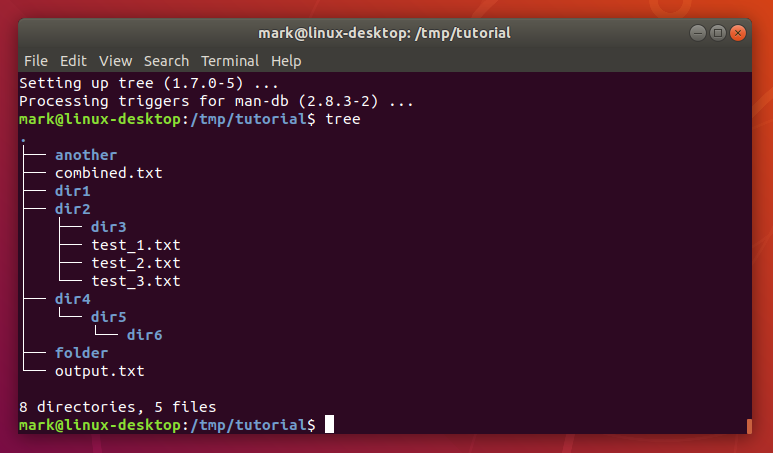

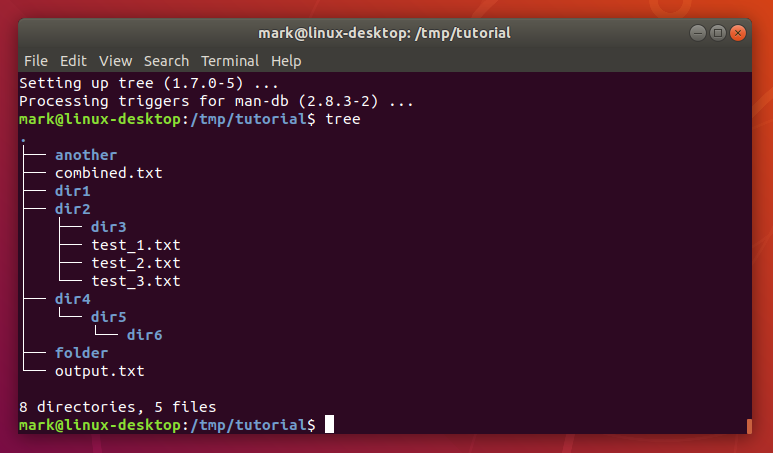

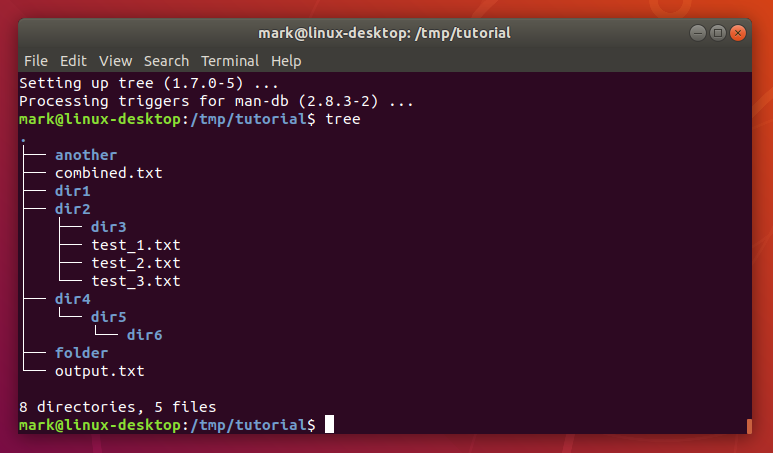

Linux Performance Monitoring

System monitoring on Linux ranges from simple command-line utilities like top and htop to full observability stacks like Prometheus and Grafana. The goal is to collect metrics on CPU, memory, disk I/O, and network usage so you can identify bottlenecks and spot worrying trends before they affect users. For more specialized analysis, Python scripts can parse logs or collect custom metrics.
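As a small example of collecting custom metrics with Python, the `/proc/loadavg` and `/proc/meminfo` files that tools like `top` draw on can be parsed directly. The parsing functions below take file contents as a string so they can be tested anywhere; the memory calculation assumes a kernel that reports `MemAvailable` (Linux 3.14 or later), and the sample values in the usage are made up.

```python
def parse_loadavg(text: str) -> tuple[float, float, float]:
    """Return the 1-, 5- and 15-minute load averages from /proc/loadavg content."""
    one, five, fifteen = text.split()[:3]
    return float(one), float(five), float(fifteen)


def parse_meminfo(text: str) -> dict[str, int]:
    """Return /proc/meminfo fields as a dict of integers (kB for most fields)."""
    info = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        if rest.strip():
            info[key] = int(rest.split()[0])  # first token is the number; "kB" unit dropped
    return info


def memory_used_percent(meminfo: dict[str, int]) -> float:
    """Percentage of memory not available to new workloads (based on MemAvailable)."""
    return 100.0 * (1 - meminfo["MemAvailable"] / meminfo["MemTotal"])


if __name__ == "__main__":
    try:
        with open("/proc/loadavg") as f:
            print("load averages:", parse_loadavg(f.read()))
        with open("/proc/meminfo") as f:
            print(f"memory used: {memory_used_percent(parse_meminfo(f.read())):.1f}%")
    except (OSError, KeyError, ValueError):
        print("This sketch needs a Linux /proc filesystem that reports MemAvailable.")
```

For instance, with `MemTotal` of 8000000 kB and `MemAvailable` of 2000000 kB, `memory_used_percent` returns `75.0`. Values like these could then be exported to a system such as Prometheus or compared against a threshold.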

Essential Linux Security Practices

Securing a Linux server requires a multi-layered approach. Key practices include:

- User and Permissions Management: Adhere to the principle of least privilege. Run services under dedicated, unprivileged user accounts and enforce strict file permissions.

- Firewall Configuration: Use tools like `iptables` or `ufw` to build a Linux firewall that allows only necessary traffic.

- Mandatory Access Control: Leverage systems like SELinux (on Red Hat/CentOS) or AppArmor (on Debian/Ubuntu) to enforce fine-grained security policies.

- Regular Updates: Keep the system and all software patched to protect against known vulnerabilities.
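File permissions are a natural candidate for automated checks. The sketch below walks a directory tree and flags regular files that are world-writable (the `o+w` bit), one common sign that least privilege is not being enforced. Scanning `/etc` by default is an illustrative choice; symlinks are not followed, and unreadable entries are skipped.

```python
import os
import stat


def find_world_writable(root: str) -> list[str]:
    """Return regular files under `root` whose permission bits include o+w."""
    flagged = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                st = os.lstat(path)  # lstat: inspect the link itself, do not follow symlinks
            except OSError:
                continue  # file vanished or is unreadable; skip it
            if stat.S_ISREG(st.st_mode) and st.st_mode & stat.S_IWOTH:
                flagged.append(path)
    return sorted(flagged)


if __name__ == "__main__":
    # Illustrative target directory; point this at whatever tree you want to audit
    for path in find_world_writable("/etc"):
        print(f"World-writable: {path}")
```

Any file this reports can usually be tightened with `chmod o-w`, after confirming that nothing legitimately depends on the broader permission.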

A sysadmin comfortable with Python can automate many of these checks. For instance, the following Python script checks disk usage and sends an email alert if it exceeds a threshold.

```python
import shutil
import smtplib
from email.mime.text import MIMEText

# Configuration (replace the placeholder addresses and server with your own)
THRESHOLD_PERCENT = 85.0
PARTITION_TO_CHECK = "/"
SENDER_EMAIL = "alerts@yourdomain.com"
RECEIVER_EMAIL = "admin@yourdomain.com"
SMTP_SERVER = "smtp.yourdomain.com"


def check_disk_usage(partition):
    """Return the percentage of used space on the given partition."""
    total, used, free = shutil.disk_usage(partition)
    percent_used = (used / total) * 100
    return percent_used


def send_alert_email(percent_used):
    """Send an email alert through the configured SMTP server."""
    subject = f"Disk Usage Alert: {percent_used:.2f}% on {PARTITION_TO_CHECK}"
    body = f"Warning: Disk usage on partition '{PARTITION_TO_CHECK}' has reached {percent_used:.2f}%."
    msg = MIMEText(body)
    msg['Subject'] = subject
    msg['From'] = SENDER_EMAIL
    msg['To'] = RECEIVER_EMAIL
    # Connects on the default SMTP port (25) without authentication;
    # add server.starttls() and server.login() if your mail server requires them
    with smtplib.SMTP(SMTP_SERVER) as server:
        server.send_message(msg)
    print("Alert email sent.")


if __name__ == "__main__":
    usage = check_disk_usage(PARTITION_TO_CHECK)
    print(f"Current disk usage on '{PARTITION_TO_CHECK}': {usage:.2f}%")
    if usage > THRESHOLD_PERCENT:
        send_alert_email(usage)
```

Conclusion: Your Journey in Linux DevOps

We’ve journeyed from the fundamental power of the Linux command line to the scalable heights of cloud-native orchestration. The key takeaway is that Linux is not merely a component of a DevOps stack; it is the thread that weaves everything together. Mastering Bash scripting provides the initial building blocks for automation. Tools like Ansible let you manage infrastructure as code. Docker and Kubernetes, built on core Linux kernel features, enable the agility and resilience that modern applications demand.

Your path forward in Linux DevOps is one of continuous learning. Set up a lab environment on a virtual machine or a spare computer running a distribution like Fedora Linux or Arch Linux to experiment. Practice writing scripts, build Docker containers for your favorite applications, and explore the vast ecosystem of tools that make Linux such a powerful platform for developers and operations engineers alike. By building a solid foundation in Linux, you are empowering yourself to build, deploy, and manage the next generation of software.