Introduction

In today’s data-driven world, a robust backup and recovery strategy is not just a best practice; it’s a fundamental requirement for survival. For system administrators managing Linux servers—from a single web server running on Debian Linux to a complex Kubernetes cluster on Red Hat Linux—the threat of data loss is ever-present. Hardware failure, accidental deletion, software corruption, and malicious cyberattacks can strike at any moment, making a reliable backup the last line of defense. A well-designed backup strategy ensures business continuity, minimizes downtime, and provides peace of mind.

This comprehensive guide delves into the essential aspects of Linux backup and recovery. We will explore core concepts, from different backup types to the command-line tools that form the backbone of any Linux administration toolkit. We’ll provide practical, real-world examples, including Bash scripting for automation and crucial SQL code snippets for handling database backups. Whether you’re a seasoned Linux DevOps professional or just starting your journey with an Ubuntu tutorial, this article will equip you with the knowledge to build a resilient and secure backup system for your critical infrastructure.

Core Concepts and Foundational Tools

Before diving into complex scripts and automation, it’s crucial to understand the fundamental principles and tools that govern Linux backups. A solid grasp of these concepts allows you to choose the right strategy for your specific needs, balancing factors like recovery time, storage costs, and data consistency.

Backup Types: Full, Incremental, and Differential

Backup strategies are typically built around three primary types, each with distinct advantages and disadvantages:

- Full Backup: This is the simplest type. It creates a complete copy of all selected data. While straightforward to restore, full backups are the most time-consuming and storage-intensive, making them impractical to run frequently.

- Incremental Backup: An incremental backup only copies data that has changed since the last backup of any type (full or incremental). This makes them very fast and space-efficient. However, restoration is more complex, as it requires the last full backup and all subsequent incremental backups in the correct order.

- Differential Backup: A differential backup copies all data that has changed since the last full backup. Each subsequent differential backup grows larger than the previous one. Restoring is simpler than with incrementals, requiring only the last full backup and the latest differential backup.

Essential Linux Backup Utilities

The Linux terminal is home to powerful utilities that have been trusted by system administrators for decades. These tools are scriptable, versatile, and form the building blocks of most backup solutions.

- tar (Tape Archive): The classic utility for bundling multiple files and directories into a single archive file (`.tar`). When combined with compression tools like `gzip` (`.tar.gz`) or `bzip2` (`.tar.bz2`), it becomes an efficient way to store and transfer data.

- rsync (Remote Sync): A highly efficient tool for synchronizing files and directories between two locations, either locally or over a network via Linux SSH. Its key feature is the delta-transfer algorithm, which only sends the differences between files, significantly reducing network traffic.

- dd (Data Duplicator): A low-level utility that copies raw data block by block. It’s incredibly powerful for creating exact disk images or cloning entire partitions. However, it must be used with extreme caution, as a simple typo can wipe out an entire disk.

Here is a practical example of using `tar` to create a compressed full backup of a web server’s document root.

# Create a compressed tarball of the /var/www/html directory

# The backup will be named with the current date for easy identification.

#

# Flags explained:

# -c : Create a new archive.

# -z : Compress the archive with gzip.

# -v : Verbosely list files processed.

# -f : Specifies the filename of the archive.

BACKUP_FILENAME="web_backup_$(date +%Y-%m-%d).tar.gz"

SOURCE_DIR="/var/www/html"

DEST_DIR="/mnt/backups/web"

# Ensure the destination directory exists

mkdir -p "$DEST_DIR"

# Create the backup

tar -czvf "${DEST_DIR}/${BACKUP_FILENAME}" "$SOURCE_DIR"

echo "Backup complete: ${DEST_DIR}/${BACKUP_FILENAME}"Implementing a Practical Backup Strategy

A manual backup is better than no backup, but a truly robust strategy is automated, consistent, and includes all critical system components, especially databases. This section covers how to use shell scripting and database-specific tools to create a reliable, automated workflow.

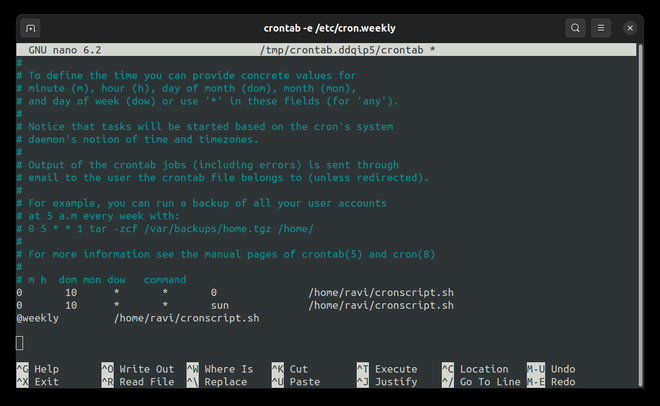

Linux command line backup script – How to Run a Crontab Job Every Week on Sunday – GeeksforGeeks

Automating Backups with Cron and Bash Scripting

The key to consistency is automation. In the Linux world, the cron daemon is the standard scheduler for running repetitive tasks. By combining `rsync` with a simple Bash script and a cron job, you can create a “set it and forget it” remote backup solution.

The following script uses `rsync` to efficiently synchronize a user’s home directory to a remote backup server. It uses SSH for secure transport and includes the `–delete` flag to remove files from the destination if they are deleted from the source, keeping the backup a true mirror.

#!/bin/bash

# A simple Bash script to perform a remote backup using rsync and SSH.

# This script assumes you have set up SSH key-based authentication

# for passwordless login to the remote server.

SOURCE_DIR="/home/myuser"

DEST_USER="backupuser"

DEST_HOST="backupserver.example.com"

DEST_DIR="/backups/myuser_home"

LOG_FILE="/var/log/daily_backup.log"

# Use rsync to synchronize files.

# -a : Archive mode (preserves permissions, ownership, timestamps, etc.)

# -v : Verbose output.

# -z : Compress file data during the transfer.

# --delete : Delete extraneous files from the destination.

# -e 'ssh' : Specify the remote shell to use.

rsync -avz --delete -e 'ssh' "$SOURCE_DIR" "${DEST_USER}@${DEST_HOST}:${DEST_DIR}" >> "$LOG_FILE"

# Log the completion of the backup

echo "Backup for $SOURCE_DIR completed on $(date)" >> "$LOG_FILE"To run this script automatically every day at 2:00 AM, you would add the following line to your crontab by running `crontab -e`:

0 2 * * * /usr/local/bin/remote_backup.sh

Backing Up Linux Databases: PostgreSQL and MySQL

File-level backups of live database directories are notoriously unreliable. A running database is constantly writing to its files, and a simple copy can catch the data in an inconsistent, corrupted state. To create a reliable backup, you must use the database’s native dumping tools, such as `pg_dump` for PostgreSQL Linux or `mysqldump` for MySQL Linux.

These tools connect to the database server and export the data in a consistent state, typically as a large SQL file that can be used to recreate the schema and repopulate the data. The following example demonstrates how to back up a PostgreSQL database.

# Command to dump a PostgreSQL database to a custom-format archive.

# The custom format (-Fc) is compressed and allows for more flexible

# restoration options with pg_restore.

DB_NAME="production_db"

DB_USER="backup_user"

BACKUP_FILE="/mnt/backups/db/${DB_NAME}_$(date +%Y-%m-%d).dump"

# The pg_dump command

# -U : Specifies the database user.

# -W : Prompts for the user's password (for security).

# -F c : Sets the output format to custom (compressed archive).

# -f : Specifies the output file.

pg_dump -U "$DB_USER" -W -F c -f "$BACKUP_FILE" "$DB_NAME"

echo "PostgreSQL backup complete: $BACKUP_FILE"

# To restore this backup, you would first create an empty database

# and then use the pg_restore utility:

# createdb -U postgres new_production_db

# pg_restore -U postgres -d new_production_db "$BACKUP_FILE"Advanced Techniques and Data Consistency

For mission-critical systems, basic backups may not be enough. Advanced techniques are required to ensure data integrity during the backup process and to verify that the restored data is valid and complete. This involves understanding database transactions and leveraging powerful file system features like LVM.

Ensuring Database Consistency with Transactions

Why are tools like `pg_dump` so important? They leverage the database’s transactional engine. When `pg_dump` starts, it typically initiates a `REPEATABLE READ` transaction. This creates a consistent “snapshot” of the database at that exact moment in time. All data exported by `pg_dump` will reflect the state of the database when the transaction began, regardless of any writes, updates, or deletes that occur while the dump is in progress. This guarantees a logically consistent backup without needing to take the database offline.

Practical SQL for Backup Verification

A backup is only as good as its last successful restore. After restoring a database to a staging environment, you must verify its integrity. This involves more than just checking if the restore command completed without errors. You should run a series of SQL queries to validate the schema, check for data, and ensure key structures like indexes are present.

Here are some example PostgreSQL queries you can run on a restored database to perform a basic sanity check.

-- A set of SQL queries to verify a restored PostgreSQL database.

-- 1. Verify that the expected tables exist in the public schema.

-- This checks if the schema structure was restored correctly.

SELECT table_name

FROM information_schema.tables

WHERE table_schema = 'public'

ORDER BY table_name;

-- 2. Perform a data integrity check by counting rows in a critical table.

-- Compare this count to the count from the production database before the backup.

SELECT COUNT(*) AS user_count

FROM users;

-- 3. Check for the existence of indexes on a key table.

-- Missing indexes can severely degrade application performance.

SELECT

indexname,

indexdef

FROM

pg_indexes

WHERE

tablename = 'orders'

ORDER BY

indexname;

-- 4. Check the most recent record in a transactional table.

-- This helps verify that recent data was included in the backup.

SELECT *

FROM transactions

ORDER BY transaction_date DESC

LIMIT 1;Using LVM Snapshots for Live System Backups

For backing up entire file systems that contain actively running services, the Logical Volume Manager (LVM) offers a powerful solution: snapshots. An LVM snapshot creates a point-in-time, copy-on-write, block-level clone of a logical volume. You can mount this snapshot and back it up as if it were a static file system, ensuring all files (including open ones) are in a consistent state. The process is: create the snapshot, mount it, back up the data from the mount point using `rsync` or `tar`, then unmount and remove the snapshot. This minimizes the performance impact on the live system.

Best Practices, Security, and Optimization

Implementing backup tools and scripts is only part of the equation. Following established best practices ensures your backup strategy is resilient, secure, and reliable when you need it most.

The 3-2-1 Backup Rule

This is a cornerstone of data protection strategy. It dictates that you should have:

- 3 copies of your data (your production data and two backups).

- 2 different storage media (e.g., local disk and cloud storage like AWS S3).

- 1 copy located off-site (to protect against physical disasters like fire or flood).

Security Considerations

Backups are a prime target for attackers. If your backups are compromised, recovery becomes impossible. Securing them is non-negotiable.

- Encryption: Always encrypt your backup archives, both at rest and in transit. Use tools like GPG to encrypt `tar` files and rely on `rsync` over SSH to protect data on the wire.

- Access Control: Apply strict Linux permissions to your backup files and scripts. Backup data should only be readable by a dedicated backup user and root. Use `chmod` and `chown` to enforce the principle of least privilege.

- Immutable Storage: For ultimate protection against ransomware, consider using immutable storage options available in cloud environments like AWS Linux (S3 Object Lock).

Testing and Verification

An untested backup is a liability. Regularly schedule full restore drills to a separate, non-production environment. This practice not only verifies the integrity of your backup data but also ensures that your recovery procedures are well-documented and effective. Document the time it takes to restore, as this is your Recovery Time Objective (RTO).

Conclusion

A comprehensive Linux backup strategy is a multi-layered defense built on a foundation of solid concepts, powerful tools, and disciplined processes. We’ve seen how fundamental utilities like `tar` and `rsync`, combined with the automation of Bash scripting and cron, can create a reliable system for file-level backups. For databases like PostgreSQL or MySQL, using native dump tools is critical for ensuring data consistency.

By adopting advanced techniques like LVM snapshots, adhering to the 3-2-1 rule, and prioritizing security through encryption and access control, you can build a system that is resilient to failure and secure from threats. The most important takeaway is that a backup strategy is a living process. It must be regularly tested, monitored, and refined. By investing the time to build and maintain a robust backup and recovery plan, you ensure the long-term stability and integrity of your critical Linux systems.