Introduction to Bash Loops: The Engine of Automation

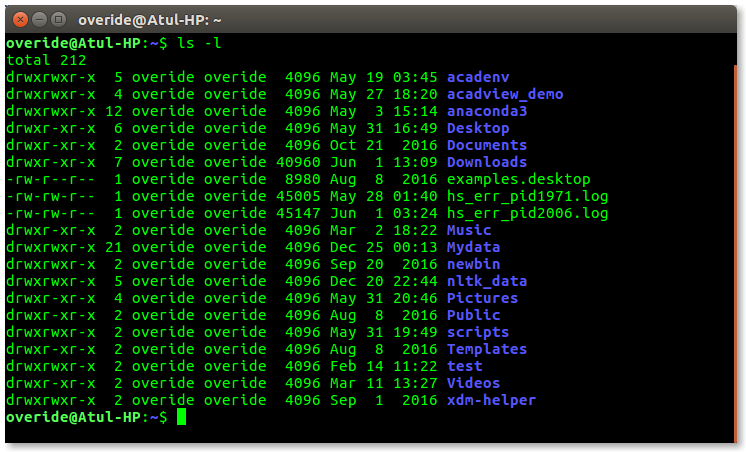

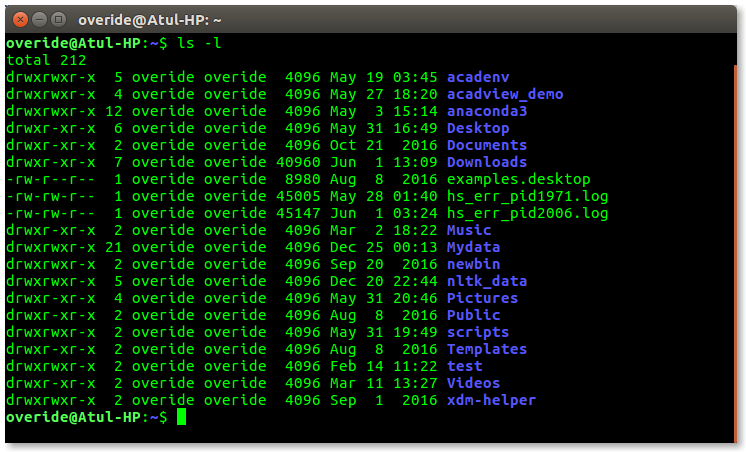

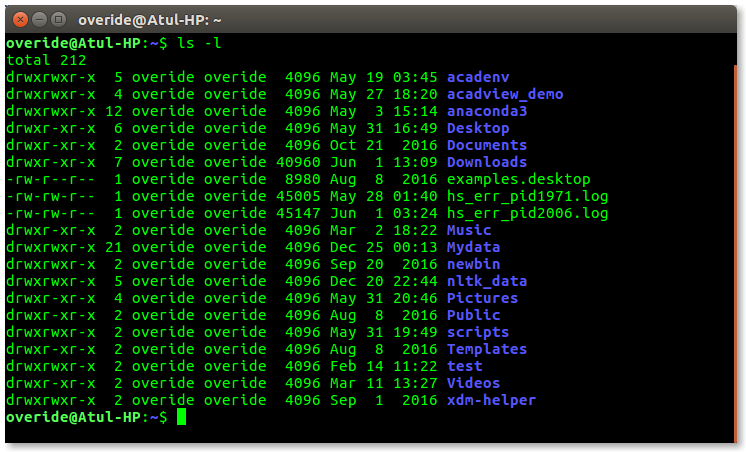

In the world of modern IT, automation is not just a luxury; it's a necessity. For anyone working with Linux servers, whether in system administration, DevOps, or development, the ability to automate repetitive tasks is a critical skill. At the heart of this automation lies Bash scripting, the language of the default interactive shell on most Linux distributions. While simple commands can get you far, the true power of shell scripting is unlocked when you master its control structures, and none are more fundamental than loops. Loops are the engine that drives repetitive actions, allowing you to iterate over lists of files, process lines from a log, check the status of multiple servers, or perform any task that needs to be done more than once.

This comprehensive guide will take you on a deep dive into the world of Bash loops. We will move beyond basic syntax to explore the nuances of different loop types, from the classic for loop to the condition-driven while and until loops, and even the C-style syntax familiar to programmers. We'll cover essential control statements like break and continue, and demonstrate practical, real-world applications that you can immediately apply on any Linux distribution, from a Debian desktop to a production Red Hat Enterprise Linux server. By the end of this article, you'll have the knowledge to take your automation skills to the next level.

The Foundations of Iteration: `for` and `while` Loops

Before diving into complex automation, it’s crucial to understand the two primary workhorses of Bash looping: the for loop and the while loop. Each serves a distinct purpose and is suited for different kinds of iterative tasks.

The Classic `for` Loop: Iterating Over a Finite List

The for loop is your go-to tool when you have a known, finite set of items you need to process. This “list” can be a series of strings, filenames, server hostnames, or even numbers. The syntax is straightforward and readable: you define a variable that will take on the value of each item in the list, one by one, for each iteration of the loop.

Consider a common System Administration task: checking the network connectivity of several critical servers. Instead of manually pinging each one, you can automate it with a simple for loop.

```bash
#!/bin/bash

# A list of servers to check
servers=("web01.example.com" "db01.example.com" "api.example.com" "nonexistent.host")

echo "--- Starting Server Connectivity Check ---"

for server in "${servers[@]}"; do
    echo "Pinging $server..."

    # Ping the server 3 times, discarding both stdout and stderr
    if ping -c 3 "$server" &> /dev/null; then
        echo "✅ $server is reachable."
    else
        echo "❌ $server is UNREACHABLE."
    fi

    echo "--------------------"
done

echo "--- Server Connectivity Check Complete ---"
```

In this example, the loop iterates through the servers array. For each iteration, the server variable holds the current hostname. The use of "${servers[@]}" is crucial; it ensures that each element of the array is treated as a separate word, even if it contains spaces.
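To see why the quotes matter, here is a small sketch with a hypothetical `hosts` array whose second element contains a space. The quoted expansion yields exactly one iteration per element, while the unquoted form lets word splitting break `"build server"` into two separate words.

```bash
#!/usr/bin/env bash
# Hypothetical array; the second element contains a space.
hosts=("web01" "build server" "db01")

# Quoted expansion: one iteration per array element.
quoted_iterations=0
for h in "${hosts[@]}"; do
    quoted_iterations=$((quoted_iterations + 1))
done

# Unquoted expansion: word splitting turns "build server" into two words.
unquoted_iterations=0
for h in ${hosts[@]}; do
    unquoted_iterations=$((unquoted_iterations + 1))
done

echo "quoted: $quoted_iterations, unquoted: $unquoted_iterations"
# prints: quoted: 3, unquoted: 4
```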

The Condition-Driven `while` Loop

Unlike the for loop, which runs for a fixed number of items, the while loop runs as long as its condition command succeeds (exits with status 0). This makes it perfect for tasks where you don't know the number of iterations in advance. It's often used for reading files line by line, waiting for a service to start, or in scripts that perform continuous system monitoring.

A classic DevOps pattern is a deployment script that needs to wait for a database service to become available before proceeding. A while loop is the ideal tool for this job.

```bash
#!/bin/bash

# Script to wait for a PostgreSQL database to be ready
MAX_RETRIES=10
RETRY_COUNT=0

echo "Waiting for PostgreSQL database to become available..."

# The loop continues as long as the pg_isready command fails
while ! pg_isready -h localhost -p 5432 -q; do
    # Give up once the retry budget is exhausted, so the loop can't run forever
    if [ "$RETRY_COUNT" -ge "$MAX_RETRIES" ]; then
        echo "❌ Timed out waiting for the database. Please check the PostgreSQL service."
        exit 1
    fi

    RETRY_COUNT=$((RETRY_COUNT+1))
    echo "Database is not yet available. Retrying in 5 seconds... (Attempt $RETRY_COUNT/$MAX_RETRIES)"
    sleep 5
done

# Only reached when pg_isready has actually succeeded
echo "✅ Database is ready! Proceeding with application startup."
# Add commands to start your application here
```

This script repeatedly checks the status of a PostgreSQL database. The loop continues as long as the pg_isready command returns a non-zero exit code (indicating the server is not accepting connections). Inside the loop, the retry count is checked before each wait, which prevents an infinite loop, and the success message is printed only after pg_isready itself reports success, so a database that comes up on the final check is never mistaken for a timeout.
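Bash also provides an `until` loop, the inverse of `while`: it keeps running its body as long as the condition command fails (returns a non-zero exit status). The sketch below expresses the same retry idea with `until`. The `check_ready` function is a hypothetical stand-in for a real probe such as `pg_isready`; it simply succeeds on its third call so the example runs anywhere without a database.

```bash
#!/usr/bin/env bash
# check_ready is a hypothetical stand-in for a real readiness probe.
# It fails on its first two calls and succeeds on the third.
attempts=0
check_ready() {
    attempts=$((attempts + 1))
    (( attempts >= 3 ))
}

MAX_RETRIES=5
retry_count=0

# until runs the body while the condition FAILS, the opposite of while.
until check_ready; do
    retry_count=$((retry_count + 1))
    if (( retry_count >= MAX_RETRIES )); then
        echo "Timed out after $retry_count retries" >&2
        exit 1
    fi
    echo "Not ready yet (retry $retry_count/$MAX_RETRIES)"
    sleep 1   # a real script would typically wait longer
done

echo "Ready after $attempts checks"
```

Because `check_ready` is a function called in the current shell (not a subshell), the `attempts` counter it updates is still visible after the loop ends.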

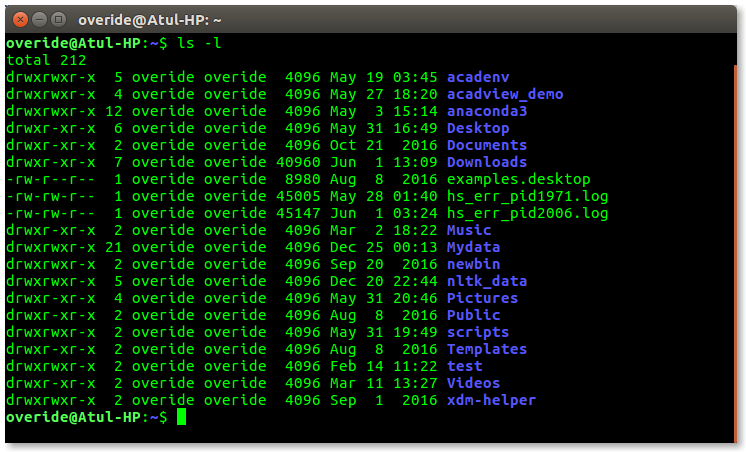

Expanding Your Looping Toolkit

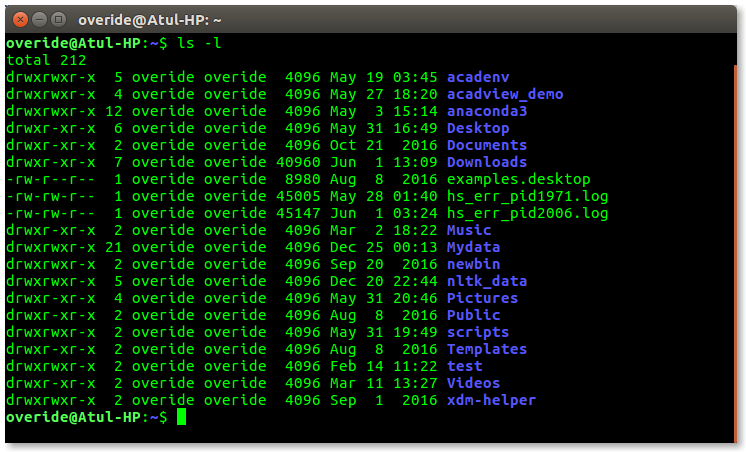


While for and while cover most use cases, Bash offers other looping constructs that provide more flexibility and familiarity for users coming from other programming backgrounds. Mastering these will make your scripts more efficient and readable.

The C-Style `for` Loop: For the Programmers

If you have a background in languages like C, Java, or JavaScript, you’ll feel right at home with Bash’s C-style for loop. It uses the classic three-part structure: an initializer, a condition, and a post-loop expression (typically an increment). This format is ideal for purely numerical iterations where you need to perform an action a specific number of times.

Let's say you are setting up a simple backup layout and need several numbered backup directories for a project. A C-style loop makes this trivial.

```bash
#!/bin/bash

# Create 5 versioned backup directories for a project
PROJECT_NAME="webapp"
BACKUP_BASE_DIR="/mnt/backups/$PROJECT_NAME"

echo "Creating backup directories in $BACKUP_BASE_DIR..."

# Ensure the base directory exists
mkdir -p "$BACKUP_BASE_DIR"

for (( i=1; i<=5; i++ )); do
    DIR_NAME="$BACKUP_BASE_DIR/v$i"

    if [ -d "$DIR_NAME" ]; then
        echo "Directory $DIR_NAME already exists. Skipping."
    else
        mkdir "$DIR_NAME"
        echo "Created directory: $DIR_NAME"
    fi
done

echo "Backup directory structure created."
```

The double-parentheses ((...)) syntax enables arithmetic evaluation, making it a clean and efficient way to handle numerical loops without needing external commands like seq or expr.
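A common alternative for counting is brace expansion (`{1..5}`), but it has a catch worth seeing once: brace expansion happens before variable expansion, so an upper bound stored in a variable is not turned into a sequence. This sketch, with an assumed bound `n=3`, compares the two approaches.

```bash
#!/usr/bin/env bash
n=3   # assumed upper bound, stored in a variable

# Brace expansion runs before $n is expanded, so {1..$n} is not a valid
# sequence at that point; the loop sees the single literal word "{1..3}".
brace_result=()
for i in {1..$n}; do
    brace_result+=("$i")
done

# The C-style loop evaluates n arithmetically on every iteration.
cstyle_result=()
for (( i = 1; i <= n; i++ )); do
    cstyle_result+=("$i")
done

echo "brace:   ${brace_result[*]}"    # prints: brace:   {1..3}
echo "c-style: ${cstyle_result[*]}"   # prints: c-style: 1 2 3
```

When the bounds are literal numbers, `{1..5}` is fine; when they come from variables or user input, the C-style loop is the safer choice.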

Looping Through Files and Command Output

One of the most powerful applications of loops in Shell Scripting is processing text data, either from a file or from the output of another command. The standard and safest way to read a file line by line is with a while read loop.

Imagine you have a text file, users.txt, containing a list of usernames you need to add to your system. This script demonstrates how to read that file and create each user, a common Linux user-management task.

```bash
#!/bin/bash

# Script to create users from a list in a file
USER_FILE="users.txt"

if [ ! -f "$USER_FILE" ]; then
    echo "Error: User file '$USER_FILE' not found."
    exit 1
fi

echo "--- Starting User Creation Process ---"

# IFS= keeps leading/trailing whitespace; -r prevents backslash interpretation.
# '|| [[ -n "$line" ]]' also processes a final line that lacks a trailing newline.
while IFS= read -r line || [[ -n "$line" ]]; do
    # Trim leading and trailing whitespace with parameter expansion
    line="${line#"${line%%[![:space:]]*}"}"
    line="${line%"${line##*[![:space:]]}"}"

    # Skip empty lines and lines that start with a # (comments)
    if [[ -z "$line" || "$line" == \#* ]]; then
        continue
    fi

    username="$line"

    if id "$username" &>/dev/null; then
        echo "User '$username' already exists. Skipping."
    else
        echo "Creating user '$username'..."
        # useradd needs root privileges, hence sudo
        if sudo useradd -m -s /bin/bash "$username"; then
            echo "✅ Successfully created user '$username'."
        else
            echo "❌ Failed to create user '$username'."
        fi
    fi
done < "$USER_FILE"

echo "--- User Creation Process Finished ---"
```

This pattern is extremely robust. IFS= stops read from stripping leading and trailing whitespace (the script then trims it explicitly, so whitespace-only lines are skipped as empty), and -r prevents backslash escapes from being processed. The input redirection < "$USER_FILE" after the done keyword feeds the file into the loop. The same logic can be used to process command output by piping it, for example: df -h | while IFS= read -r line; do ...; done.
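One caveat with piping into a `while read` loop: in Bash, each part of a pipeline runs in a subshell, so variables changed inside the loop are lost when it ends. The minimal sketch below uses an in-script string as a stand-in for real command output. Process substitution (`done < <(command)`) feeds the same data while keeping the loop in the current shell, so the counter survives. This assumes the default setting, where the `lastpipe` shell option is off.

```bash
#!/usr/bin/env bash
# Stand-in for real command output such as `df -h`.
sample=$'alpha\nbeta\ngamma'

# Piped: the loop runs in a subshell, so this counter is never updated here.
piped_count=0
printf '%s\n' "$sample" | while IFS= read -r line; do
    piped_count=$((piped_count + 1))
done

# Process substitution: the loop runs in the current shell.
subst_count=0
while IFS= read -r line; do
    subst_count=$((subst_count + 1))
done < <(printf '%s\n' "$sample")

echo "piped: $piped_count, process substitution: $subst_count"
# prints: piped: 0, process substitution: 3
```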

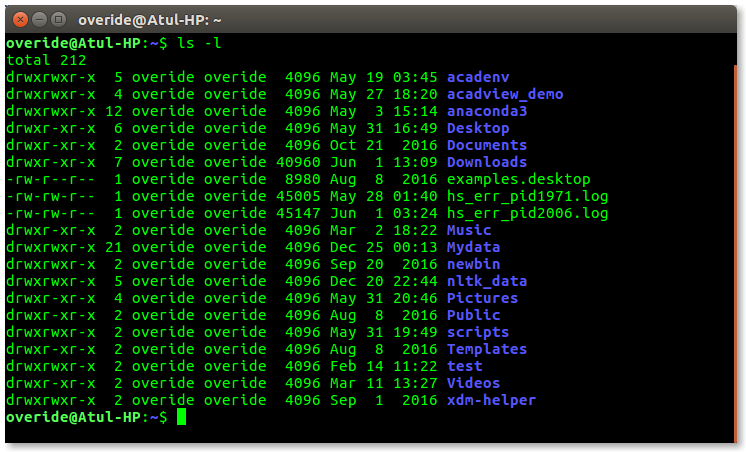

Advanced Loop Control and Complex Scenarios

Once you're comfortable with the basic loop structures, you can begin to introduce more sophisticated logic to handle complex situations. This includes controlling the flow of your loops and nesting them to perform multi-dimensional tasks.

Controlling Flow: `break` and `continue`

Sometimes you don't want a loop to run to completion. Bash provides two commands for fine-grained control over loop execution:

- `break`: Immediately terminates the loop it is in. Execution continues with the first command after the `done` keyword.
- `continue`: Immediately skips the rest of the commands in the current iteration and starts the next iteration of the loop.

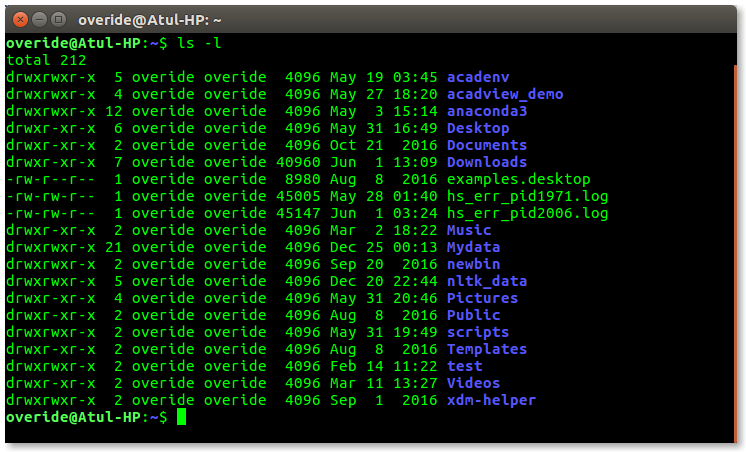

These are invaluable for error handling or filtering data. For example, in a Linux Security context, you might scan an authentication log for the first sign of a brute-force attack and then stop.
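As a sketch of that idea, the loop below reads a few `sshd`-style log lines (made up for the example and fed in through a here-document rather than a real `/var/log/auth.log`) and uses `continue` to skip everything except failed password attempts. It counts failures per source IP and uses `break` to stop at the first IP that reaches a hypothetical threshold of three. The associative array requires Bash 4 or later.

```bash
#!/usr/bin/env bash
THRESHOLD=3                      # hypothetical alert threshold
declare -A failures=()           # failed attempts per source IP (Bash 4+)
offender=""
ip_re='from ([0-9.]+) port'      # captures the IP after "from"

while IFS= read -r line; do
    # continue: ignore anything that is not a failed password attempt
    [[ "$line" == *"Failed password"* ]] || continue
    [[ $line =~ $ip_re ]] || continue
    ip="${BASH_REMATCH[1]}"

    count=$(( ${failures[$ip]:-0} + 1 ))
    failures[$ip]=$count

    # break: stop scanning at the first sign of a brute-force attempt
    if (( count >= THRESHOLD )); then
        offender="$ip"
        break
    fi
done <<'EOF'
Jan 10 10:00:01 host sshd[100]: Failed password for root from 203.0.113.5 port 51000 ssh2
Jan 10 10:00:02 host sshd[101]: Accepted publickey for alice from 198.51.100.7 port 52000 ssh2
Jan 10 10:00:03 host sshd[102]: Failed password for root from 203.0.113.5 port 51001 ssh2
Jan 10 10:00:04 host sshd[103]: Failed password for invalid user admin from 203.0.113.5 port 51002 ssh2
Jan 10 10:00:05 host sshd[104]: Failed password for root from 192.0.2.9 port 53000 ssh2
EOF

if [[ -n "$offender" ]]; then
    echo "Possible brute-force attack from $offender"
fi
```

The last log line is never read: `break` ends the loop as soon as 203.0.113.5 reaches the threshold on the fourth line.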

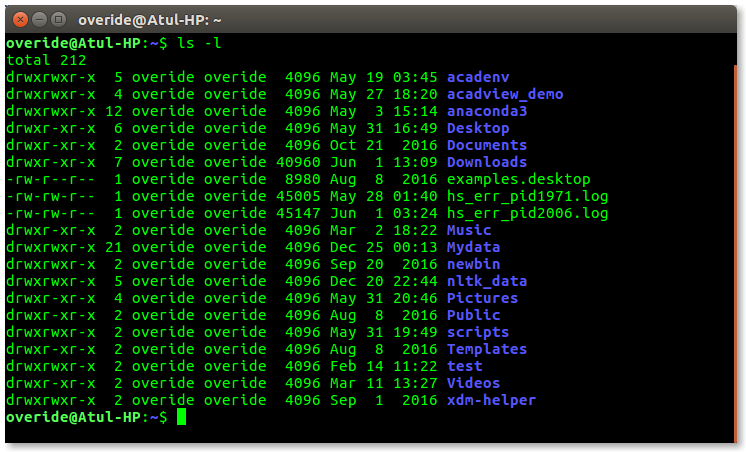


Nested Loops: Iteration Within Iteration

Nested loops are simply loops inside other loops. They are perfect for situations where you need to perform an action on every combination of items from two different lists. Configuration management tools like Ansible handle this kind of fleet-wide iteration at scale, but nested loops are still a concept well worth mastering in Bash itself.
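Here is a minimal sketch with two hypothetical lists, `environments` and `regions`. The first pair of loops visits every combination and uses `continue` to skip one of them, which only affects the inner loop. Both `break` and `continue` also accept a numeric argument, so the second pair uses `break 2` to leave both loops as soon as the first match is found.

```bash
#!/usr/bin/env bash
# Hypothetical inventory used only for this illustration.
environments=("staging" "production")
regions=("eu-west" "us-east" "ap-south")

# Visit every combination; continue skips one pair and only affects the inner loop.
pairs=()
for env_name in "${environments[@]}"; do
    for region in "${regions[@]}"; do
        if [[ "$env_name" == "staging" && "$region" == "ap-south" ]]; then
            continue
        fi
        pairs+=("$env_name/$region")
    done
done
printf '%s\n' "${pairs[@]}"

# break 2 exits both the inner and the outer loop at once.
first_match=""
for env_name in "${environments[@]}"; do
    for region in "${regions[@]}"; do
        if [[ "$region" == "us-east" ]]; then
            first_match="$env_name/$region"
            break 2
        fi
    done
done
echo "First us-east target: $first_match"
```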

Let's combine these advanced concepts. The following script iterates through a list of servers (for example, web servers running Nginx or Apache, plus a database host) and checks a list of critical services on each one over SSH. It uses nested loops and control statements for robust checking.

```bash
#!/bin/bash

# An advanced script using nested loops and control flow
# to check services on multiple remote servers.
servers=("web01.example.com" "web02.example.com" "db01.example.com")
services_to_check=("nginx" "sshd" "fail2ban")
db_services=("postgresql")

# BatchMode=yes makes ssh fail instead of hanging on a password prompt
SSH_OPTS=(-o BatchMode=yes -o ConnectTimeout=5)

for server in "${servers[@]}"; do
    echo "--- Connecting to $server ---"

    # Use a quick check to see if the server is even online.
    # If not, skip to the next server using 'continue'.
    if ! ssh "${SSH_OPTS[@]}" "$server" exit &>/dev/null; then
        echo "❌ Cannot connect to $server. Skipping."
        echo "---------------------------"
        continue
    fi

    # Check the common services on every server
    for service in "${services_to_check[@]}"; do
        echo "Checking for service: $service on $server..."
        # Execute remote command via SSH
        if ssh "${SSH_OPTS[@]}" "$server" "systemctl is-active --quiet $service"; then
            echo "✅ Service '$service' is running."
        else
            echo "⚠️ Service '$service' is NOT running on $server!"
        fi
    done

    # Special check only for the database server
    if [[ "$server" == "db01.example.com" ]]; then
        echo "Performing special database checks on $server..."
        for db_service in "${db_services[@]}"; do
            if ssh "${SSH_OPTS[@]}" "$server" "systemctl is-active --quiet $db_service"; then
                echo "✅ DB Service '$db_service' is active."
            else
                echo "🚨 CRITICAL: DB Service '$db_service' is DOWN on $server!"
                # This is a critical failure; we could choose to exit the entire script.
                # For this example, we just break out of the inner db loop.
                break
            fi
        done
    fi

    echo "---------------------------"
done

echo "All checks complete."
```

Best Practices, Pitfalls, and Performance

Writing functional loops is one thing; writing robust, efficient, and maintainable loops is another. Adhering to best practices will save you countless hours of debugging and make your scripts more reliable, especially in production environments such as a Kubernetes cluster or a fleet of AWS EC2 instances.

Always Quote Your Variables

This is the single most common pitfall for new Bash scripters. Filenames and variable content can contain spaces, tabs, or other special characters. If you don't enclose your variable expansions in double quotes (e.g., "$filename"), the shell will perform word splitting, leading to unexpected and often disastrous results. Make it a habit to quote every variable expansion unless you have a specific reason not to.
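Here is a small demonstration using a throwaway helper, `count_args`, that only reports how many arguments it received. An unquoted variable is split on whitespace, and its contents are also subject to filename globbing, so a value like `*` can silently turn into a list of files. The sketch works in a temporary directory so the glob result is predictable.

```bash
#!/usr/bin/env bash
# count_args is a throwaway helper: it prints how many arguments it got.
count_args() { echo "$#"; }

filename="quarterly report.txt"
unquoted=$(count_args $filename)   # word splitting: 2 arguments
quoted=$(count_args "$filename")   # 1 argument

# Globbing: an unquoted "*" expands to the files in the current directory.
tmpdir=$(mktemp -d)
touch "$tmpdir/a.log" "$tmpdir/b.log"
pattern="*"
glob_unquoted=$(cd "$tmpdir" && count_args $pattern)   # 2 (a.log, b.log)
glob_quoted=$(cd "$tmpdir" && count_args "$pattern")   # 1 (the literal *)
rm -r "$tmpdir"

echo "spaces: unquoted=$unquoted quoted=$quoted"
echo "glob:   unquoted=$glob_unquoted quoted=$glob_quoted"
```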

Avoid Parsing the Output of `ls`

It's tempting to write for file in $(ls), but this is fragile and unsafe. The output of ls is designed for human eyes, not for scripts, and it will break on filenames with spaces or special characters. A much safer way to iterate over files is to use the shell's built-in globbing:

`for file in /path/to/files/*.log; do ...; done`

For more complex file finding, feed the output of the find command into a while read loop, preferably with null delimiters for maximum safety: `find . -type f -print0 | while IFS= read -r -d '' file; do ...; done`.
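The sketch below exercises both techniques in a temporary directory that contains a filename with a space. By default, a glob that matches nothing is passed through as the literal pattern, so the loop body runs once; enabling Bash's `nullglob` option makes it expand to nothing instead. The `find ... -print0` loop is fed through process substitution rather than a pipe, so its counter is still available after the loop.

```bash
#!/usr/bin/env bash
# Throwaway directory: one awkward filename, one normal one, one empty subdir.
workdir=$(mktemp -d)
touch "$workdir/app one.log" "$workdir/app-two.log"
mkdir "$workdir/empty"

# Default behaviour: an unmatched glob is passed through literally (one iteration).
literal_matches=0
for file in "$workdir"/empty/*.log; do
    literal_matches=$((literal_matches + 1))
done

# With nullglob, an unmatched glob expands to nothing (zero iterations).
shopt -s nullglob
nullglob_matches=0
for file in "$workdir"/empty/*.log; do
    nullglob_matches=$((nullglob_matches + 1))
done
shopt -u nullglob

# NUL-delimited find output is safe for any filename; process substitution
# keeps the loop in the current shell so $found survives.
found=0
while IFS= read -r -d '' file; do
    found=$((found + 1))
    echo "Found: ${file##*/}"
done < <(find "$workdir" -type f -name '*.log' -print0)

rm -r "$workdir"
echo "literal: $literal_matches, nullglob: $nullglob_matches, found: $found"
```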

Know When to Use a Different Tool

Bash loops are incredibly useful, but they can be slow. Bash interprets each command as it runs, and forking a new process inside a loop (e.g., calling grep, awk, or sed on every iteration) can become a significant performance bottleneck when processing thousands of items. For heavy data processing, let a tool built for that purpose, such as awk or sed, process the whole file in a single pass instead of invoking it once per line. For complex logic, richer data structures, and better performance, it might be time to switch to a more general-purpose language like Python, which is widely used in DevOps and system administration for exactly this reason.
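To make the cost concrete, here is a sketch that totals the second column of some hypothetical `service bytes` records three ways: running `cut` through a command substitution on every line, letting `read` split the fields with no extra processes, and a single `awk` pass. All three produce the same total; the difference is that the first approach starts extra processes on every line, which is what becomes slow at scale.

```bash
#!/usr/bin/env bash
# Hypothetical "service bytes" records used only for this comparison.
data=$'nginx 1200\nsshd 300\nnginx 800\npostgres 5000'

# 1) Slow pattern: a command substitution plus a cut process on every line.
total_forking=0
while IFS= read -r line; do
    bytes=$(echo "$line" | cut -d' ' -f2)
    total_forking=$((total_forking + bytes))
done <<< "$data"

# 2) Pure Bash: read splits the fields itself, no extra processes.
total_builtin=0
while read -r _ bytes; do
    total_builtin=$((total_builtin + bytes))
done <<< "$data"

# 3) Single pass: one awk process reads all of the input.
total_awk=$(awk '{ sum += $2 } END { print sum }' <<< "$data")

echo "forking: $total_forking, builtin: $total_builtin, awk: $total_awk"
# prints: forking: 7300, builtin: 7300, awk: 7300
```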

Conclusion: From Repetition to Reliable Automation

We've journeyed through the essential looping constructs of Bash, from the fundamental for and while loops to the more specialized C-style and until variants. We've seen how to process files and command output, control loop execution with break and continue, and structure complex tasks with nested loops. Most importantly, we've highlighted the best practices—like quoting variables and using safe file iteration patterns—that separate fragile scripts from robust automation.

Mastering loops is a transformative step in your journey with the Linux command line. It elevates you from simply running commands to orchestrating complex workflows that can manage users, monitor services, deploy applications, and secure systems. Your next steps should be to wrap these looping techniques in functions for reusable code, learn to parse command-line arguments to make your scripts more flexible, and schedule them with `cron` for truly unattended automation. Whether you're managing a single server or a cloud fleet, these skills are the foundation of efficient and powerful system administration.