In the modern landscape of infrastructure management, system monitoring is not merely a task; it is the lifeline of digital operations. Whether you are managing a single Linux server or orchestrating a fleet of Linux instances across AWS and Azure, the ability to visualize performance, detect anomalies, and react to outages in real time is paramount. A robust monitoring strategy shifts the paradigm from reactive firefighting to proactive stability management.

While enterprise-grade tools like Prometheus, Grafana, or Datadog are industry standards, understanding the underlying mechanics of system monitoring is essential for any system administrator. By building custom monitoring solutions with Bash and Python, engineers gain granular control over their metrics and a deeper understanding of what the Linux kernel exposes. This article explores how to construct a comprehensive monitoring system, covering resource usage, network connectivity, and statistical anomaly detection, on distributions ranging from Ubuntu to Red Hat Enterprise Linux.

The Foundation: Native Linux Monitoring and Shell Scripting

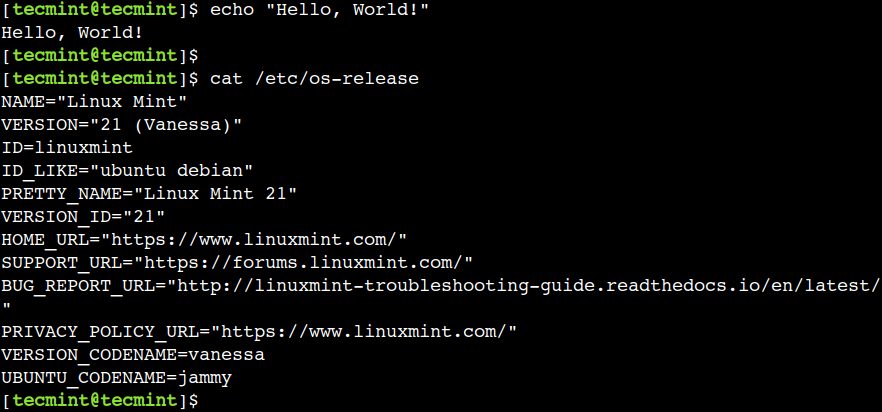

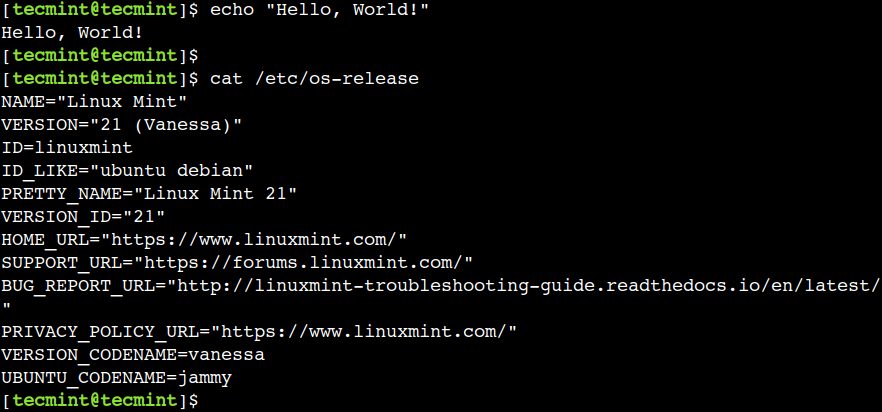

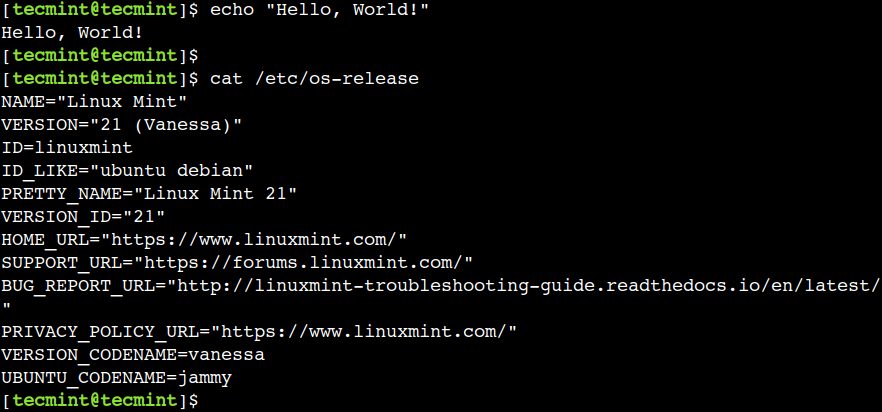

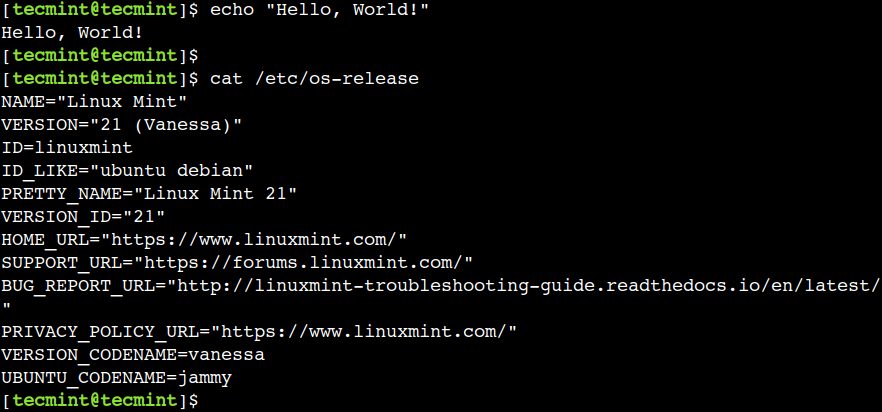

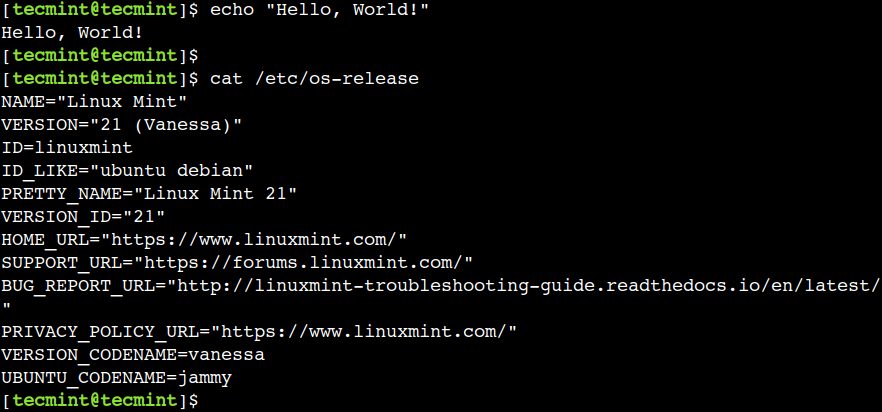

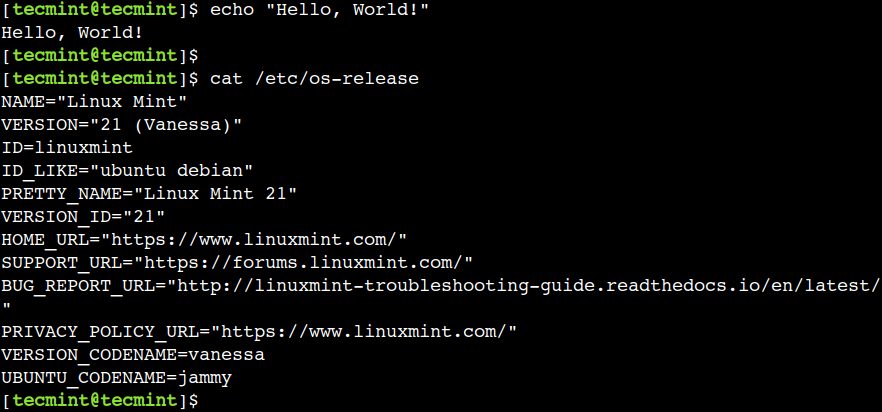

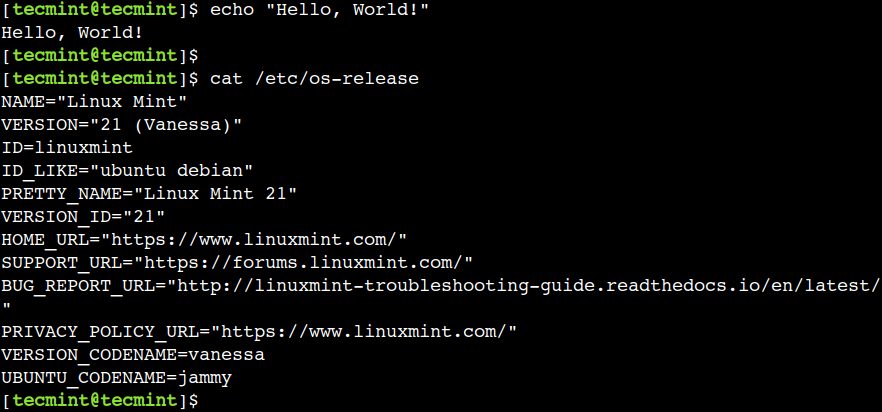

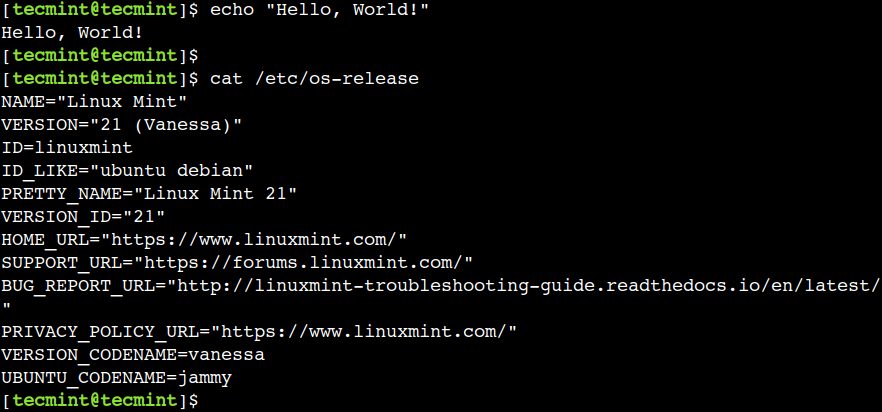

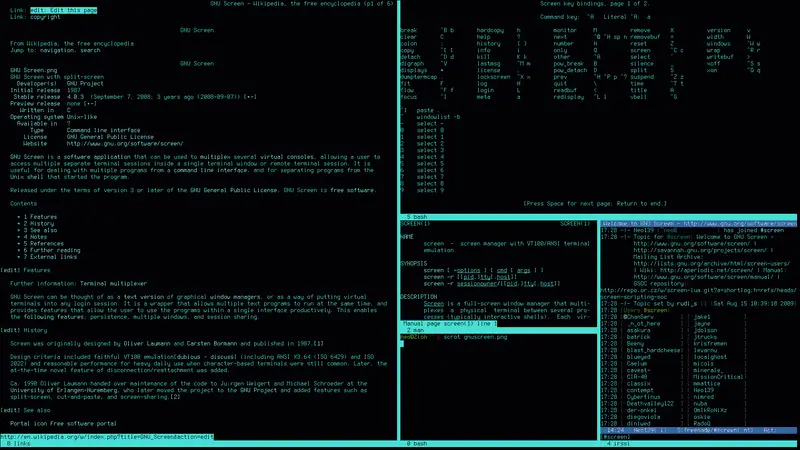

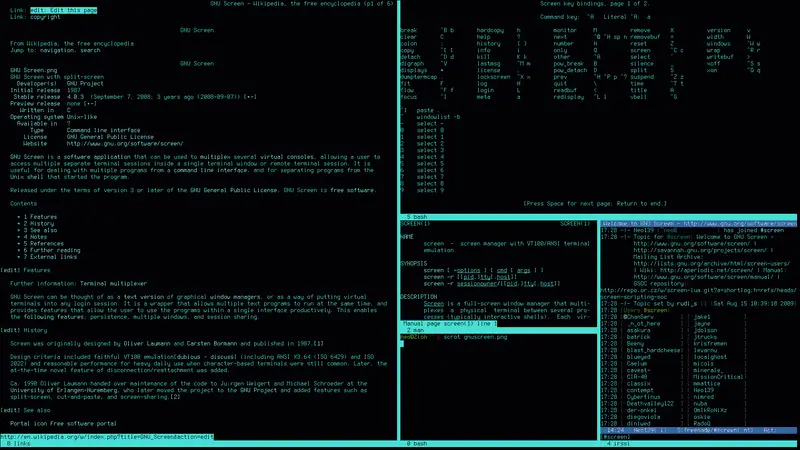

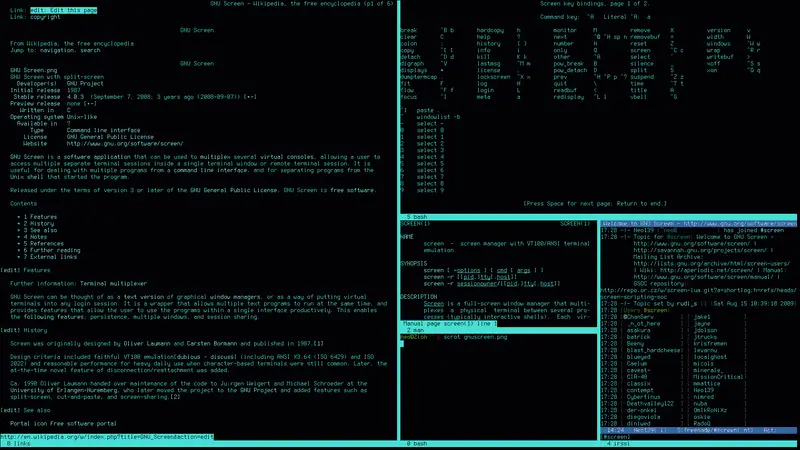

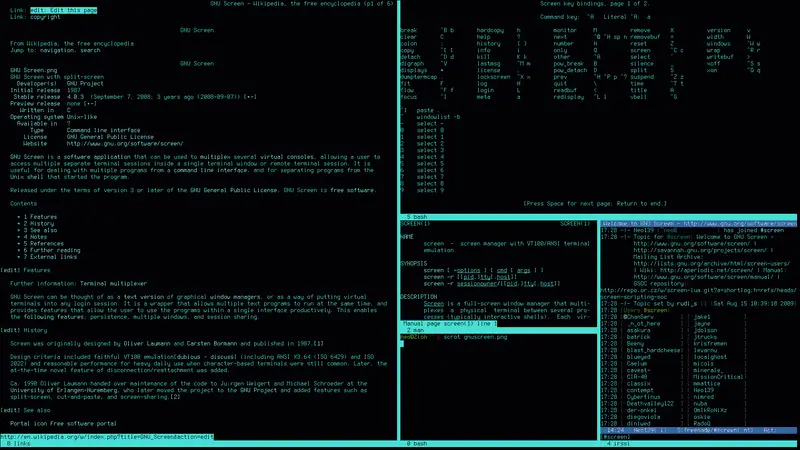

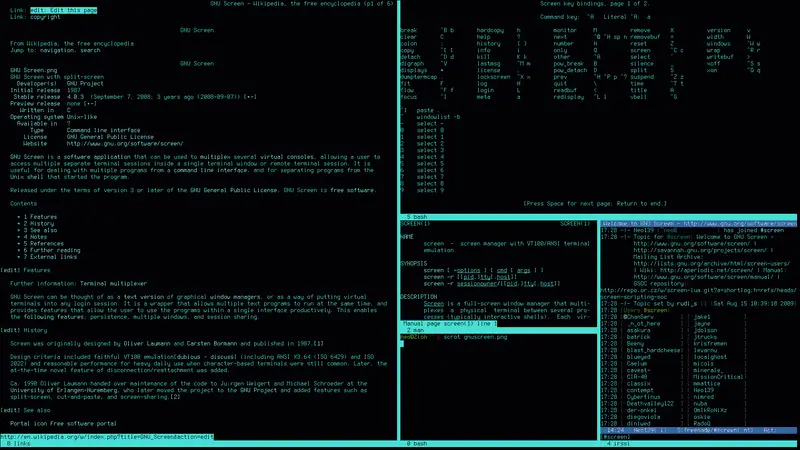

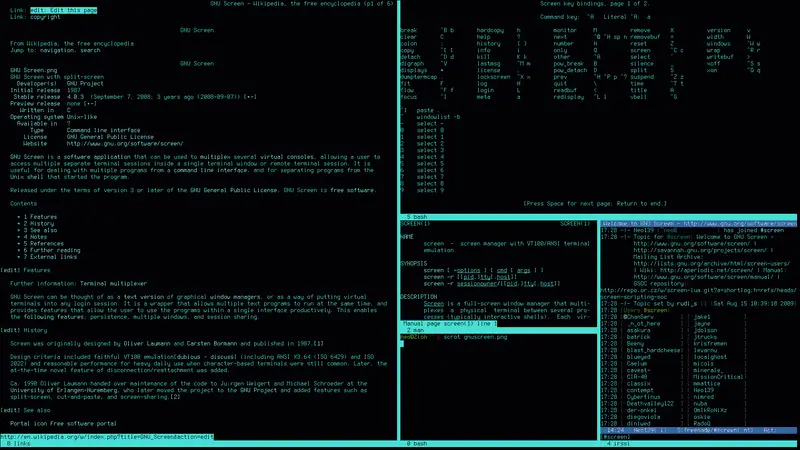

Before diving into complex programming, one must master the native utilities available in the Linux terminal. Every DevOps engineer working with Linux should be comfortable with the /proc file system, a virtual file system that serves as an interface to the kernel's internal data structures. Tools like top and its more visual cousin, htop, read files such as /proc/stat and /proc/meminfo to display CPU and memory usage.
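To make the /proc interface concrete, here is a minimal sketch that computes CPU and memory utilization directly from /proc/stat and /proc/meminfo, roughly the way top does. It assumes a kernel recent enough to expose MemAvailable (Linux 3.14 or later); the function names are hypothetical, and the parsers take plain text so they can be tried on sample data.

```python
import os
import time


def parse_cpu_times(stat_text):
    """Return the aggregate "cpu" counters from /proc/stat text as ints."""
    for line in stat_text.splitlines():
        if line.startswith("cpu "):
            return [int(field) for field in line.split()[1:]]
    raise ValueError("no aggregate 'cpu' line found")


def cpu_percent_between(before, after):
    """CPU utilization (%) between two /proc/stat samples.

    Counter order: user nice system idle iowait irq softirq steal guest guest_nice.
    idle + iowait count as idle time. guest and guest_nice are already folded
    into user and nice, so only the first eight counters are summed.
    """
    before, after = before[:8], after[:8]
    total_delta = sum(after) - sum(before)
    idle_delta = (after[3] + after[4]) - (before[3] + before[4])
    if total_delta <= 0:
        return 0.0
    return 100.0 * (total_delta - idle_delta) / total_delta


def parse_meminfo(meminfo_text):
    """Map /proc/meminfo keys to their numeric values (mostly in kB)."""
    info = {}
    for line in meminfo_text.splitlines():
        key, _, rest = line.partition(":")
        fields = rest.split()
        if fields and fields[0].isdigit():
            info[key.strip()] = int(fields[0])
    return info


def memory_used_percent(info):
    """Share of memory that is not available to new workloads."""
    return 100.0 * (info["MemTotal"] - info["MemAvailable"]) / info["MemTotal"]


if __name__ == "__main__" and os.path.exists("/proc/stat"):
    with open("/proc/stat") as f:
        first = parse_cpu_times(f.read())
    time.sleep(1)  # counters are cumulative since boot, so sample twice
    with open("/proc/stat") as f:
        second = parse_cpu_times(f.read())
    with open("/proc/meminfo") as f:
        mem = parse_meminfo(f.read())
    print(f"CPU: {cpu_percent_between(first, second):.1f}%")
    print(f"Memory used: {memory_used_percent(mem):.1f}%")
```

CPU utilization needs two samples because /proc/stat reports cumulative jiffies since boot; a single reading would only give the average over the machine's whole uptime.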

However, watching these numbers manually in a terminal or a tmux session does not scale. We need automation. Shell scripting provides the quickest route to basic monitoring. A simple Bash script can act as a watchdog for disk space, a critical metric often overlooked until a database like PostgreSQL or MySQL fails to write data.

Below is a Bash script designed to monitor disk usage and memory usage. It uses standard Linux commands and can be scheduled via cron on Debian, CentOS, or Arch Linux.

```bash
#!/bin/bash
# System Resource Monitor
# Checks for high disk usage on the root filesystem and high memory usage.

THRESHOLD_DISK=85
THRESHOLD_MEM=90
HOSTNAME=$(hostname)
STATUS=0

# Check disk usage.
# -P forces POSIX output so long device names never wrap onto a second line;
# NR==2 selects the data row for "/", and tr strips the trailing "%".
CURRENT_DISK=$(df -P / | awk 'NR==2 { print $5 }' | tr -d '%')

if [ "$CURRENT_DISK" -gt "$THRESHOLD_DISK" ]; then
    echo "CRITICAL: Disk usage on $HOSTNAME is at ${CURRENT_DISK}%"
    # In a real scenario, pipe this to mail or a logging service
    logger "SystemMonitor: High Disk Usage Detected: ${CURRENT_DISK}%"
    STATUS=1
fi

# Check memory usage: column 2 of the "Mem:" row is total, column 3 is used.
TOTAL_MEM=$(free | awk '/^Mem:/ { print $2 }')
USED_MEM=$(free | awk '/^Mem:/ { print $3 }')
MEM_PERCENT=$(( 100 * USED_MEM / TOTAL_MEM ))

if [ "$MEM_PERCENT" -gt "$THRESHOLD_MEM" ]; then
    echo "WARNING: Memory usage on $HOSTNAME is at ${MEM_PERCENT}%"
    logger "SystemMonitor: High Memory Usage Detected: ${MEM_PERCENT}%"
    STATUS=1
fi

# Only report OK when neither check fired.
if [ "$STATUS" -eq 0 ]; then
    echo "OK: System metrics within normal limits."
fi

# A non-zero exit status lets cron wrappers or other tooling detect problems.
exit "$STATUS"
```

This script reads from the Linux file system and logs alerts to the system journal via logger. While effective for local checks, scaling this approach requires more logic and better data handling, which brings us to Python scripting.
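For comparison, the disk check from the Bash script above can be written with only Python's standard library. This is a minimal sketch with hypothetical function names. It relies on shutil.disk_usage(), whose `free` field counts only the space available to unprivileged users, so dividing used space by used plus free approximates the Use% column that df prints (blocks reserved for root count toward neither).

```python
import math
import shutil

DISK_THRESHOLD = 85  # mirrors THRESHOLD_DISK in the Bash script


def disk_used_percent(path="/"):
    """Approximate df's Use% for the filesystem containing `path`."""
    usage = shutil.disk_usage(path)
    capacity = usage.used + usage.free  # excludes root-reserved blocks
    if capacity == 0:
        return 0
    # Round up, so a nearly full disk is never reported as below threshold.
    return math.ceil(100 * usage.used / capacity)


def check_disk(path="/", threshold=DISK_THRESHOLD):
    """Return a status line in the same style as the Bash script."""
    percent = disk_used_percent(path)
    if percent > threshold:
        return f"CRITICAL: Disk usage on {path} is at {percent}%"
    return f"OK: Disk usage on {path} is at {percent}%"


if __name__ == "__main__":
    print(check_disk("/"))
```

Moving the check into Python makes it easy to reuse the same function across several mount points or to feed the numeric result into further processing instead of parsing text.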

Leveling Up: Python Automation for Resource Intelligence

Python is a superpower for Linux administrators. Unlike Bash, Python offers extensive libraries for data processing, network socket manipulation, and API interactions. For performance monitoring, the psutil library is the de facto standard: it provides a cross-platform interface for retrieving information on running processes and system utilization (CPU, memory, disks, network, sensors).

In a cloud environment, you might need to monitor specific services, such as an Apache or Nginx web server. The following Python script demonstrates how to create a structured monitor that checks CPU load and memory usage and verifies that critical services are accepting connections on their ports. This approach is foundational for Python-based system administration and can easily be extended to push metrics to a dashboard.

```python
import logging
import socket
import time

import psutil

# Configure logging. Writing under /var/log usually requires root, or a
# log file created in advance and owned by the monitoring user.
logging.basicConfig(
    filename='/var/log/python_monitor.log',
    level=logging.INFO,
    format='%(asctime)s - %(levelname)s - %(message)s'
)


def check_cpu_load(threshold=80.0):
    """Monitors CPU usage over a 5-second interval."""
    cpu_usage = psutil.cpu_percent(interval=5)
    if cpu_usage > threshold:
        logging.warning(f"High CPU Load Detected: {cpu_usage}%")
        return False
    return True


def check_service_port(host, port, service_name):
    """Checks whether a TCP port accepts connections (e.g., web server, SSH)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.settimeout(3)
    try:
        # connect_ex returns 0 on success instead of raising on refusal
        result = sock.connect_ex((host, port))
        if result == 0:
            logging.info(f"Service {service_name} on port {port} is UP.")
            return True
        logging.error(f"Service {service_name} on port {port} is DOWN.")
        return False
    except Exception as e:
        logging.error(f"Error checking {service_name}: {e}")
        return False
    finally:
        sock.close()


def system_audit():
    """Performs a full system health check."""
    logging.info("Starting System Audit...")

    # Resource checks
    check_cpu_load()

    # Memory checks
    memory = psutil.virtual_memory()
    if memory.percent > 90:
        logging.critical(f"Memory Critical: {memory.percent}% used")

    # Service checks (e.g., SSH and HTTP)
    check_service_port('127.0.0.1', 22, 'SSH')
    check_service_port('127.0.0.1', 80, 'Nginx/Apache')


if __name__ == "__main__":
    try:
        while True:
            system_audit()
            # Wait for 60 seconds before the next check
            time.sleep(60)
    except KeyboardInterrupt:
        print("Monitoring stopped by user.")
```

This script introduces the concept of a long-running monitor: it loops indefinitely and, in production, would typically be managed as a systemd service rather than left running in a terminal. It probes local TCP ports to confirm that services like SSH are accepting connections, which is crucial for maintaining uptime on a Fedora or Ubuntu server. By logging to a file, you create an audit trail that can be parsed later for historical analysis.
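As an illustration of that historical analysis, the sketch below counts DOWN events per service in a log written with the format string configured in the monitor above. It assumes the default `asctime` layout (date, then time with milliseconds) and the exact message wording produced by check_service_port(); the function and pattern names are hypothetical.

```python
import re
from collections import Counter

# Matches '%(asctime)s - %(levelname)s - %(message)s', where the default
# asctime looks like "2024-01-01 10:00:00,123" (two space-separated tokens).
LINE_RE = re.compile(r"^(?P<ts>\S+ \S+) - (?P<level>[A-Z]+) - (?P<msg>.*)$")
DOWN_RE = re.compile(r"Service (?P<name>.+) on port (?P<port>\d+) is DOWN\.")


def count_outages(lines):
    """Return a Counter keyed by (service_name, port) of DOWN log entries."""
    outages = Counter()
    for line in lines:
        match = LINE_RE.match(line.rstrip("\n"))
        if not match or match.group("level") != "ERROR":
            continue
        down = DOWN_RE.match(match.group("msg"))
        if down:
            outages[(down.group("name"), int(down.group("port")))] += 1
    return outages


if __name__ == "__main__":
    import os

    log_path = "/var/log/python_monitor.log"
    if os.path.exists(log_path):
        with open(log_path) as f:
            for (name, port), count in count_outages(f).most_common():
                print(f"{name} (port {port}): {count} DOWN events")
```

Because the parser accepts any iterable of lines, the same function works on an open file, on rotated archives read line by line, or on a list of sample lines.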

Advanced Techniques: Statistical Anomaly Detection

Static thresholds (e.g., “Alert if CPU > 90%”) are often insufficient. They lead to false positives during scheduled backups or routine maintenance. A more sophisticated monitoring system employs statistical anomaly detection: it calculates a baseline (the mean) and measures how far new observations deviate from it in units of standard deviation (the Z-score). This is particularly useful in security and firewall monitoring, where sudden deviations can indicate DDoS attacks or unauthorized access attempts.
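For reference, the statistic used by the detector in this section is computed over a window of the N most recent samples: the population mean and standard deviation of the window, then the Z-score of a new observation x, which is flagged when its magnitude exceeds a threshold k (k = 3 in the code).

```latex
\mu = \frac{1}{N}\sum_{i=1}^{N} x_i
\qquad
\sigma = \sqrt{\frac{1}{N}\sum_{i=1}^{N} (x_i - \mu)^2}
\qquad
z = \frac{x - \mu}{\sigma}
\qquad
\text{anomaly} \iff |z| > k
```

As an illustrative calculation, if a latency window had mean 100 ms and standard deviation 6 ms, a 350 ms sample would give z = 250 / 6 ≈ 41.7, far above k = 3.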

If you are running Docker containers or orchestrating with Kubernetes, traffic patterns can be erratic. A Z-score computed over a sliding window lets the system learn “normal” behavior dynamically. The following example demonstrates a basic anomaly detector in Python, which could be applied to network latency or request rates.

```python
import math
from collections import deque


class AnomalyDetector:
    def __init__(self, window_size=50):
        # Store the last N data points
        self.data_window = deque(maxlen=window_size)

    def add_data_point(self, value):
        self.data_window.append(value)

    def is_anomaly(self, value, threshold=3):
        """
        Detects whether `value` is an anomaly using the Z-score.
        A threshold of 3 means 3 standard deviations from the mean.
        Always returns a (bool, z_score) tuple; z_score is None when
        no baseline can be computed yet.
        """
        if len(self.data_window) < 10:
            # Not enough data to establish a baseline
            return False, None

        mean = sum(self.data_window) / len(self.data_window)
        # Population variance of the window
        variance = sum((x - mean) ** 2 for x in self.data_window) / len(self.data_window)
        std_dev = math.sqrt(variance)

        if std_dev == 0:
            # A perfectly flat baseline has no spread to compare against
            return False, None

        z_score = (value - mean) / std_dev
        return abs(z_score) > threshold, z_score


# Example usage simulation
if __name__ == "__main__":
    import random

    detector = AnomalyDetector()
    print("Training baseline with normal traffic (approx 100ms latency)...")

    # Simulate normal network latency
    for _ in range(50):
        normal_latency = random.randint(90, 110)
        detector.add_data_point(normal_latency)

    print("Baseline established. Injecting anomaly...")

    # Simulate a network spike
    spike_value = 350
    is_anom, score = detector.is_anomaly(spike_value)

    if is_anom:
        print(f"ALERT: Anomaly Detected! Value: {spike_value}, Z-Score: {score:.2f}")
    else:
        print(f"Value {spike_value} is within normal parameters.")
```

This approach turns your monitoring from a simple check into a system capable of recognizing outliers. Whether you are monitoring iptables logs for dropped packets or analyzing disk I/O wait times, statistical methods can substantially reduce alert fatigue compared with fixed thresholds.
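One known weakness of the mean and standard deviation is that a few spikes already sitting in the window inflate σ and can mask the next one. A common robust alternative, swapped in here and not part of the detector above, is the modified Z-score built on the median and the median absolute deviation (MAD); the 0.6745 scaling constant and the 3.5 cutoff are the conventional choices for this score. The class below is a hypothetical sketch that mirrors AnomalyDetector's interface.

```python
import statistics
from collections import deque


class RobustAnomalyDetector:
    """Hypothetical median/MAD variant of the Z-score detector."""

    def __init__(self, window_size=50, min_points=10):
        self.data_window = deque(maxlen=window_size)
        self.min_points = min_points

    def add_data_point(self, value):
        self.data_window.append(value)

    def is_anomaly(self, value, threshold=3.5):
        """Return (is_anomaly, modified_z_score); score is None without a baseline."""
        if len(self.data_window) < self.min_points:
            return False, None
        window = list(self.data_window)
        median = statistics.median(window)
        mad = statistics.median([abs(x - median) for x in window])
        if mad == 0:
            return False, None
        # 0.6745 rescales MAD so the score is comparable to a Z-score
        # when the underlying data is roughly normally distributed.
        score = 0.6745 * (value - median) / mad
        return abs(score) > threshold, score


if __name__ == "__main__":
    import random

    detector = RobustAnomalyDetector()
    for _ in range(48):
        detector.add_data_point(random.randint(90, 110))
    # Two earlier spikes remain in the window; median and MAD barely move.
    detector.add_data_point(400)
    detector.add_data_point(420)
    print(detector.is_anomaly(250))
```

The trade-off is cost: the median requires sorting the window on every check, which is negligible for a 50-point window but worth keeping in mind for much larger ones.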

Best Practices and System Optimization

Security and Permissions

When implementing monitoring scripts, strictly adhere to the principle of least privilege. Do not run monitoring scripts as root unless absolutely necessary. Manage users and file permissions carefully using chmod and chown. If a script requires elevated privileges (e.g., to check SELinux status or specific LVM configurations), configure sudoers to allow only that specific command.
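A small audit can catch the most common mistake: a monitoring script that other users are able to modify, which is especially dangerous if a sudoers rule lets it run with elevated privileges. The sketch below is hypothetical and only checks the file's own owner and mode bits; it does not inspect parent directories or the sudoers configuration itself.

```python
import os
import stat


def permission_problems(path, expected_uid=0):
    """Return reasons why `path` is unsafe to run as a privileged monitor.

    expected_uid defaults to 0 (root), the usual owner for anything a
    sudoers rule allows to run with elevated privileges.
    """
    st = os.stat(path)
    problems = []
    if st.st_uid != expected_uid:
        problems.append(f"owned by uid {st.st_uid}, expected {expected_uid}")
    if st.st_mode & stat.S_IWGRP:
        problems.append("group-writable")
    if st.st_mode & stat.S_IWOTH:
        problems.append("world-writable")
    return problems


if __name__ == "__main__":
    import sys

    # Usage: python3 audit_perms.py /path/to/script [...]
    for script in sys.argv[1:]:
        issues = permission_problems(script)
        print(f"{script}: {'OK' if not issues else ', '.join(issues)}")
```

A finding such as "world-writable" would typically be fixed with `chmod o-w`, and an unexpected owner with `chown`.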

Infrastructure as Code

Avoid manually copying scripts to servers. Use Ansible or similar automation tools to deploy your monitoring agents. This ensures consistency across containerized and bare-metal environments. An Ansible playbook can ensure that dependencies like python3-psutil are installed and that your systemd service files are correctly configured.

Log Management and Rotation

Monitoring generates data. Without proper management, your logs can consume the very disk space you are monitoring. Use logrotate, available on most Linux distributions, to compress and archive old logs. Ensure your scripts write to standard locations like /var/log/ and integrate with system logging daemons.
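If a Python monitor manages its own log files instead of relying on logrotate, the standard library's logging.handlers.RotatingFileHandler can cap log size in-process. A minimal sketch, with a hypothetical function name and size limits chosen only for illustration:

```python
import logging
import logging.handlers

LOG_FORMAT = "%(asctime)s - %(levelname)s - %(message)s"


def build_monitor_logger(path, max_bytes=5 * 1024 * 1024, backups=5):
    """Return a logger that rotates `path` once it would exceed max_bytes.

    At most `backups` old files are kept (path.1 is the newest); the oldest
    is deleted on each rollover.
    """
    logger = logging.getLogger("system_monitor")
    logger.setLevel(logging.INFO)
    logger.propagate = False  # avoid duplicate lines if the root logger is configured
    if not logger.handlers:  # calling this twice must not add a second handler
        handler = logging.handlers.RotatingFileHandler(
            path, maxBytes=max_bytes, backupCount=backups
        )
        handler.setFormatter(logging.Formatter(LOG_FORMAT))
        logger.addHandler(handler)
    return logger


if __name__ == "__main__":
    log = build_monitor_logger("monitor.log", max_bytes=1024, backups=3)
    log.info("Monitor started")
```

If you rely on logrotate instead, do not let both tools rotate the same file. Either configure logrotate with `copytruncate`, or log through logging.handlers.WatchedFileHandler, which reopens the file when it notices the file has been moved or replaced.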

Conclusion

Building a comprehensive system monitoring solution is a journey that spans from basic Linux commands to advanced Python programming. By leveraging the Linux terminal, understanding the metrics the kernel exposes, and applying statistical analysis, administrators can build resilient systems that withstand the demands of modern computing.

Whether you are a beginner working through your first Linux tutorial or an expert refining low-level C programming skills for optimization, the principles remain the same: visibility is key. Start with simple Bash scripts, evolve to Python automation, and eventually integrate with larger platforms. The goal is to create a self-healing, self-reporting infrastructure that lets you sleep soundly, knowing your systems are watching themselves.