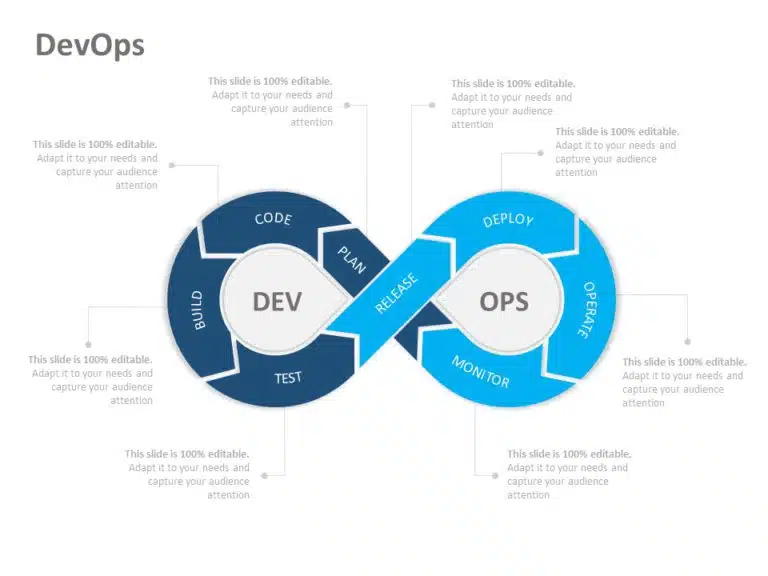

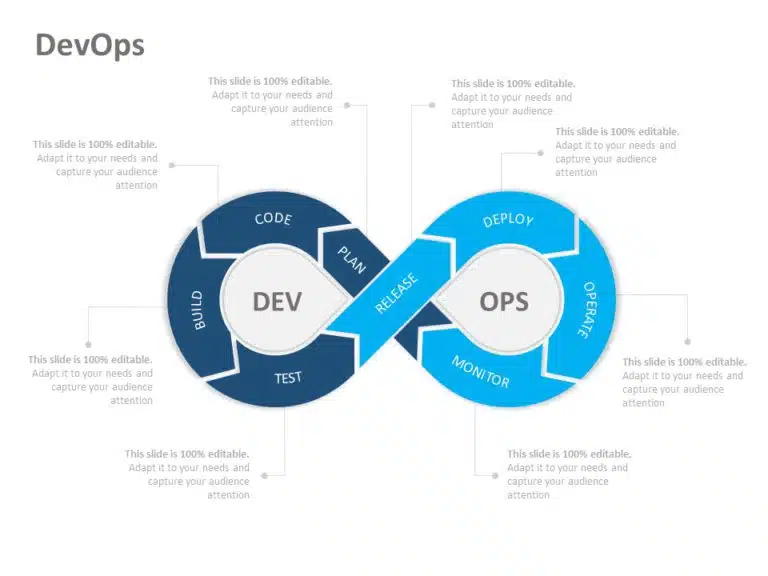

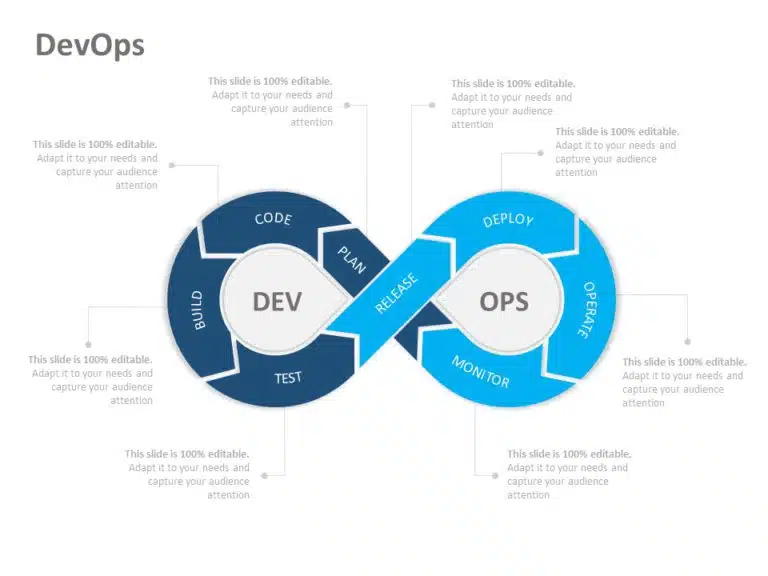

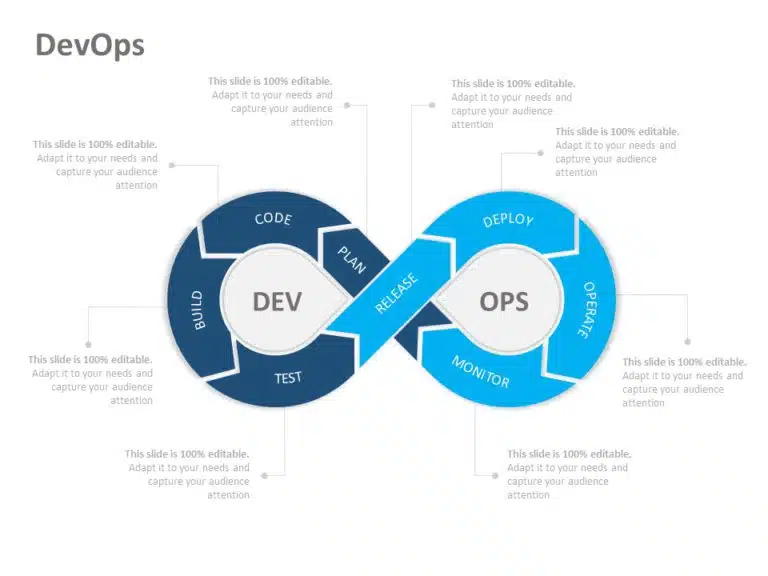

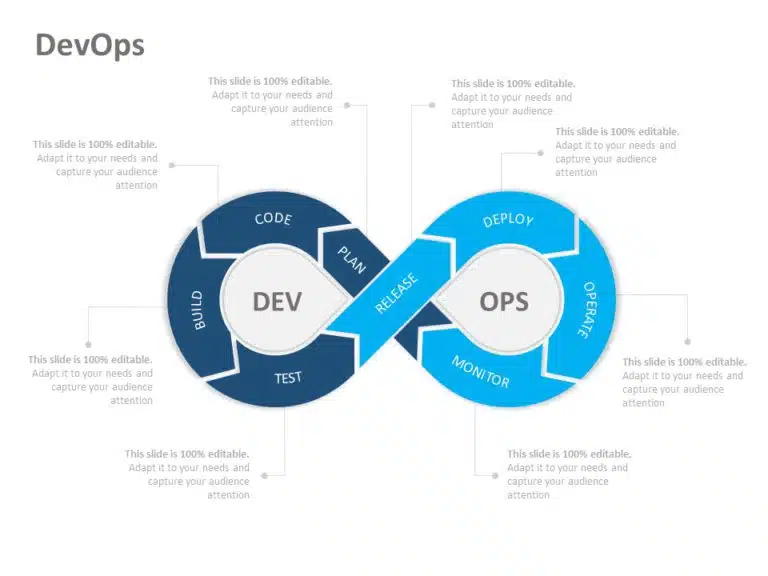

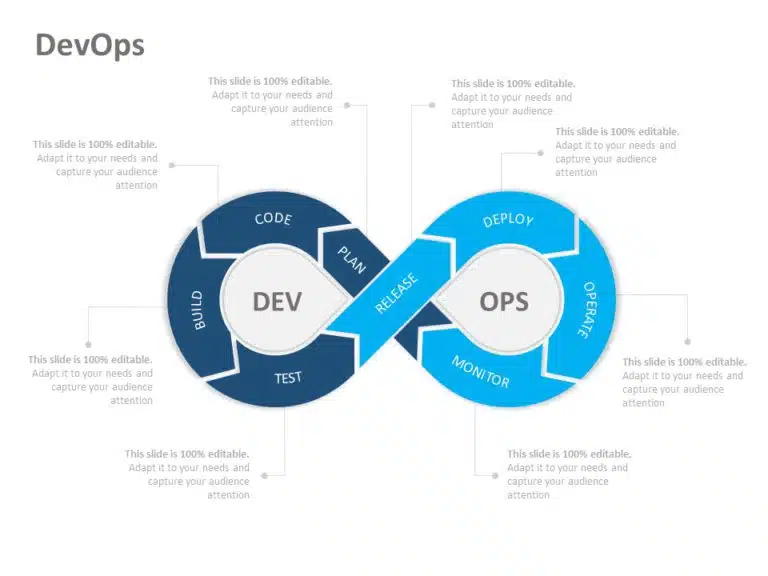

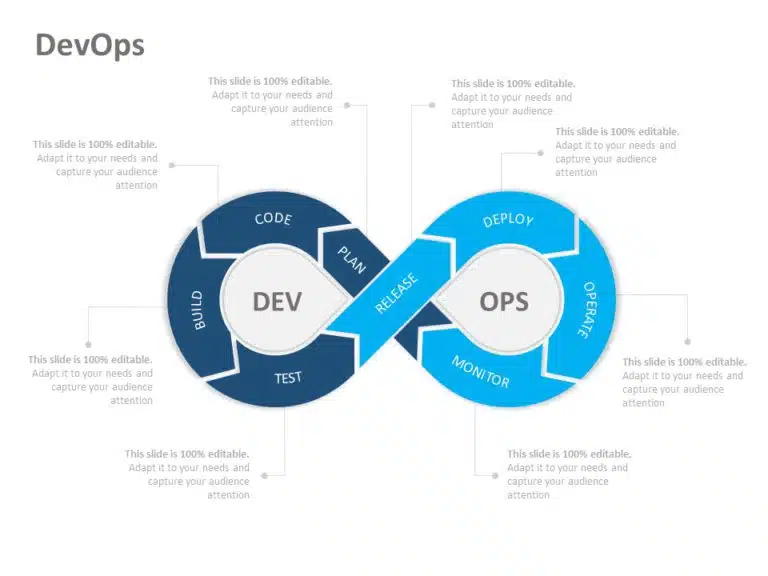

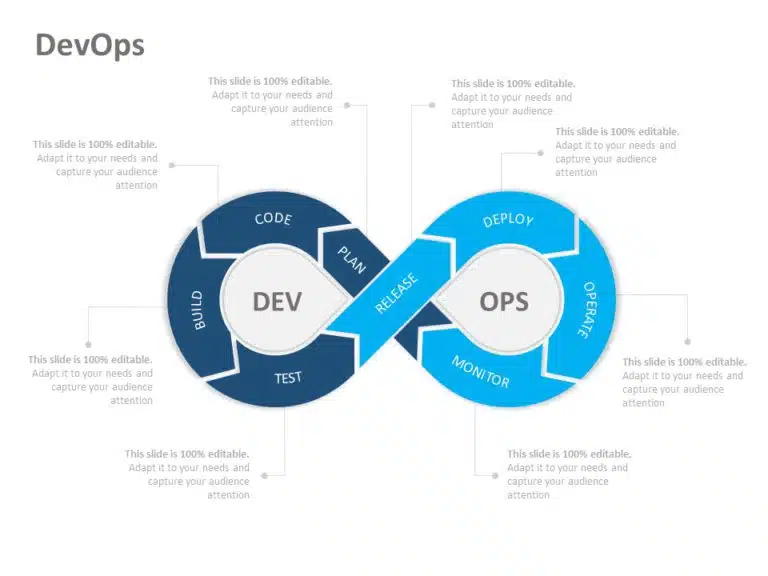

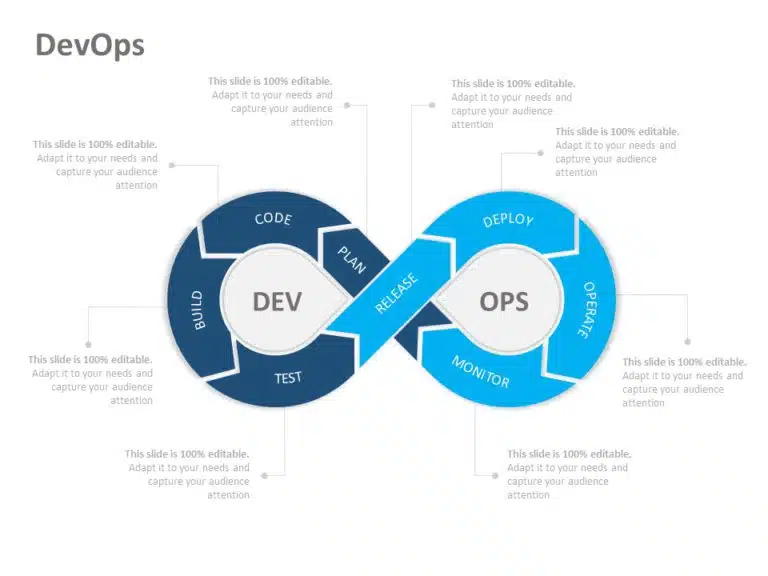

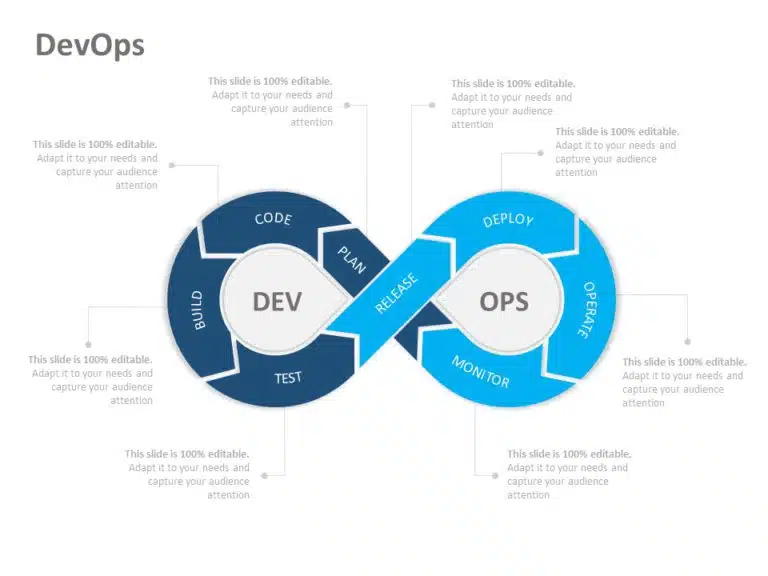

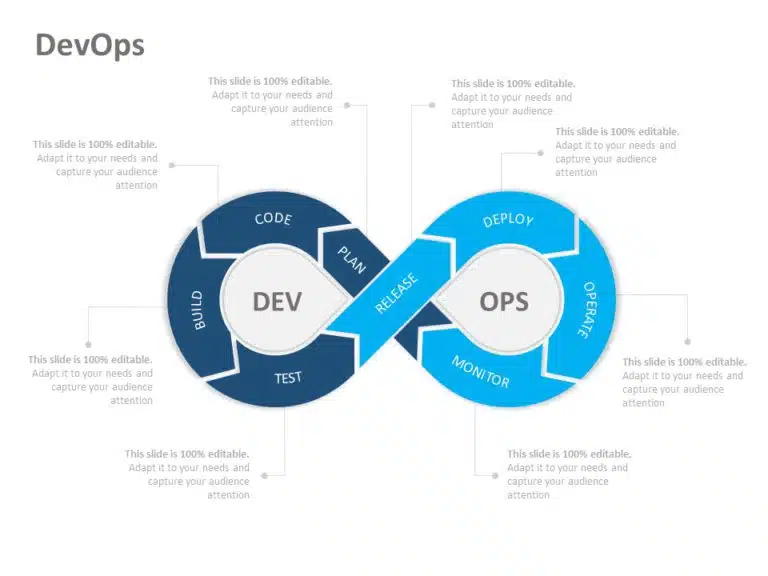

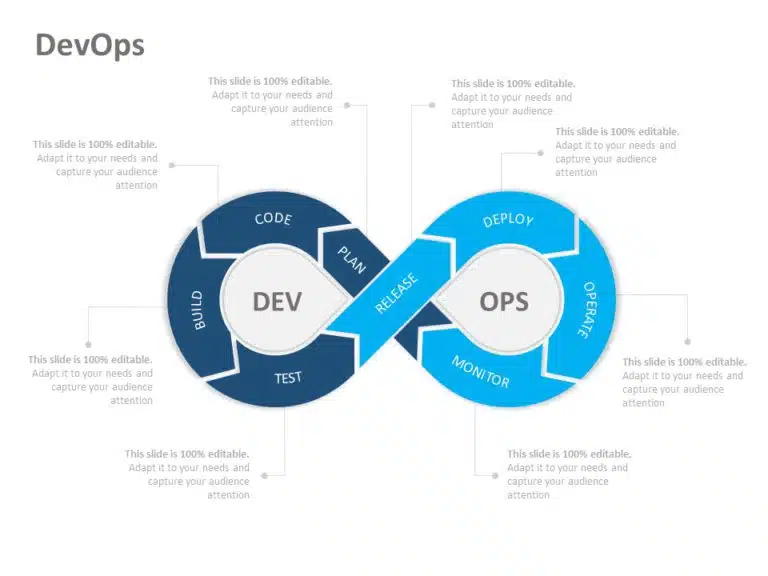

In the modern landscape of software development and IT operations, DevOps has emerged as a critical methodology for delivering applications and services at high velocity. At the heart of this movement lies automation, and for countless engineers, Python has become the language of choice. Its simple syntax, vast ecosystem of libraries, and cross-platform compatibility make it a versatile “Swiss Army knife” for everything from simple system administration scripts to complex cloud orchestration. This is where Python truly shines in DevOps: it bridges the gap between development and operations with clean, maintainable, and powerful code.

Whether you are a seasoned system administrator managing Linux servers or a developer looking to streamline your deployment pipelines, Python offers the tools to automate repetitive tasks, manage infrastructure as code, and build robust CI/CD workflows. This article is a practical guide to using Python for DevOps, covering foundational scripting, infrastructure automation, cloud management, and best practices. We will explore real-world examples that show how Python can improve your operational efficiency and help you build more resilient systems on any Linux distribution, from Ubuntu to enterprise environments running Red Hat Enterprise Linux.

The Foundation: Python Scripting for System Administration

For decades, Bash and other shell scripts have been the cornerstone of Linux administration. While powerful for simple command-line operations, they can become cumbersome and error-prone when dealing with complex logic, data structures, and API interactions. This is where Python steps in as a stronger alternative for more sophisticated system administration tasks.

Beyond Bash Scripting

Python offers several advantages over traditional shell scripts. Its structured error handling with `try...except` blocks makes scripts more resilient. The standard library parses JSON natively, and mature packages such as PyYAML handle YAML; both formats are ubiquitous in modern configuration files and API responses. Furthermore, Python’s extensive standard library and the third-party packages on PyPI provide ready-made solutions for almost any problem, from querying a PostgreSQL database to making HTTP requests to a web service. This lets a DevOps engineer focus on the logic rather than reinventing the wheel.
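
Error handling and JSON parsing combine naturally in Python. The following minimal sketch uses only the standard library. It loads a JSON configuration file and falls back to defaults if the file is missing, unreadable, or malformed. The `load_config` function and the `DEFAULTS` values are hypothetical.

```python
import json
from pathlib import Path

# Hypothetical defaults used when the config file cannot be used.
DEFAULTS = {"retries": 3, "timeout_seconds": 10}


def load_config(path):
    """Load a JSON object from `path`, merged over DEFAULTS.

    Falls back to a copy of DEFAULTS if the file is missing, unreadable,
    not valid JSON, or does not contain a JSON object.
    """
    try:
        data = json.loads(Path(path).read_text(encoding="utf-8"))
    except FileNotFoundError:
        print(f"{path} not found; using defaults.")
        return dict(DEFAULTS)
    except OSError as e:
        # Other read problems, such as missing permissions
        print(f"Could not read {path} ({e}); using defaults.")
        return dict(DEFAULTS)
    except ValueError as e:
        # json.JSONDecodeError and UnicodeDecodeError are both ValueError subclasses
        print(f"{path} is not valid JSON ({e}); using defaults.")
        return dict(DEFAULTS)

    if not isinstance(data, dict):
        print(f"{path} does not contain a JSON object; using defaults.")
        return dict(DEFAULTS)

    # Keys present in the file override the defaults.
    return {**DEFAULTS, **data}
```

For example, a file containing `{"retries": 5}` yields `{"retries": 5, "timeout_seconds": 10}`. A missing file yields a fresh copy of the defaults.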

Practical Example: Automating Log File Analysis

A common task for any system administrator is parsing the logs of a web server such as Apache or Nginx to identify issues. Imagine you need to quickly find all “404 Not Found” errors in an access log to identify broken links. While you could combine grep and awk in the terminal, a Python script offers a more readable and extensible solution.

This script demonstrates how to parse a log file, use regular expressions to extract relevant information, and count the occurrences of 404 errors, providing a clean summary.
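
The matching step can also be pulled out into a pure function that accepts any iterable of lines. That makes it easy to test against sample data before pointing it at a real log. This is a minimal sketch that assumes the default Nginx `combined` log format. The function name `count_404_paths` is hypothetical, and the pattern accepts any uppercase request method (GET, HEAD, POST, and so on).

```python
import re
from collections import Counter

# Assumes the Nginx "combined" format: ... "$request" $status $body_bytes_sent ...
NOT_FOUND = re.compile(r'"[A-Z]+ (\S+) HTTP/[^"]*" 404 ')


def count_404_paths(lines):
    """Return a Counter of request paths that received a 404 response."""
    counts = Counter()
    for line in lines:
        match = NOT_FOUND.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


sample = [
    '127.0.0.1 - - [10/Oct/2023:13:55:36 +0000] "GET /missing HTTP/1.1" 404 153 "-" "curl/7.81.0"',
    '127.0.0.1 - - [10/Oct/2023:13:55:37 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.81.0"',
    '127.0.0.1 - - [10/Oct/2023:13:55:38 +0000] "HEAD /missing HTTP/1.1" 404 0 "-" "curl/7.81.0"',
]
print(count_404_paths(sample).most_common())  # [('/missing', 2)]
```

Because the function takes lines rather than a path, the same code works on an open file, on `sys.stdin`, or on a list in a unit test.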

```python
import os
import re
from collections import Counter


def analyze_nginx_logs(log_file_path):
    """
    Parses an Nginx access log to find and count 404 errors.

    Args:
        log_file_path (str): The full path to the Nginx access log.
    """
    if not os.path.exists(log_file_path):
        print(f"Error: Log file not found at {log_file_path}")
        return

    # Regex to capture the requested path of any request that returned 404
    # Example log line: 127.0.0.1 - - [10/Oct/2023:13:55:36 +0000] "GET /non-existent-page HTTP/1.1" 404 153 "-" "curl/7.81.0"
    log_pattern = re.compile(r'"[A-Z]+ (\S+) HTTP/[^"]*" 404 ')

    not_found_paths = []
    print(f"Analyzing log file: {log_file_path}...")

    try:
        # errors="replace" keeps malformed bytes in the log from aborting the scan
        with open(log_file_path, "r", encoding="utf-8", errors="replace") as f:
            for line in f:
                match = log_pattern.search(line)
                if match:
                    not_found_paths.append(match.group(1))
    except OSError as e:
        print(f"Error reading file: {e}")
        return

    if not not_found_paths:
        print("No 404 errors found. Good job!")
        return

    print("\n--- 404 Error Report ---")
    path_counts = Counter(not_found_paths)
    # Print the ten most common 404 paths
    for path, count in path_counts.most_common(10):
        print(f"Count: {count:<5} | Path: {path}")


if __name__ == "__main__":
    # On a typical Linux system, the log might be here. Adjust as needed.
    # Reading it may require elevated privileges.
    nginx_log = "/var/log/nginx/access.log"
    analyze_nginx_logs(nginx_log)
```

Infrastructure Automation and Configuration Management

Python automation extends far beyond local scripts. It plays a pivotal role in managing fleets of servers and keeping their configurations consistent. While tools like Ansible (which is itself written in Python) dominate this space, Python libraries offer a more programmatic and flexible way to handle remote execution and deployments.

Managing Remote Servers with Fabric

Fabric is a high-level Python library designed to streamline the use of SSH for application deployment and systems administration tasks. It provides a clean, Pythonic API for executing shell commands on remote servers, transferring files, and handling prompts. This makes it an excellent tool for creating repeatable deployment scripts and automating routine maintenance across your infrastructure, whether it runs on CentOS, Debian, or another distribution.

Practical Example: Deploying an Application Update

Let’s create a simple `fabfile.py` to automate the deployment of a web application. This script defines a series of steps: pulling the latest code from a Git repository, installing Python dependencies with `pip`, restarting the application service with `systemd`, and verifying that the service is active again. The entire workflow can be triggered with a single command from your local terminal.
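
Before wiring the steps into Fabric, it can help to express them as plain data. A dry run can then print exactly what would be executed. The sketch below is one way to do that and is not part of Fabric itself. Its constants mirror the hypothetical paths used in the fabfile that follows, and `deploy_plan` is an illustrative name. `shlex.quote` guards against paths that contain spaces or shell metacharacters.

```python
import shlex

# Hypothetical values mirroring the fabfile; adjust for your server.
APP_DIR = "/srv/my-web-app"
PIP_PATH = "/srv/venvs/my-web-app/bin/pip"
SERVICE_NAME = "my-web-app.service"


def deploy_plan(app_dir=APP_DIR, pip_path=PIP_PATH, service=SERVICE_NAME):
    """Return the deployment steps as (needs_sudo, command) pairs."""
    cd = f"cd {shlex.quote(app_dir)}"
    return [
        (False, f"{cd} && git pull origin main"),
        (False, f"{cd} && {shlex.quote(pip_path)} install -r requirements.txt"),
        (True, f"systemctl restart {shlex.quote(service)}"),
        (True, f"systemctl is-active --quiet {shlex.quote(service)}"),
    ]


if __name__ == "__main__":
    # Dry run: show what would be executed without connecting anywhere.
    for needs_sudo, command in deploy_plan():
        print(("sudo " if needs_sudo else "") + command)
```

Each command string could then be passed to Fabric's `conn.run()`, or to `conn.sudo()` when `needs_sudo` is true.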

```python
from fabric import Connection, task

# Define the server you want to connect to.
# In a real-world scenario, you might load this from a config file.
HOST = "user@your-server-ip"


@task
def deploy(c):
    """
    Connects to the server and deploys the latest version of the app.
    """
    # `c` is the context Fabric passes to every task; this example opens
    # its own Connection to HOST instead of using it.
    print("Connecting to the server...")
    with Connection(HOST) as conn:
        print("--- Starting Deployment ---")

        # Run the following commands from the application directory
        app_dir = "/srv/my-web-app"
        with conn.cd(app_dir):
            print("1. Pulling latest changes from git...")
            conn.run("git pull origin main")

            print("2. Installing/updating Python dependencies...")
            # Assuming a virtual environment is used
            pip_path = "/srv/venvs/my-web-app/bin/pip"
            requirements_path = "requirements.txt"
            conn.run(f"{pip_path} install -r {requirements_path}")

        # 3. Restart the application service to apply changes
        print("3. Restarting the application service...")
        service_name = "my-web-app.service"
        conn.sudo(f"systemctl restart {service_name}")

        # 4. Confirm the service is active. warn=True returns the result instead
        # of raising on a non-zero exit code, so result.ok can be checked.
        print("4. Verifying service status...")
        result = conn.sudo(f"systemctl is-active --quiet {service_name}", warn=True)
        if result.ok:
            print("--- Deployment Successful! ---")
        else:
            print("--- Deployment Failed! Check service status on the server. ---")

# To run this from your terminal:
# > fab deploy
```

Mastering Cloud and Container Orchestration with Python

The rise of cloud computing and containerization has opened new frontiers for automation. Python is a first-class citizen in this ecosystem, with powerful SDKs for interacting with cloud providers and container engines. This enables DevOps engineers to practice Infrastructure as Code (IaC) with the full power of a general-purpose programming language.

Automating the Cloud with Boto3

For anyone working with AWS, `boto3` is the essential AWS SDK for Python. It allows you to create, configure, and manage AWS services directly from your Python scripts. You can automate everything from launching EC2 instances and configuring security groups to managing S3 buckets for backups, which makes it a core tool for DevOps work on AWS.

Practical Example: S3 Bucket Health Check

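This script uses `boto3` to perform a security health check on your S3 buckets. It lists all buckets and then checks each one for two common misconfigurations. The first is a bucket-level Public Access Block that is missing or does not block all public access. The second is versioning that is not enabled (versioning is crucial for data protection and recovery). Note that the script inspects only bucket-level settings; an account-level Public Access Block can also restrict public access. A proactive monitoring script like this can be scheduled to run periodically, for example with cron, to enforce security best practices.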
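
Separating the decision logic from the API calls makes the checks testable without AWS credentials. The following sketch uses a hypothetical `evaluate_bucket` function. It takes dictionaries shaped like the `get_public_access_block` and `get_bucket_versioning` responses used in the script below, and it returns a list of findings.

```python
# All four flags must be True for a bucket's public access to be fully blocked.
PAB_FLAGS = ("BlockPublicAcls", "IgnorePublicAcls", "BlockPublicPolicy", "RestrictPublicBuckets")


def evaluate_bucket(pab_config, versioning_response):
    """Return a list of findings for one bucket.

    pab_config: the 'PublicAccessBlockConfiguration' dict, or None if the
        bucket has no public access block configuration.
    versioning_response: the dict returned by get_bucket_versioning().
    """
    findings = []
    if pab_config is None:
        findings.append("WARNING: no public access block configuration")
    else:
        missing = [flag for flag in PAB_FLAGS if not pab_config.get(flag, False)]
        if missing:
            findings.append("WARNING: public access not fully blocked: " + ", ".join(missing))

    # 'Status' is absent if versioning was never enabled; it may also be 'Suspended'.
    status = versioning_response.get("Status", "NotEnabled")
    if status != "Enabled":
        findings.append(f"INFO: versioning is {status}")
    return findings
```

An empty list means both checks passed. The API-calling script only has to fetch the two responses and print whatever this function returns.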

```python
import boto3
from botocore.exceptions import BotoCoreError, ClientError


def check_s3_bucket_security():
    """
    Connects to AWS using boto3 to check S3 buckets for public access
    and versioning status.
    """
    try:
        s3_client = boto3.client("s3")
        response = s3_client.list_buckets()
    except (BotoCoreError, ClientError) as e:
        # BotoCoreError covers local problems such as missing credentials
        # (NoCredentialsError); ClientError covers errors returned by AWS.
        print(f"Failed to connect to AWS. Check your credentials. Error: {e}")
        return

    print("--- S3 Bucket Security Report ---")
    for bucket in response["Buckets"]:
        bucket_name = bucket["Name"]
        print(f"\nChecking Bucket: {bucket_name}")

        # 1. Check the bucket-level public access block
        try:
            pab_status = s3_client.get_public_access_block(Bucket=bucket_name)
            config = pab_status["PublicAccessBlockConfiguration"]
            is_public = not (config["BlockPublicAcls"] and config["BlockPublicPolicy"] and
                             config["IgnorePublicAcls"] and config["RestrictPublicBuckets"])
            if is_public:
                print(" [WARNING] Public access is not fully blocked.")
            else:
                print(" [OK] Public access is blocked.")
        except ClientError as e:
            if e.response["Error"]["Code"] == "NoSuchPublicAccessBlockConfiguration":
                print(" [WARNING] No Public Access Block configuration found. Bucket could be public.")
            else:
                print(f" [ERROR] Could not get public access block status: {e}")

        # 2. Check for versioning ('Status' is absent if it was never enabled)
        try:
            versioning_status = s3_client.get_bucket_versioning(Bucket=bucket_name)
            status = versioning_status.get("Status", "NotEnabled")
            if status == "Enabled":
                print(" [OK] Versioning is enabled.")
            else:
                print(f" [INFO] Versioning is not enabled (status: {status}).")
        except ClientError as e:
            print(f" [ERROR] Could not get versioning status: {e}")


if __name__ == "__main__":
    check_s3_bucket_security()
```

Interacting with Docker and Kubernetes APIs

Python also excels at managing containerized workloads. The `docker` library provides a Pythonic interface to the Docker Engine API, allowing you to automate the lifecycle of Docker containers. Similarly, the `kubernetes` client library lets you interact with your Kubernetes cluster to deploy applications, manage resources, and monitor the health of your services, making it an indispensable tool for managing containerized environments.

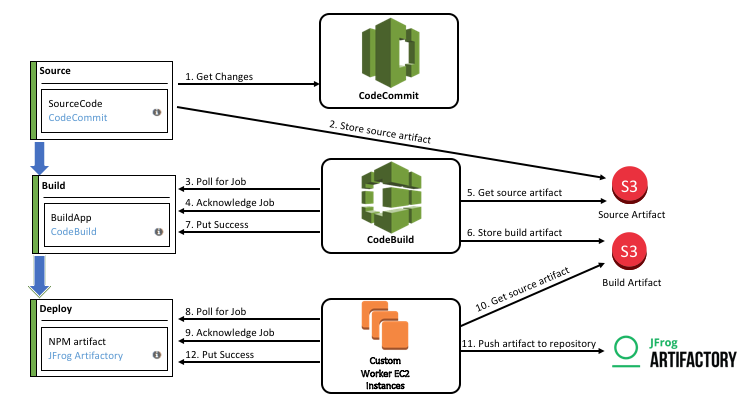

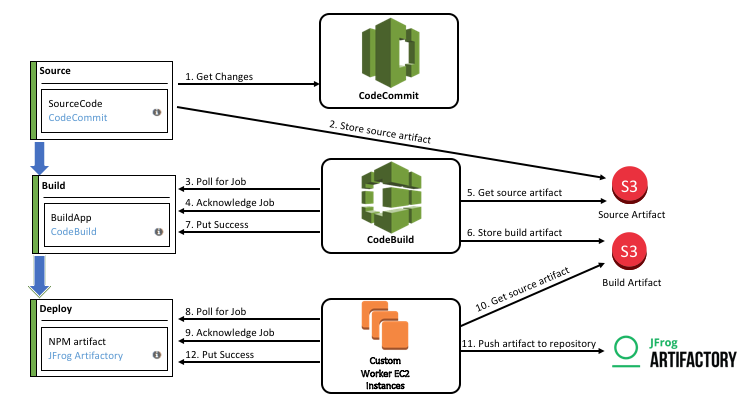

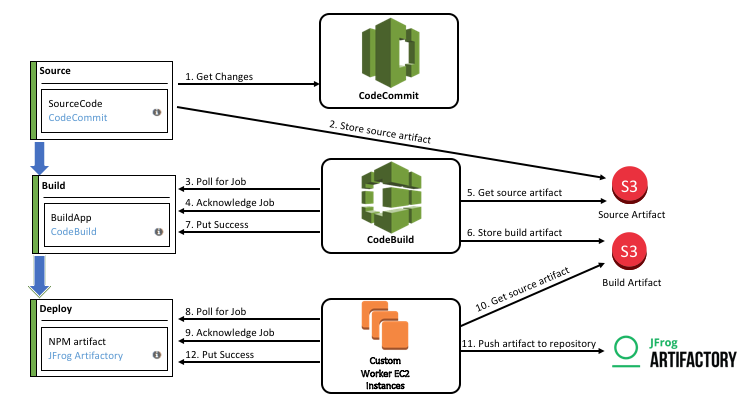

Integrating Python into CI/CD and Best Practices

Python scripts serve as the glue in modern CI/CD pipelines. They can be executed as steps in tools like Jenkins, GitLab CI, or GitHub Actions to perform a wide range of tasks, from running tests and building artifacts to triggering deployments and sending notifications.
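
CI systems such as Jenkins, GitLab CI, and GitHub Actions treat a step as failed when its process exits with a non-zero status. A Python step script therefore mainly needs to propagate failures through its exit code. Below is a minimal sketch that assumes each step is a command given as an argument list. `run_steps` is a hypothetical helper, and returning 127 for a missing executable mimics the shell convention.

```python
import subprocess
import sys


def run_steps(steps):
    """Run (name, argv) steps in order and return a process exit code.

    Stops at the first failing step and returns its exit code; returns 0 if
    every step succeeds, or 127 if a command cannot be found.
    """
    for name, argv in steps:
        print(f"==> {name}: {' '.join(argv)}", flush=True)
        try:
            result = subprocess.run(argv)
        except FileNotFoundError:
            print(f"Step '{name}': command not found: {argv[0]}", file=sys.stderr)
            return 127
        if result.returncode != 0:
            print(f"Step '{name}' failed with exit code {result.returncode}", file=sys.stderr)
            return result.returncode
    print("All steps passed.")
    return 0
```

A pipeline job could then call `sys.exit(run_steps(steps))` with a list such as `[("unit tests", [sys.executable, "-m", "pytest", "-q"])]`, so the CI runner sees the failing step's exit code.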

Practical Example: A Simple Health Check API

DevOps engineers often build small tools and services to support their infrastructure. Here is a minimal web application using the Flask framework that exposes a `/health` endpoint. A load balancer or a Kubernetes readiness probe could call this endpoint to verify that the application is running and can connect to its database. A readiness probe fits better than a liveness probe here: a failed liveness probe makes Kubernetes restart the container, and a restart would not fix a database outage. This is a good example of using Python to build operational tooling.
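
On the consuming side, a deployment script or pipeline step can poll such an endpoint until it reports healthy. This standard-library sketch uses a hypothetical `wait_for_healthy` function and treats only HTTP 200 as healthy. Note that `urllib` raises `HTTPError` for a 503 response, so that case is handled as an exception.

```python
import time
import urllib.error
import urllib.request


def wait_for_healthy(url, timeout=60.0, interval=2.0):
    """Poll `url` until it answers HTTP 200 or `timeout` seconds have passed.

    Returns True if the endpoint reported healthy in time, otherwise False.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=5) as resp:
                if resp.status == 200:
                    return True
                print(f"Unexpected status {resp.status}")
        except urllib.error.HTTPError as e:
            # Non-2xx responses such as 503 are raised as HTTPError.
            body = e.read().decode("utf-8", "replace")
            print(f"Unhealthy (HTTP {e.code}): {body}")
        except OSError as e:
            # URLError (e.g. connection refused) and socket timeouts are OSError subclasses.
            print(f"Not reachable yet: {e}")
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

After a deploy, a step such as `wait_for_healthy("http://localhost:8080/health")` (a hypothetical URL) can gate the rest of the pipeline.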

```python
import os

import psycopg2  # Example for PostgreSQL
from flask import Flask, jsonify

app = Flask(__name__)


def check_database_connection():
    """Checks if a connection to the database can be established."""
    # Best practice: use environment variables for credentials
    conn_str = os.getenv("DATABASE_URL")
    if not conn_str:
        return False, "DATABASE_URL is not set."
    try:
        # connect_timeout (seconds) keeps a slow database from hanging the probe
        conn = psycopg2.connect(conn_str, connect_timeout=3)
        conn.close()
        return True, "Database connection successful."
    except psycopg2.Error as e:
        # In a real app, log the error properly instead of returning it verbatim
        return False, str(e)


@app.route("/health")
def health_check():
    """
    Provides a health check endpoint for monitoring.
    """
    db_ok, db_msg = check_database_connection()
    status_code = 200 if db_ok else 503  # Service Unavailable
    response = {
        "status": "ok" if db_ok else "error",
        "services": {
            "database": {
                "status": "healthy" if db_ok else "unhealthy",
                "message": db_msg,
            }
        },
    }
    return jsonify(response), status_code


if __name__ == "__main__":
    # Run on port 8080, accessible from any IP. This is Flask's development
    # server; use a WSGI server such as Gunicorn in production.
    app.run(host="0.0.0.0", port=8080)
```

Best Practices for Python DevOps Scripts

To ensure your automation scripts are reliable and maintainable, follow these best practices:

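- Dependency Management: Declare dependencies in a `requirements.txt` or `pyproject.toml` file and pin exact versions (for example, with `pip freeze` or a lock file) so that every run installs the same packages. Isolate project environments using tools like `venv`.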

- Configuration and Secrets: Never hardcode sensitive information like API keys or passwords. Use environment variables or a dedicated secrets management tool (e.g., HashiCorp Vault, AWS Secrets Manager).

- Robust Error Handling: Wrap external calls (APIs, file I/O, shell commands) in `try…except` blocks to handle failures gracefully.

- Logging: Implement structured logging to provide clear, machine-readable output. This is invaluable for debugging failed pipeline runs or automation scripts.

- Modularity and Testing: Break down large scripts into smaller, reusable functions. Write unit tests for your core logic to ensure it behaves as expected.
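
For the logging recommendation, the standard library's `logging` module can emit one JSON object per line through a small custom `Formatter`. This is a minimal sketch. The field names and the `get_logger` helper are illustrative choices, not a standard schema.

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    def format(self, record):
        payload = {
            "time": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        if record.exc_info:
            payload["exception"] = self.formatException(record.exc_info)
        return json.dumps(payload)


def get_logger(name, stream=None):
    """Return a logger that writes JSON lines to `stream` (stderr by default)."""
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger(name)
    logger.handlers = [handler]  # replace, so repeated calls don't duplicate output
    logger.setLevel(logging.INFO)
    logger.propagate = False  # keep records out of the root logger's handlers
    return logger
```

With this in place, `get_logger("deploy").info("Restarted %s", "my-web-app.service")` writes a line with `level`, `logger`, and `message` fields. Log collectors in a CI system can parse that line without custom regular expressions.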

Conclusion

Python has firmly established itself as an indispensable language in the DevOps world. Its simplicity, power, and extensive ecosystem empower engineers to automate virtually every aspect of the software development lifecycle. From simple scripts that replace cumbersome shell commands to sophisticated orchestration of cloud-native infrastructure on AWS or Azure, Python provides a unified and elegant solution.

By mastering Python for DevOps, you can build more reliable systems, accelerate delivery pipelines, and reduce manual toil. The key is to start small: identify a repetitive task in your daily workflow and automate it with a Python script. As you grow more comfortable, you can tackle more complex challenges like infrastructure management and CI/CD integration. Embracing Python is not just about writing code; it’s about adopting a mindset of continuous improvement and automation that is the very essence of DevOps.