In the world of software development, we often focus on the high-level applications that users interact with daily: websites, mobile apps, and data science models. But beneath this surface lies a foundational layer of software that makes it all possible: system programming. This is the discipline of writing code that manages and controls computer hardware and system resources, either as part of the operating system itself or directly on top of its interfaces, bridging application software and the machine. Moving a project from a simple prototype to a production-ready system requires a deep understanding of performance, reliability, and resource management, all core tenets of system programming. This is where the real engineering happens, transforming abstract ideas into robust, efficient, and scalable software that can handle the rigors of the real world.

This guide takes a deep dive into system programming within the Linux ecosystem. We’ll explore core concepts, from managing processes and memory to inter-process communication and file I/O, with practical code examples in C, Python, and Bash. The same principles apply everywhere from simple scripts to the Linux kernel itself. Whether you’re an aspiring Linux administrator, a DevOps engineer, or a developer looking to write more performant code, mastering these concepts is essential for building software that truly commands the machine.

The Building Blocks: Processes, Threads, and Memory Management

At the heart of any modern operating system is the concept of a process. A process is an instance of a running program, complete with its own memory space, file descriptors, and execution state. System programming gives you the power to create, manage, and terminate these processes, forming the basis for concurrency and multitasking. In Linux, this is primarily handled through a set of powerful system calls.

Understanding Processes in Linux

The most fundamental system call for process creation is fork(). When a program calls fork(), the operating system creates a nearly identical duplicate of the calling process, known as the child process; the original is the parent process. The child gets its own copy of the parent’s memory (shared copy-on-write by the kernel until either process modifies a page), along with copies of its open file descriptors and its environment. The key difference is the return value of fork(): it returns 0 in the child and the child’s process ID (PID) in the parent, or -1 in the parent if no child could be created. This lets you write code that executes differently depending on whether it’s running in the parent or the child. The other key piece is the exec family (the execve() system call and library wrappers such as execlp()), which replaces the current process image with a new program. The combination of fork() and exec is the standard way to launch new programs on Unix-like systems.

Here is a classic example in C demonstrating fork() and exec. You can compile it with GCC (gcc fork_example.c -o fork_example) on any Linux distribution, such as Ubuntu, Debian, or Fedora.

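```c
#include <stdio.h>
#include <stdlib.h>
#include <unistd.h>
#include <sys/types.h>
#include <sys/wait.h>

int main(void) {
    pid_t pid = fork();

    if (pid < 0) {
        // fork() failed: no child process was created
        perror("fork");
        return 1;
    } else if (pid == 0) {
        // This is the child process
        printf("I am the child process! My PID is %d\n", (int)getpid());
        // Flush stdio buffers: exec discards anything still buffered in this process
        fflush(stdout);

        // Replace the child's process image with 'ls -l' (execlp searches PATH)
        execlp("ls", "ls", "-l", (char *)NULL);

        // execlp only returns if an error occurred
        perror("execlp");
        exit(1);
    } else {
        // This is the parent process; fork() returned the child's PID
        printf("I am the parent process! My PID is %d, and my child is %d\n",
               (int)getpid(), (int)pid);

        // Wait for the child process to complete and collect its status
        int status;
        if (waitpid(pid, &status, 0) == -1) {
            perror("waitpid");
            return 1;
        }
        if (WIFEXITED(status)) {
            printf("Parent: child exited with status %d\n", WEXITSTATUS(status));
        } else {
            printf("Parent: child terminated abnormally\n");
        }
    }

    return 0;
}
```

Memory Management Essentials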

In system-level languages like C, you are responsible for managing memory manually. When you need a block of memory on the heap, you request it with malloc(); when you’re done, you must explicitly release it with free(). Failing to do so causes memory leaks, where your program consumes more and more memory over time, eventually leading to performance degradation or a crash. Other common pitfalls include dangling pointers (pointers that still reference memory that has already been freed) and buffer overflows (writes past the end of an allocated block). Tools like Valgrind are indispensable for development on Linux, as they can detect memory leaks and invalid memory accesses, helping you write more robust code.
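Python manages its own memory, but its standard ctypes module can call the C library’s malloc() and free() directly, which makes the allocate/use/release discipline described above easy to see. The sketch below assumes a glibc-based Linux system (where ctypes.util.find_library("c") resolves to libc.so.6); copy_through_heap is a hypothetical helper name. The try/finally block plays the role that careful cleanup paths play in C: the buffer is released no matter how the function exits.

```python
import ctypes
import ctypes.util

# Load the C standard library (assumed: libc.so.6 on glibc-based Linux).
libc = ctypes.CDLL(ctypes.util.find_library("c"))

# Declare the C signatures so pointers are passed and returned at full width.
libc.malloc.argtypes = [ctypes.c_size_t]
libc.malloc.restype = ctypes.c_void_p
libc.free.argtypes = [ctypes.c_void_p]
libc.free.restype = None


def copy_through_heap(data: bytes) -> bytes:
    """Hypothetical helper: copy data into a malloc()'d block and back out."""
    size = max(len(data), 1)          # malloc(0) may legally return NULL
    buf = libc.malloc(size)
    if not buf:                       # NULL comes back as None: allocation failed
        raise MemoryError("malloc failed")
    try:
        ctypes.memmove(buf, data, len(data))     # write into the heap block
        return ctypes.string_at(buf, len(data))  # read it back as new bytes
    finally:
        # Python's garbage collector does not track malloc()'d memory;
        # skipping this free() would leak the block, exactly as in C.
        libc.free(buf)


print(copy_through_heap(b"hello"))  # b'hello'
```

Deleting the libc.free(buf) line turns this into a textbook leak: every call would strand one heap block that nothing in the program can reach or release.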

Enabling Communication: IPC and System Signals

In many real-world applications, isolated processes are not enough. You need them to communicate and coordinate with each other. This is where Inter-Process Communication (IPC) comes in. Additionally, the operating system needs a way to notify processes of important events, which it does through signals.

The Art of Inter-Process Communication (IPC)

IPC mechanisms allow different processes to share data and synchronize their actions. Some common IPC methods in Linux include:

- Pipes: A simple, unidirectional communication channel. One process writes to one end of the pipe, and another reads from the other.

- Sockets: A more versatile mechanism that allows communication between processes on the same machine (Unix domain sockets) or across a network (TCP/IP sockets). This is the foundation of network programming on Linux.

- Shared Memory: Typically the fastest IPC method, because two or more processes map the same block of memory and exchange data without copying it through the kernel. This requires careful synchronization to avoid race conditions.
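To make the shared-memory idea concrete, here is a minimal Python sketch using an anonymous mapping from the standard mmap module. On Unix, mmap.mmap(-1, size) creates an anonymous MAP_SHARED region by default, so a child created afterwards with os.fork() writes into the very same pages the parent reads. The function name is hypothetical, and the only synchronization here is the parent waiting for the child to exit; processes that access shared memory concurrently need a proper lock or semaphore.

```python
import mmap
import os
import struct


def value_from_child(value: int) -> int:
    """Hypothetical helper: a child writes an int into shared memory, the parent reads it."""
    # Anonymous mapping, MAP_SHARED by default on Unix: 8 bytes for one 64-bit int
    shm = mmap.mmap(-1, 8)

    pid = os.fork()
    if pid == 0:
        # Child: write into the shared pages, then exit without running parent code
        status = 1
        try:
            shm[:8] = struct.pack("q", value)
            status = 0
        finally:
            os._exit(status)

    try:
        # Parent: waiting for the child to exit is the only synchronization used here
        _, wait_status = os.waitpid(pid, 0)
        if not (os.WIFEXITED(wait_status) and os.WEXITSTATUS(wait_status) == 0):
            raise RuntimeError("child failed to write to shared memory")
        return struct.unpack("q", shm[:8])[0]
    finally:
        shm.close()


print(value_from_child(42))  # 42
```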

Here’s a C code example demonstrating a simple pipe between a parent and child process. The parent writes a message to the pipe, and the child reads it.

```c
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <unistd.h>
#include <sys/types.h>
#include <sys/wait.h>   // needed for wait()

#define MSG_SIZE 256

int main(void) {
    char in_buf[MSG_SIZE];
    const char *message = "Hello from the parent!";
    int fd[2]; // File descriptors for the pipe: fd[0] is for reading, fd[1] is for writing

    if (pipe(fd) == -1) {
        perror("pipe");
        return 1;
    }

    pid_t pid = fork();
    if (pid < 0) {
        perror("fork");
        return 1;
    }

    if (pid == 0) {
        // Child process: reads from the pipe
        close(fd[1]); // Close the unused write end so read() can see EOF

        ssize_t n = read(fd[0], in_buf, MSG_SIZE - 1);
        if (n < 0) {
            perror("read");
            exit(1);
        }
        in_buf[n] = '\0'; // Guarantee termination even if the message was cut short

        printf("Child received: %s\n", in_buf);
        close(fd[0]); // Close the read end
    } else {
        // Parent process: writes to the pipe
        close(fd[0]); // Close the unused read end

        if (write(fd[1], message, strlen(message) + 1) == -1) {
            perror("write");
        }
        close(fd[1]); // Closing the write end signals EOF to the reader

        wait(NULL); // Wait for child to finish
    }

    return 0;
}
```

Handling Signals: The OS’s Notification System

Signals are software interrupts sent to a process to notify it of an event. For example, when you press Ctrl+C in a terminal, the foreground process receives SIGINT (the interrupt signal). By default, this terminates the process. However, a well-behaved program can “catch” this signal with a custom signal handler, allowing it to perform cleanup tasks like closing files or database connections before exiting gracefully. This is crucial for daemons running on a Linux server, which are usually asked to stop with SIGTERM rather than SIGINT; servers such as Apache and Nginx rely on signal handling to shut down or reload their configuration in a controlled way.
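Whatever the signal, a widely used pattern is to keep the handler tiny: it only records that a shutdown was requested, and the main loop performs the actual cleanup at a safe point. In C this is typically a volatile sig_atomic_t flag; the Python sketch below shows the same idea for SIGTERM, the signal that kill, systemctl stop, and docker stop send by default. The function names are hypothetical, and the process signals itself with os.kill() so the example can run unattended. Python only allows installing signal handlers from the main thread.

```python
import os
import signal

stop_requested = False  # set by the handler, polled by the main loop


def request_stop(signum, frame):
    """Handler: only record the request; cleanup happens in the main loop."""
    global stop_requested
    stop_requested = True


def run_worker(max_iterations: int = 1_000) -> int:
    """Hypothetical work loop that stops cleanly on SIGTERM; returns iterations done."""
    global stop_requested
    stop_requested = False
    previous = signal.signal(signal.SIGTERM, request_stop)  # main thread only
    try:
        done = 0
        while not stop_requested and done < max_iterations:
            done += 1  # stand-in for one unit of real work
            if done == 3:
                # Simulate an external `kill <pid>` by signalling ourselves
                os.kill(os.getpid(), signal.SIGTERM)
        # Safe point: close files, flush buffers, release locks here
        return done
    finally:
        signal.signal(signal.SIGTERM, previous)  # restore the old disposition


print(f"Stopped after {run_worker()} iterations")
```

Because the handler does no real work, it cannot be interrupted halfway through a cleanup routine or leave shared state half-updated; all of that happens in ordinary code once the loop notices the flag.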

This Python example shows how to handle the SIGINT signal for a graceful shutdown.

```python
import signal
import sys
import time


def graceful_shutdown(signum, frame):
    """Signal handler for SIGINT."""
    print(f"\nCaught signal {signum}! Performing graceful shutdown...")
    # Add cleanup code here (e.g., close database connections, save state)
    print("Cleanup complete. Exiting.")
    sys.exit(0)


# Register the signal handler for SIGINT (Ctrl+C)
signal.signal(signal.SIGINT, graceful_shutdown)

print("Program running... Press Ctrl+C to exit gracefully.")

# Main loop of the program
try:
    while True:
        print("Working...")
        time.sleep(2)
except KeyboardInterrupt:
    # Not reached while graceful_shutdown handles SIGINT (Ctrl+C no longer
    # raises KeyboardInterrupt); kept as a fallback if the handler is removed.
    pass
```

Interacting with the World: File I/O and the File System

One of the most elegant and powerful concepts in Linux and Unix-like systems is the “everything is a file” philosophy. Not just regular files and directories, but also devices, sockets, and pipes are accessed through file descriptors; many of them also appear as entries in the file system (device nodes under /dev, for example), and they can be manipulated with largely the same set of system calls for file I/O.
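As a small illustration, the sketch below uses exactly the same open/read/close calls (through Python’s os module, which wraps them thinly) on a character device and on a file the kernel generates on the fly under /proc. It assumes a Linux system where /dev/urandom and /proc are available; read_prefix is a hypothetical helper name.

```python
import os


def read_prefix(path: str, n: int = 16) -> bytes:
    """Hypothetical helper: open any path, read up to n bytes, close it."""
    fd = os.open(path, os.O_RDONLY)   # open(2)
    try:
        return os.read(fd, n)         # read(2): the same call for every kind of file
    finally:
        os.close(fd)                  # close(2)


# A character device: the kernel's random number generator
print(read_prefix("/dev/urandom", 8).hex())
# A pseudo-file whose contents the kernel generates on each read
print(read_prefix("/proc/self/status", 32).decode())
```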

The “Everything is a File” Philosophy

Every open file in a process is associated with a non-negative integer called a file descriptor. By convention, every process starts with three open file descriptors: 0 for standard input (stdin), 1 for standard output (stdout), and 2 for standard error (stderr). The core system calls for I/O are open(), read(), write(), and close(). Understanding these lets you perform powerful operations, such as redirecting the output of one program into the input of another, which is how shell pipelines and redirections work.
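A shell builds a pipeline such as `ls / | wc -l` from exactly these pieces: pipe() to create a channel, fork() to create each process, dup2() to move the pipe ends onto file descriptors 0 and 1, and exec to start each program. The Python sketch below wires this up by hand with the os module; run_pipeline is a hypothetical name, it assumes a Unix system where both commands are on PATH, and error handling is deliberately minimal.

```python
import os


def run_pipeline(cmd1: list, cmd2: list) -> bytes:
    """Hypothetical helper: run the equivalent of `cmd1 | cmd2`, return cmd2's stdout."""
    link_r, link_w = os.pipe()  # carries cmd1's stdout to cmd2's stdin
    out_r, out_w = os.pipe()    # carries cmd2's stdout back to this process

    def spawn(argv: list, stdin_fd: int, stdout_fd: int) -> int:
        pid = os.fork()
        if pid == 0:  # child
            try:
                os.dup2(stdin_fd, 0)   # fd 0 (stdin) now refers to stdin_fd
                os.dup2(stdout_fd, 1)  # fd 1 (stdout) now refers to stdout_fd
                # Close the original pipe descriptors; only fds 0 and 1 are needed
                for fd in (link_r, link_w, out_r, out_w):
                    os.close(fd)
                os.execvp(argv[0], argv)  # replaces this process image on success
            finally:
                os._exit(127)  # only reached if dup2/close/exec failed
        return pid

    pids = [spawn(cmd1, 0, link_w),       # cmd1 keeps our stdin, writes into the link
            spawn(cmd2, link_r, out_w)]   # cmd2 reads the link, writes back to us

    # Close the parent's copies; otherwise the readers never see end-of-file
    for fd in (link_r, link_w, out_w):
        os.close(fd)

    chunks = []
    while chunk := os.read(out_r, 4096):  # read until EOF (empty bytes)
        chunks.append(chunk)
    os.close(out_r)

    for pid in pids:
        os.waitpid(pid, 0)  # reap both children so they don't linger as zombies
    return b"".join(chunks)


print(run_pipeline(["ls", "/"], ["wc", "-l"]).decode().strip())
```

Closing unused pipe ends is the step that is easiest to get wrong: if any process keeps a write end open, wc never sees end-of-file and the whole pipeline hangs.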

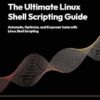

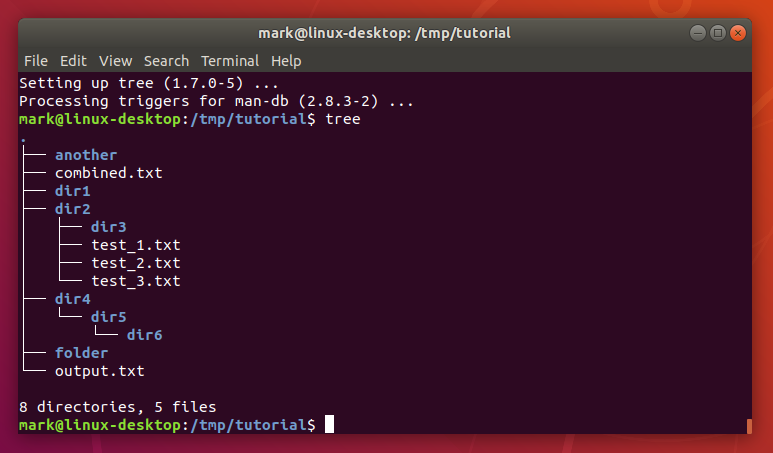

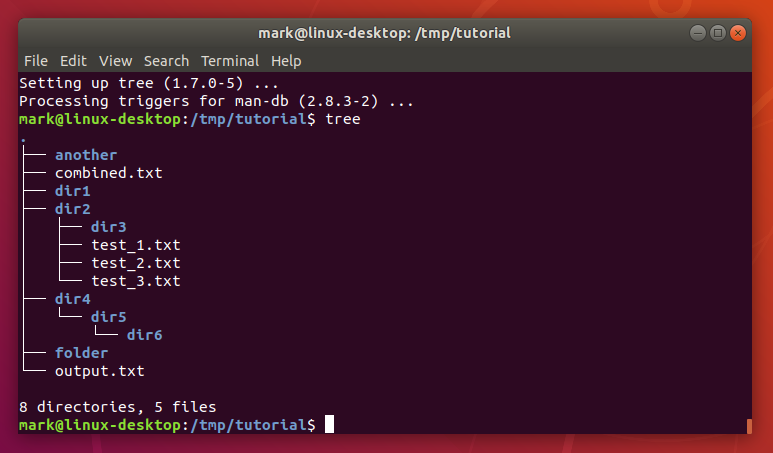

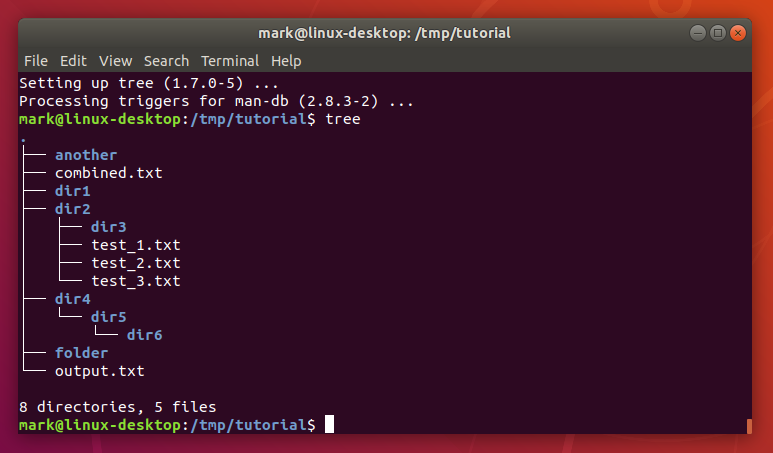

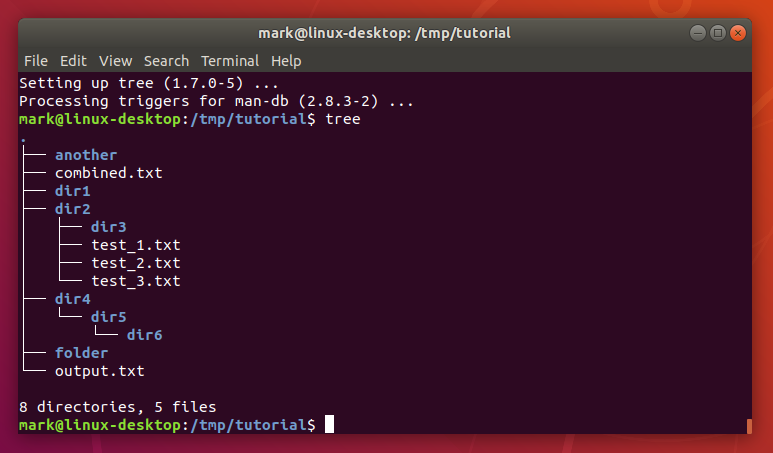

Practical File System Operations

Beyond reading and writing data, system programming often involves interacting with file metadata: information like file size, ownership, permissions, and modification times. The stat() family of system calls (stat(), fstat(), and lstat()) provides access to this information. This is extremely useful for system administration tasks, such as monitoring disk usage or enforcing security policies.
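Python exposes stat() as os.stat(), which returns the same fields as C’s struct stat, and the standard stat module decodes the mode bits. A minimal sketch, with describe as a hypothetical helper name:

```python
import os
import stat
import time


def describe(path: str) -> dict:
    """Hypothetical helper: summarize a file's metadata via the stat() system call."""
    st = os.stat(path)  # follows symlinks; os.lstat() would describe the link itself
    return {
        "size_bytes": st.st_size,
        "permissions": stat.filemode(st.st_mode),  # e.g. '-rw-r--r--'
        "is_directory": stat.S_ISDIR(st.st_mode),
        "owner_uid": st.st_uid,
        "group_gid": st.st_gid,
        "modified": time.ctime(st.st_mtime),
    }


print(describe("/etc/passwd"))
```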

This Python script uses the os module (a thin, Pythonic wrapper around many system calls) to recursively scan a directory and report the total size and number of files, a common task in day-to-day system administration.

```python
import os
import sys


def analyze_directory(path):
    """
    Recursively walks a directory to calculate total size and file count.
    """
    if not os.path.isdir(path):
        print(f"Error: '{path}' is not a valid directory.")
        return

    total_size = 0
    total_files = 0
    print(f"Analyzing directory: {path}")

    for dirpath, dirnames, filenames in os.walk(path):
        for f in filenames:
            fp = os.path.join(dirpath, f)
            # Skip symlinks: getsize() follows them, so a link would count its
            # target's size (possibly outside the tree), and broken links raise errors
            if not os.path.islink(fp):
                try:
                    total_size += os.path.getsize(fp)
                    total_files += 1
                except OSError as e:
                    print(f"Could not access {fp}: {e}")

    # Convert size to a more readable format
    size_mb = total_size / (1024 * 1024)
    print("-" * 30)
    print("Analysis Complete:")
    print(f"Total Files: {total_files}")
    print(f"Total Size: {size_mb:.2f} MB")
    print("-" * 30)


if __name__ == "__main__":
    if len(sys.argv) != 2:
        print(f"Usage: python {sys.argv[0]} <directory_path>")
        sys.exit(1)

    directory_to_scan = sys.argv[1]
    analyze_directory(directory_to_scan)
```

System Programming for the Modern Stack: DevOps and Cloud

While the core principles of system programming are decades old, they are more relevant than ever in the age of cloud computing, containers, and DevOps. The tools and platforms that power modern infrastructure are built directly on these low-level concepts.

Scripting for System Administration and Automation

Much of modern system administration is about automation. Bash and Python scripts are the go-to tools for automating tasks like setting up a web server, configuring a firewall with iptables, managing user accounts, or running backups. A deep understanding of processes, file I/O, and signals allows you to write more robust and efficient automation scripts. For more complex configurations, tools like Ansible build on these fundamentals to manage entire fleets of servers, whether on-premises or in the cloud on AWS or Azure.

Here’s a simple Bash example that monitors disk usage and could be used as part of a larger system monitoring setup.

```bash
#!/bin/bash
# A simple script to monitor disk usage.

# Set the threshold in percent (e.g., 90%)
THRESHOLD=90

# The filesystem to check (e.g., /)
FILESYSTEM="/"

# Get the current usage percentage. `df -P` prints one line per filesystem in a
# portable format; NR==2 skips the header line, and gsub strips the trailing '%'.
CURRENT_USAGE=$(df -P "$FILESYSTEM" | awk 'NR==2 { gsub("%", "", $5); print $5 }')

echo "Current usage of ${FILESYSTEM} is ${CURRENT_USAGE}%"

if [ "$CURRENT_USAGE" -gt "$THRESHOLD" ]; then
    # Send an alert (e.g., email, Slack notification)
    echo "ALERT: Disk usage on ${FILESYSTEM} has exceeded ${THRESHOLD}%!"
    # mail -s 'Disk Space Alert' admin@example.com << EOF
    # The disk usage on server $(hostname) is at ${CURRENT_USAGE}%.
    # EOF
else
    echo "Disk usage is within acceptable limits."
fi
```

Containers and Virtualization

The container revolution, led by Docker and orchestrated by Kubernetes, is built entirely on system programming concepts. Containers are not magic; they are regular Linux processes isolated from each other using kernel features called namespaces (which isolate PIDs, network stacks, mount points, and more) and cgroups (which limit resource usage such as CPU and memory). Understanding how these work at a low level is invaluable for debugging complex issues in containerized environments and for tuning the performance of applications such as a PostgreSQL database running in a container.
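Because namespaces and cgroups are kernel features, you can inspect them from any process: each namespace a process belongs to is exposed as a symbolic link under /proc/<pid>/ns, and its cgroup membership is listed in /proc/<pid>/cgroup. The sketch below reads both for the current process. It assumes Linux with /proc mounted, and the helper names are hypothetical. Two processes share a namespace exactly when the corresponding links show the same identifier, so running this on the host and inside a typical container should show different values for entries such as pid.

```python
import os


def namespace_ids(pid: str = "self") -> dict:
    """Hypothetical helper: map namespace type -> identifier, e.g. 'pid' -> 'pid:[4026531836]'."""
    ns_dir = f"/proc/{pid}/ns"
    return {name: os.readlink(os.path.join(ns_dir, name))
            for name in sorted(os.listdir(ns_dir))}


def cgroup_lines(pid: str = "self") -> list:
    """Hypothetical helper: the cgroup(s) the process belongs to, one entry per line."""
    with open(f"/proc/{pid}/cgroup") as f:
        return [line.strip() for line in f if line.strip()]


for kind, ident in namespace_ids().items():
    print(f"{kind:>18}  {ident}")
print(cgroup_lines())
```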

Performance Monitoring and Tooling

When an application is slow, how do you find the bottleneck? Tools like top, htop, and strace are essential for performance monitoring. htop gives you a real-time view of processes, while strace lets you trace the system calls a process is making. An understanding of system programming allows you to interpret the output of these tools effectively. If strace shows a process issuing a flood of small read() calls, or spending a long time blocked in each one on a slow disk, you have likely found your I/O bottleneck. This level of insight is what separates a good developer from a great one.
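To see why the number of read() calls matters, the sketch below reads the same file with raw os.read() calls at two buffer sizes and counts the calls. It is a hypothetical micro-example, not a benchmark; on Linux you can confirm the counts independently with `strace -c -e trace=read python3 yourscript.py`, which prints a per-syscall summary. For a regular file, each read() normally returns the full amount requested until the end of the file, so the 16-byte buffer needs thousands of calls where the 64 KiB buffer needs two (one for the data, one to hit end-of-file).

```python
import os
import tempfile


def count_reads(path: str, chunk_size: int) -> int:
    """Hypothetical helper: read a whole file with os.read() and count the calls made."""
    calls = 0
    fd = os.open(path, os.O_RDONLY)
    try:
        while True:
            calls += 1
            if not os.read(fd, chunk_size):  # an empty result means end of file
                break
    finally:
        os.close(fd)
    return calls


# Create a 64 KiB scratch file to read back
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"x" * 65536)
    path = tmp.name

try:
    for size in (16, 65536):
        print(f"chunk={size:>6} bytes -> {count_reads(path, size)} read() calls")
finally:
    os.remove(path)
```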

Conclusion

System programming is the bedrock upon which all other software is built. From the operating system kernel that boots your machine to the container runtime that powers your cloud-native applications, its principles are everywhere. By learning to manage processes, handle memory, facilitate inter-process communication, and manipulate the file system, you gain a fundamental understanding of how computers actually work. This knowledge empowers you to write more efficient, reliable, and secure code, and to diagnose and solve complex performance problems that are opaque to those who only operate at a higher level of abstraction.

The journey into system programming is a challenging but incredibly rewarding one. The next time you use a terminal, compile code with GCC, or deploy a Docker container, remember the intricate dance of system calls and processes happening just beneath the surface. Start by exploring the man pages for system calls like fork() and pipe(), experiment with the code examples, and begin building your own small system utilities. By mastering the machine, you will become a more capable and versatile engineer, ready to build the next generation of robust software systems.