The landscape of Linux Distributions is vast, complex, and fundamentally essential to the modern internet infrastructure. From the lightweight containers powering microservices in the cloud to the robust enterprise servers handling global financial transactions, Linux is the bedrock of modern computing. However, “Linux” itself is merely a kernel—the core interface between hardware and software. What system administrators and developers interact with daily is a distribution (distro): a cohesive collection of the Linux Kernel, system utilities, package managers, and configuration defaults.

Understanding the differences between Debian, Red Hat Enterprise Linux, Arch Linux, and others is not just a matter of preference; it is a critical skill for effective system administration and DevOps work. As the ecosystem has matured, the major distributions have converged on a common initialization system, systemd, which has transformed how services are managed across Ubuntu, CentOS, and Fedora. This standardization facilitates better automation, logging, and dependency management.

This guide explores the architecture of modern Linux systems, focusing on service management, automation with Python and Bash scripting, and security best practices. We will look at how these distributions operate, how to manage them at scale, and how to use tools like Docker and Ansible to maintain infrastructure integrity.

Section 1: Core Architecture and Service Management

At the heart of every distribution lies the boot process and service management. Historically, Linux used SysVinit, which started services one after another from plain shell scripts. Modern distributions have largely adopted systemd instead. This shift was driven by the need for parallel boot, on-demand socket activation, and robust dependency handling. systemd is not just an init system; it is a suite of tools that also handles logging (journald), time synchronization (timesyncd), and network configuration (networkd).

Understanding Units and Services

In a modern Linux server environment, whether it is running an Apache web server or a PostgreSQL database, the application is managed as a systemd “unit.” Understanding how to write and modify unit files is essential for Linux administration. Unlike the “fire and forget” nature of the old scripts, systemd monitors the process, can restart it upon failure, and manages its resources using cgroups (control groups).
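Because systemd tracks each unit's state, that state can also be read from a script. The sketch below is a minimal illustration, not a definitive tool: it parses the `Key=Value` lines printed by `systemctl show`, and the unit name `myapp.service` is a hypothetical placeholder. The parsing function is pure, so it can be tried on any machine; `unit_state` only works on a host running systemd.

```python
import subprocess


def parse_show_output(text: str) -> dict[str, str]:
    """Turn the Key=Value lines printed by `systemctl show` into a dict."""
    props = {}
    for line in text.splitlines():
        key, sep, value = line.partition("=")
        if sep:  # skip blank or malformed lines
            props[key.strip()] = value.strip()
    return props


def unit_state(unit: str) -> dict[str, str]:
    """Query a few properties of a unit (requires a host running systemd)."""
    result = subprocess.run(
        ["systemctl", "show", unit,
         "--property=ActiveState,SubState,NRestarts,MainPID"],
        capture_output=True, text=True, check=True,
    )
    return parse_show_output(result.stdout)


# Illustrative sample of what `systemctl show` might print for a healthy unit.
sample = "ActiveState=active\nSubState=running\nNRestarts=2\nMainPID=1234\n"
print(parse_show_output(sample))
# On a systemd host: unit_state("myapp.service")  # hypothetical unit name
```

A rising `NRestarts` value is a quick signal that a service is crash-looping even though it currently reports as active.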

Below is a practical example of a custom systemd service file for a Python application. It ensures the application starts automatically on boot and restarts if it crashes, a critical requirement for production environments.

```ini
[Unit]
Description=High Performance Python Web Service
After=network.target postgresql.service
Wants=postgresql.service

[Service]
Type=simple
# www-data exists on Debian/Ubuntu; use a dedicated service account elsewhere
User=www-data
Group=www-data
# Path to the virtual environment's Python executable and the application
ExecStart=/var/www/myapp/venv/bin/python /var/www/myapp/server.py
# Restart the service automatically if it fails
Restart=on-failure
# Wait 5 seconds before restarting
RestartSec=5s
# Send stdout and stderr to journald
StandardOutput=journal
StandardError=journal
# Security hardening: mount /usr, /boot, /efi, and /etc read-only for this service
ProtectSystem=full
# Environment variables
Environment=FLASK_ENV=production
Environment=PORT=8080

[Install]
WantedBy=multi-user.target
```

To implement this, an administrator would place the file in /etc/systemd/system/myapp.service, run systemctl daemon-reload, and then enable it with systemctl enable --now myapp.service. This highlights the power of modern distributions: the same systemctl commands manage the service lifecycle whether you are following an Ubuntu tutorial or the Red Hat documentation.
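The deployment steps for a unit file can also be captured in a small script, which is handy when the same service is rolled out to several hosts. This is a sketch under assumptions: the function name `deployment_commands` is made up for illustration, and it only builds and prints the `systemctl` invocations rather than running them.

```python
import shlex


def deployment_commands(unit_name: str, start_now: bool = True) -> list[list[str]]:
    """Return the systemctl commands to load, enable, and inspect a new unit."""
    enable = ["systemctl", "enable", unit_name]
    if start_now:
        # --now starts the unit in addition to enabling it at boot
        enable.insert(2, "--now")
    return [
        ["systemctl", "daemon-reload"],  # re-read unit files from disk
        enable,
        ["systemctl", "status", "--no-pager", unit_name],
    ]


# Dry run: print the commands instead of executing them.
for cmd in deployment_commands("myapp.service"):
    print(shlex.join(cmd))
```

Returning argument lists rather than shell strings means the commands can later be passed straight to `subprocess.run` without shell quoting concerns.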

Section 2: Automation and Package Management

While the kernel and init system provide the foundation, the package manager defines the user experience and the “personality” of the distribution. Debian and its derivatives use APT (Advanced Package Tool) with .deb packages and are known for stability. Red Hat Enterprise Linux, CentOS, and Fedora use RPM packages with DNF (the successor to YUM), focusing on enterprise standards. Arch Linux uses Pacman and follows a rolling release model that provides the latest software.
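To make the comparison concrete, here is a minimal sketch of how the same "install a package" intent maps onto the three package managers. The table and the function name `build_install_command` are illustrative assumptions; the flags shown are the standard non-interactive ones for each tool.

```python
# Non-interactive install command prefix for each package manager family.
INSTALL_COMMANDS = {
    "apt": ["apt-get", "install", "-y"],
    "dnf": ["dnf", "install", "-y"],
    "pacman": ["pacman", "-S", "--noconfirm"],
}


def build_install_command(manager: str, *packages: str) -> list[str]:
    """Build an argument list that installs the given packages."""
    if manager not in INSTALL_COMMANDS:
        raise ValueError(f"Unsupported package manager: {manager}")
    if not packages:
        raise ValueError("At least one package name is required")
    return INSTALL_COMMANDS[manager] + list(packages)


print(build_install_command("apt", "nginx"))
print(build_install_command("pacman", "nginx", "git"))
```

Package names themselves can differ between distributions, so a real abstraction layer would also need a per-distribution name mapping.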

Scripting Across Distributions

For a DevOps engineer, managing these differences manually is inefficient. This is where Python and shell scripting become indispensable. Instead of memorizing flags for every package manager, you can write abstraction scripts to handle system updates, backup routines, or monitoring tasks.

The following Python script demonstrates how to detect the underlying distribution and apply updates programmatically. It uses the subprocess module to run the package manager commands and the platform module to identify the OS.

```python
import logging
import os
import platform
import subprocess
import sys


def get_distro_manager():
    """Identify the Linux distribution and return (refresh, upgrade) commands."""
    try:
        # platform.freedesktop_os_release() requires Python 3.10+
        distro_id = platform.freedesktop_os_release().get('ID')
    except (OSError, AttributeError):
        # Older Python versions or systems without an os-release file
        distro_id = "unknown"

    if distro_id in ['debian', 'ubuntu', 'linuxmint']:
        return ['apt-get', 'update'], ['apt-get', 'upgrade', '-y']
    elif distro_id in ['fedora', 'centos', 'rhel', 'almalinux']:
        # 'dnf check-update' exits with 100 when updates are pending, which
        # check=True would treat as a failure, so refresh with makecache instead
        return ['dnf', 'makecache'], ['dnf', 'upgrade', '-y']
    elif distro_id in ['arch', 'manjaro']:
        return ['pacman', '-Sy'], ['pacman', '-Syu', '--noconfirm']
    else:
        return None, None


def run_update():
    update_cmd, upgrade_cmd = get_distro_manager()
    if not update_cmd:
        logging.error("Unsupported Linux distribution")
        sys.exit(1)

    logging.info(f"Starting update process for {platform.system()}...")
    try:
        # Refresh repositories
        subprocess.run(update_cmd, check=True, stdout=subprocess.PIPE)
        logging.info("Repositories refreshed.")

        # Perform upgrade
        result = subprocess.run(upgrade_cmd, check=True, capture_output=True, text=True)
        logging.info(f"System upgraded successfully: {result.stdout[:100]}...")
        print("System updated successfully.")
    except subprocess.CalledProcessError as e:
        logging.error(f"Update failed: {e}")
        print(f"Error during update: {e}")
        sys.exit(1)


if __name__ == "__main__":
    # Ensure the script is run as root before touching /var/log or packages
    if os.geteuid() != 0:
        print("This script requires root privileges.")
        sys.exit(1)

    # Configure logging for system monitoring
    logging.basicConfig(
        filename='/var/log/sys_update.log',
        level=logging.INFO,
        format='%(asctime)s - %(levelname)s - %(message)s'
    )
    run_update()
```

This script exemplifies Python scripting for infrastructure management. It abstracts the differences between the underlying package managers, allowing an administrator to run a single script on any distribution it recognizes, whether that is a cloud instance or a local Ubuntu VM.
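The update script reports the distribution as "unknown" when `platform.freedesktop_os_release()` is unavailable, and it only matches exact IDs. One possible way to cover both gaps, sketched here under assumptions, is to parse the os-release text directly and also consult `ID_LIKE`, which derivative distributions use to name their parents. The function names and the sample content are illustrative.

```python
def parse_os_release(text: str) -> dict[str, str]:
    """Parse os-release style KEY=value lines, stripping optional quotes."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        key, sep, value = line.partition("=")
        if sep:
            fields[key] = value.strip().strip("\"'")
    return fields


def candidate_ids(fields: dict[str, str]) -> list[str]:
    """Return the distribution ID followed by any ID_LIKE parents."""
    ids = [fields.get("ID", "unknown")]
    ids.extend(fields.get("ID_LIKE", "").split())
    return ids


# Illustrative content in the style of a RHEL derivative's os-release file.
sample = 'NAME="Rocky Linux"\nID="rocky"\nID_LIKE="rhel centos fedora"\n'
print(candidate_ids(parse_os_release(sample)))
# On a real host, pass the contents of /etc/os-release instead of the sample.
```

Checking each candidate in order lets a derivative fall back to its parent's package manager without being listed explicitly.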

Section 3: Advanced System Administration and Security

Beyond installation and updates, managing a Linux server involves rigorous security protocols and resource management. Key areas include firewall configuration (using iptables or firewalld), SSH hardening, and managing users and file permissions.

Security and Permissions

Security on Linux is multi-layered. At the filesystem level, you have standard permissions (read, write, execute). However, enterprise distributions like RHEL enforce Mandatory Access Control (MAC) via SELinux. This restricts what processes can do, even if they are running as root. For example, an Apache web server might be prevented from writing to the /home directory by SELinux policies, adding a critical layer of defense against compromised services.
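The standard permission bits mentioned above can be inspected from Python with the `os` and `stat` modules. The following is a small sketch, with `describe_permissions` as an illustrative helper name; it covers only the classic read/write/execute bits and says nothing about SELinux contexts or ACLs.

```python
import os
import stat
import tempfile


def describe_permissions(path: str) -> dict:
    """Summarize the classic Unix permission bits of a file."""
    mode = os.stat(path).st_mode
    return {
        "mode": stat.filemode(mode),               # ls-style string
        "octal": oct(stat.S_IMODE(mode)),          # permission bits only
        "world_writable": bool(mode & stat.S_IWOTH),
    }


# Demonstrate on a throwaway file so nothing on the system is changed.
with tempfile.NamedTemporaryFile() as tmp:
    os.chmod(tmp.name, 0o640)
    print(describe_permissions(tmp.name))
```

World-writable files outside of directories like /tmp are a common audit finding, which is why the helper reports that bit separately.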

Furthermore, disk management on Linux often involves LVM (Logical Volume Manager). LVM allows flexible resizing of logical volumes and supports snapshots, which are crucial for backup strategies. Disk and volume usage can be checked with df and lvs, while CPU and memory are monitored with the top command or the more visual htop.
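Monitoring tools such as top take their memory figures from /proc/meminfo, and the same data is easy to read in a script. The sketch below parses text in that format and computes a used-memory percentage; the function names and sample numbers are made up for illustration, and it assumes the `MemAvailable` field is present.

```python
def parse_meminfo(text: str) -> dict[str, int]:
    """Return /proc/meminfo style values (kB) keyed by field name."""
    values = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        parts = rest.split()
        if sep and parts and parts[0].isdigit():
            values[name.strip()] = int(parts[0])
    return values


def memory_used_percent(info: dict[str, int]) -> float:
    """Percentage of memory not available for new workloads."""
    total = info["MemTotal"]
    available = info["MemAvailable"]
    return round(100 * (total - available) / total, 1)


# Illustrative sample; on a real host, read the text from /proc/meminfo.
sample = "MemTotal:        8000000 kB\nMemFree:         1000000 kB\nMemAvailable:    2000000 kB\n"
print(memory_used_percent(parse_meminfo(sample)))
```

Using `MemAvailable` rather than `MemFree` avoids counting reclaimable page cache as memory pressure.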

Below is a Bash example that performs a basic security audit. It checks for non-root users with UID 0 (root privileges), records the firewall status, flags filesystems above a disk usage threshold, and lists recent failed SSH logins, writing everything to a log file.

```bash
#!/bin/bash
# Linux Security Audit and Monitoring Script
# Requires root privileges

LOG_FILE="/var/log/security_audit.log"
HOST=$(hostname)

echo "Starting Security Audit for $HOST at $(date)" > "$LOG_FILE"

# 1. Check for non-root users with UID 0 (Security Risk)
echo "[*] Checking for unauthorized root users..." >> "$LOG_FILE"
# Compare the UID field exactly instead of pattern-matching whole lines
uid0_users=$(awk -F: '$3 == 0 && $1 != "root" { print $1 }' /etc/passwd)
if [ -n "$uid0_users" ]; then
    echo "WARNING: Non-root users with UID 0 found: $uid0_users" | tee -a "$LOG_FILE"
else
    echo "OK: No unauthorized root users found." >> "$LOG_FILE"
fi

# 2. Check Firewall Status (UFW or Firewalld)
echo "[*] Checking Firewall Status..." >> "$LOG_FILE"
if command -v ufw >/dev/null; then
    # Logs "Status: active" or "Status: inactive"
    ufw status | grep "Status:" >> "$LOG_FILE"
elif command -v firewall-cmd >/dev/null; then
    firewall-cmd --state >> "$LOG_FILE" 2>&1
else
    echo "WARNING: No known firewall manager found." >> "$LOG_FILE"
fi

# 3. Disk usage monitor: alert if usage is at or above 85%
echo "[*] Checking Disk Usage..." >> "$LOG_FILE"
df -P | awk 'NR > 1 && $0 !~ /tmpfs|cdrom/ { print $5, $1 }' | while read -r usep partition; do
    usep=${usep%\%}                         # strip the trailing percent sign
    [[ "$usep" =~ ^[0-9]+$ ]] || continue   # skip entries without a numeric value
    if [ "$usep" -ge 85 ]; then
        echo "CRITICAL: Running out of space \"$partition ($usep%)\" on $HOST" >> "$LOG_FILE"
        # In a real scenario, you might pipe this to the mail command
    fi
done

# 4. Check Failed SSH Logins
echo "[*] Recent Failed SSH Attempts..." >> "$LOG_FILE"
if [ -f /var/log/auth.log ]; then
    grep "Failed password" /var/log/auth.log | tail -n 5 >> "$LOG_FILE"
elif [ -f /var/log/secure ]; then
    grep "Failed password" /var/log/secure | tail -n 5 >> "$LOG_FILE"
fi

echo "Audit Complete." >> "$LOG_FILE"
```

Section 4: DevOps, Containers, and Modern Workflows

The role of the Linux distribution has evolved significantly with the rise of Docker and Kubernetes. In a containerized world, the host distribution (often a minimal, container-focused OS) matters less than the kernel version and the container runtime. However, understanding how to interact with these containers using Python tooling is vital.

Automating Container Management

Tools like Ansible allow for agentless configuration management, but sometimes direct interaction with the Docker daemon via Python is necessary for custom workflows. Development environments often require spinning up databases like MySQL or web servers like Nginx rapidly.
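One design question for any custom workflow of this kind is which containers actually deserve a restart. The sketch below expresses one possible policy as a pure function over the `State` object that the Docker API returns when a container is inspected (`Status`, `ExitCode`, and `Health` are fields of that object). The policy itself, including treating exit code 0 as a normal finish, is an assumption made for illustration.

```python
def should_restart(state: dict) -> bool:
    """Decide whether a container's inspect State suggests a restart."""
    status = state.get("Status")
    if status == "dead":
        return True
    if status == "exited":
        # Policy assumption: exit code 0 means the container finished normally
        return state.get("ExitCode", 0) != 0
    # Health is only present when the image or run options define a healthcheck
    health = state.get("Health") or {}
    return health.get("Status") == "unhealthy"


print(should_restart({"Status": "exited", "ExitCode": 137}))
print(should_restart({"Status": "running", "Health": {"Status": "healthy"}}))
```

Keeping the decision separate from the Docker client makes the policy easy to test without a running daemon.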

Here is a Python example using the Docker SDK. This script acts as a monitoring agent that checks for stopped or unhealthy containers and attempts to restart them, a common pattern in self-healing infrastructure.

```python
import logging
import time

import docker

# Setup logging
logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("DockerMonitor")


def restart_container(container, reason):
    """Restart one container and log the outcome."""
    logger.warning(f"Container {container.name} is {reason}. Attempting restart...")
    try:
        container.restart()
        logger.info(f"Container {container.name} restarted successfully.")
    except Exception as e:
        logger.error(f"Failed to restart {container.name}: {e}")


def monitor_containers():
    """
    Connects to the local Docker socket.
    Restarts containers in the 'exited' or 'dead' state, and running
    containers whose health check reports 'unhealthy'.
    Note: this also restarts containers that were stopped on purpose.
    """
    try:
        # Connect to the Docker daemon using environment settings
        client = docker.from_env()
        # List all containers (running and stopped)
        containers = client.containers.list(all=True)
    except docker.errors.DockerException as e:
        logger.critical(f"Could not connect to Docker daemon: {e}")
        logger.info("Ensure the script has permissions to access /var/run/docker.sock")
        return

    for container in containers:
        logger.info(f"Checking container: {container.name} - Status: {container.status}")
        state = container.attrs['State']
        if container.status in ['exited', 'dead']:
            restart_container(container, "down")
        # Check health status if available (Docker Healthcheck)
        elif (state.get('Health') or {}).get('Status') == 'unhealthy':
            restart_container(container, "marked UNHEALTHY")


if __name__ == "__main__":
    # Run as a continuous monitoring loop
    while True:
        monitor_containers()
        time.sleep(60)  # Check every minute
```

Best Practices and Optimization

To maintain a healthy Linux environment, whether it runs on Azure, AWS, or a physical server, adhere to these best practices:

- Minimize the Attack Surface: Only install necessary packages. If you are running a web server, you likely do not need X11 or GUI tools. Use minimal installs, or Alpine Linux for containers.

- Regular Updates: Use automation such as the update script above to keep the kernel and packages patched against vulnerabilities.

- Use SSH Keys: Disable password authentication for SSH. Use SSH keys and tools like Fail2Ban to prevent brute-force attacks.

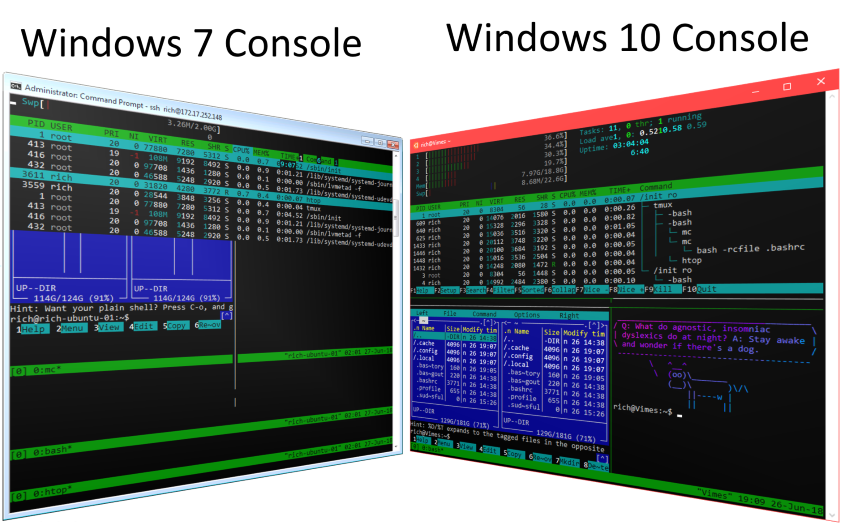

- Master the Command Line: Proficiency with Vim, tmux, or GNU Screen allows you to work efficiently on remote servers over slow connections.

- Backup Configuration: Use version control (Git) for your /etc configuration files, or use Ansible playbooks to define your infrastructure as code.
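The SSH recommendation in the list above can be checked automatically. The following sketch reads sshd_config style text and reports the effective value of a keyword, relying on the documented rule that the first occurrence of a keyword wins. It is a simplification under stated assumptions: it expects whitespace-separated `Keyword value` lines, stops at the first `Match` block, and does not follow `Include` directives. The function name is illustrative.

```python
def effective_sshd_option(config_text: str, keyword: str, default: str) -> str:
    """Return the first global value set for keyword, or the default."""
    for line in config_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        parts = line.split(None, 1)
        name = parts[0].lower()
        if name == "match":
            break  # conditional blocks are not evaluated in this sketch
        if name == keyword.lower() and len(parts) == 2:
            return parts[1].split()[0]
    return default


# Illustrative config text; on a real host, read /etc/ssh/sshd_config.
sample = "#PasswordAuthentication yes\nPasswordAuthentication no\nPubkeyAuthentication yes\n"
print(effective_sshd_option(sample, "PasswordAuthentication", default="yes"))
```

Passing `default="yes"` reflects that OpenSSH allows password authentication unless it is explicitly disabled, so a missing line should be treated as a finding.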

Conclusion

The world of Linux distributions has matured into a sophisticated ecosystem where the boundaries between the OS, the application, and the cloud are increasingly blurred. From the foundational elements of the Linux kernel and systemd to high-level Python automation and Kubernetes orchestration, the modern administrator must be a hybrid professional: part operator, part developer.

By mastering the core concepts of service management, embracing automation through scripting, and adhering to strict security protocols, you can harness the full power of Linux. Whether you are compiling C code with GCC or managing a fleet of microservices, the principles outlined in this guide provide a roadmap for building robust, scalable, and secure systems. Continue exploring, experimenting with different distributions, and automating your workflows to stay ahead in the rapidly evolving tech landscape.