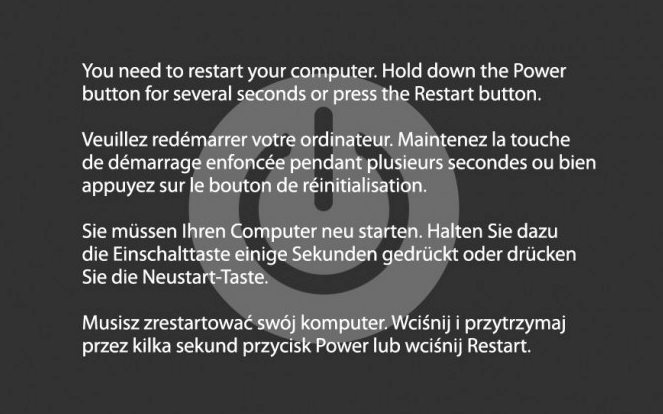

I spent my New Year’s Eve morning staring at a kernel panic. Not exactly how I planned to ring in 2026, but here we are.

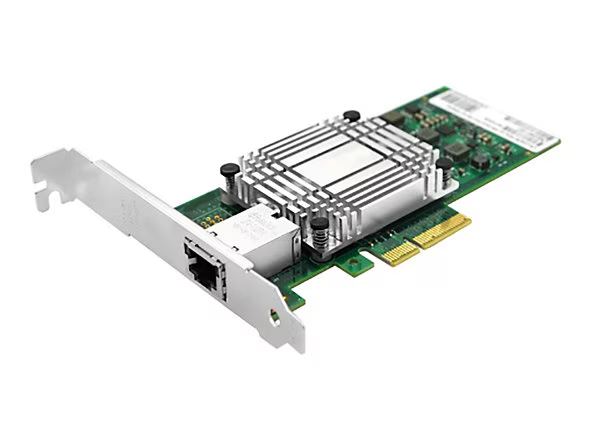

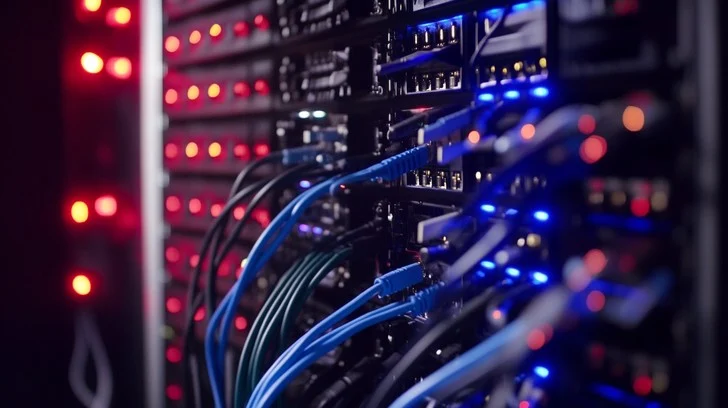

If you work with high-performance networking on Linux, you know the drill. You want 100Gbps or 200Gbps throughput. The CPU can’t handle that packet rate if it has to touch every single SKB (socket buffer). So, you turn to hardware offloading. You tell the NIC, “Hey, you handle these flows, don’t bother the OS unless it’s important.”
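To put a number on “can’t handle that packet rate,” here is the worst-case arithmetic as a few lines of C. It assumes minimum-size 64-byte Ethernet frames plus the standard 20 bytes of per-frame wire overhead (preamble, start delimiter, inter-frame gap). Real traffic mixes are usually friendlier than this, so treat it as a ceiling on the packet rate, not a typical load.

```c
#include <assert.h>

/* Worst-case Ethernet line-rate budget: minimum 64-byte frames plus 20 bytes
 * of per-frame wire overhead (7 B preamble, 1 B start delimiter, 12 B gap). */
#define MIN_FRAME_BYTES     64.0
#define WIRE_OVERHEAD_BYTES 20.0

/* Packets per second a link of the given speed carries at minimum frame size. */
static double line_rate_pps(double gbps)
{
    assert(gbps > 0.0);
    double bits_per_packet = (MIN_FRAME_BYTES + WIRE_OVERHEAD_BYTES) * 8.0; /* 672 bits */
    return gbps * 1e9 / bits_per_packet;
}

/* Time between packets: how long one core gets per packet if it has to
 * touch every single one. */
static double ns_per_packet(double gbps)
{
    return 1e9 / line_rate_pps(gbps);
}
```

At 100 Gbps that works out to roughly 148.8 million packets per second, or about 6.72 ns per packet (around 20 cycles on a 3 GHz core). At 200 Gbps the budget halves to about 3.36 ns.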

Sounds great. Marketing calls it “seamless acceleration.”

I call it a race condition minefield.

The “Switchdev” Promise

The modern Linux kernel handles smart NICs using the switchdev model. It’s actually a brilliant design, conceptually. It treats the switch ASIC on your network card just like any other Linux bridge. You use standard tools like iproute2 and tc (Traffic Control) to configure rules, and the driver translates those software rules into hardware tables.

But here’s the thing. The hardware has different “modes.”

Most enterprise NICs (like the Mellanox ConnectX series, which I use heavily) operate in either “Legacy” (NIC) mode or “Switchdev” mode, and you switch between them with devlink. In NIC mode, it’s just a dumb pipe. In Switchdev mode, the internal “eswitch” (embedded switch) is exposed, and you can route traffic between virtual functions (VFs) purely in hardware.
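Conceptually, the mode is a gate on which operations are legal at all. Here is a toy model of that rule. The enums and the op_allowed helper are hypothetical names of my own; the kernel’s user-facing equivalents are the DEVLINK_ESWITCH_MODE_LEGACY and DEVLINK_ESWITCH_MODE_SWITCHDEV values in the devlink uapi header.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical mirror of the two eswitch modes. */
enum eswitch_mode {
    ESW_MODE_LEGACY,     /* "NIC mode": the card is a plain pipe to the host */
    ESW_MODE_SWITCHDEV,  /* embedded switch exposed; VF-to-VF forwarding in hw */
};

/* Hypothetical operations a driver might be asked to perform. */
enum esw_op {
    OP_SEND_RECV,        /* ordinary host traffic: fine in either mode */
    OP_OFFLOAD_VF_ROUTE, /* program an eswitch rule between VFs */
};

/* Which operations make sense in which mode. */
static bool op_allowed(enum eswitch_mode mode, enum esw_op op)
{
    switch (op) {
    case OP_SEND_RECV:
        return true;
    case OP_OFFLOAD_VF_ROUTE:
        return mode == ESW_MODE_SWITCHDEV;
    }
    assert(!"unknown op");
    return false;
}
```

The interesting bugs live in the transition: the moment where the mode has changed but some code path is still acting on the answer it got from the old mode.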

The crash I was debugging? It happened right in that grey area where the driver thinks it’s doing one thing, but the hardware context is doing another.

Object Lifecycles Are Hard

The specific issue that ruined my morning involved the Traffic Control (TC) subsystem. When you offload a TC rule, the driver has to map the kernel’s flow representation to a hardware object ID. To do this, it maintains a mapping table.
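A stripped-down picture of such a mapping table, as plain userspace C: the kernel hands the driver an opaque cookie per rule (flower offload requests do carry one), and the driver hands back a small integer the firmware understands. The struct names, the fixed capacity, and the linear search are simplifying assumptions; a real driver would use a hash table or xarray and its own ID allocator.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

#define MAP_CAPACITY 64  /* hypothetical fixed size to keep the sketch short */

struct flow_map_entry {
    unsigned long cookie;  /* kernel-side flow identifier */
    uint32_t hw_id;        /* firmware-side object ID */
    int in_use;
};

struct flow_map {
    struct flow_map_entry entries[MAP_CAPACITY];
    uint32_t next_hw_id;
};

static struct flow_map *flow_map_create(void)
{
    struct flow_map *m = calloc(1, sizeof(*m));
    if (m)
        m->next_hw_id = 1;  /* reserve 0 to mean "no mapping" */
    return m;
}

/* Returns the new hardware ID, or 0 if the table is full. */
static uint32_t flow_map_add(struct flow_map *m, unsigned long cookie)
{
    assert(m != NULL);
    for (size_t i = 0; i < MAP_CAPACITY; i++) {
        if (!m->entries[i].in_use) {
            m->entries[i].cookie = cookie;
            m->entries[i].hw_id = m->next_hw_id++;
            m->entries[i].in_use = 1;
            return m->entries[i].hw_id;
        }
    }
    return 0;
}

/* Returns the hardware ID for a cookie, or 0 if it is not mapped. */
static uint32_t flow_map_lookup(const struct flow_map *m, unsigned long cookie)
{
    assert(m != NULL);
    for (size_t i = 0; i < MAP_CAPACITY; i++)
        if (m->entries[i].in_use && m->entries[i].cookie == cookie)
            return m->entries[i].hw_id;
    return 0;
}
```

Every one of those lookups assumes the struct flow_map pointer is still valid, which is exactly the assumption a mode change breaks.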

Simple enough. Until you change modes.

When you switch a card from Switchdev mode back to NIC mode, a lot of internal structures get torn down. The eswitch mapping tables are freed. Pointers are nulled. Or at least, they should be.

But what happens if a delayed work queue or a lingering TC rule tries to access that mapping table after the mode switch has started but before the driver has fully cleaned up? Or worse, what if the code path checks for the wrong mode entirely?

You get a use-after-free. Or a null pointer dereference. The kernel oopses, your server reboots, and your boss asks why the “high availability” cluster just went dark.

Here is a simplified view of what the driver logic often looks like when it goes wrong. I’ve stripped this down to the logic flow, so don’t copy-paste this into your module unless you like kernel panics:

```c
static int bad_driver_logic(struct my_priv *priv, u32 flow_id)
{
	struct eswitch_mapping *map;

	// MISTAKE: We assume that if the mapping pointer exists,
	// we are in the right mode to use it.
	// ALSO: this check runs before state_lock is taken, so its
	// answer can be stale by the time we use the pointer.
	if (!priv->eswitch->mapping_table) {
		return -EINVAL;
	}

	// Meanwhile, another thread just changed the devlink mode to LEGACY
	// and is about to free mapping_table...

	mutex_lock(&priv->state_lock);

	// If we don't check the mode HERE, we might access a table
	// that is logically invalid for the current NIC mode.
	map = lookup_flow(priv->eswitch->mapping_table, flow_id);

	// ... boom.

	mutex_unlock(&priv->state_lock);

	return 0;
}
```

The fix usually involves being paranoid. You can’t just check that a pointer is non-NULL. You have to verify the context. Is the NIC actually in the mode that supports this map? If I’m in NIC mode, I shouldn’t be touching eswitch maps at all, even if the memory hasn’t been freed yet.

The “Flower” Classifier

We mostly interact with this via the flower classifier in TC. It’s the standard way to do flow-based offloading now. You match on the 5-tuple (source and destination IPs, source and destination ports, protocol) and perform an action (drop, redirect, mirror).
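Stripped of the plumbing, a flower rule is “key plus mask, then action.” This toy classifier shows that shape. The struct layout, the names, and the first-match-wins ordering (a loose stand-in for tc priorities) are assumptions for illustration, not the kernel’s flow dissector.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

enum flow_action { ACT_PASS, ACT_DROP, ACT_REDIRECT, ACT_MIRROR };

struct five_tuple {
    uint32_t src_ip, dst_ip;     /* IPv4, host byte order for simplicity */
    uint16_t src_port, dst_port;
    uint8_t  ip_proto;           /* e.g. 6 = TCP, 17 = UDP */
};

struct flower_rule {
    struct five_tuple key;
    struct five_tuple mask;      /* 0 bits mean "don't care" */
    enum flow_action action;
};

/* A field matches when it agrees with the key on every masked bit. */
static bool rule_matches(const struct flower_rule *r, const struct five_tuple *p)
{
    return ((p->src_ip   ^ r->key.src_ip)   & r->mask.src_ip)   == 0 &&
           ((p->dst_ip   ^ r->key.dst_ip)   & r->mask.dst_ip)   == 0 &&
           ((p->src_port ^ r->key.src_port) & r->mask.src_port) == 0 &&
           ((p->dst_port ^ r->key.dst_port) & r->mask.dst_port) == 0 &&
           ((p->ip_proto ^ r->key.ip_proto) & r->mask.ip_proto) == 0;
}

/* First matching rule wins; packets that match nothing pass. */
static enum flow_action classify(const struct flower_rule *rules, int n,
                                 const struct five_tuple *pkt)
{
    assert(n >= 0);
    for (int i = 0; i < n; i++)
        if (rule_matches(&rules[i], pkt))
            return rules[i].action;
    return ACT_PASS;
}
```

A rule that masks only src_ip (mask 0xFFFFFFFF) matches every packet from that one address regardless of ports or protocol. In hardware, each such rule is another entry the driver has to create, track, and eventually tear down.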

I love flower because it’s readable. But under the hood, it generates a massive amount of state management for the driver. Every rule you add is a resource the hardware has to track.

When you run something like this:

```bash
tc filter add dev eth0 protocol ip parent ffff: \
    flower skip_sw \
    src_ip 192.168.1.5 \
    action drop
```

The skip_sw flag is the gun you point at your own foot. It tells the kernel: “Do not process this in software. If the hardware can’t handle it, fail.”

If the driver has a bug in how it maps that rule to the firmware, specifically during a teardown event, you won’t just get an error. You might corrupt kernel memory. I recently saw a patch that fixed exactly this kind of logic error: the driver was trying to use an eswitch mapping while the device was clearly in NIC mode. It’s a classic state desynchronization.

Debugging This Mess

So how do you actually debug this without rebooting your test box forty times a day?

I’ve stopped relying solely on dmesg. By the time the oops hits the logs, the race that caused it is already microseconds in the past.

bpftrace has saved my sanity more times than I can count this year. Instead of recompiling the kernel with printk debugging (which changes the timing and hides the race condition—Heisenbugs are real), I attach probes to the driver functions.

Here is a quick script I used to watch the mode changes on the NIC:

```
// watch_mode.bt
kprobe:mlx5_eswitch_enable
{
    printf("Eswitch enabling: %s PID: %d\n", comm, pid);
}

kprobe:mlx5_eswitch_disable
{
    printf("Eswitch disabling: %s PID: %d\n", comm, pid);
}

// Watch for the specific mapping function
kprobe:mlx5e_tc_add_flow
{
    printf("Adding flow in process: %s\n", comm);
}
```

Running this revealed the sequence. I could see the disable call happening, followed immediately by a tc_add_flow from a script I had forgotten was running in the background. The driver wasn’t locking the state correctly between those two events.

Why We Put Up With It

You might ask, “Why bother? Just buy more CPU cores.”

We can’t. With PCIe 5.0 and 6.0 pushing bandwidths that make DDR5 sweat, the CPU is the bottleneck. We need the NIC to handle the churn. We need the hardware to drop DDoS packets before they hit the PCIe bus. We need the switch ASIC to route VM traffic without a context switch.

The complexity of drivers like mlx5, i40e, or the newer exotic smartNIC drivers is the price of admission for modern datacenter performance. They are essentially operating systems running inside your operating system.

My advice? If you are writing automation that interacts with NIC offloads:

- Serialize your operations. Don’t try to change devlink modes while spamming tc rules.
- Trust nothing. Just because a command returned “Success” doesn’t mean the hardware is stable yet. Give it a beat.
- Update your kernel. Seriously. The stable trees (6.1, 6.6, etc.) get backports for these race conditions constantly. The fix for the mapping issue I mentioned landed in the stable tree pretty quickly after it was found.
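For the “give it a beat” part, a bounded poll with backoff tends to hold up better than a fixed sleep. A minimal sketch: is_ready is a hypothetical caller-supplied check (for example, “does devlink report the mode I just requested?”), and the attempt count and delays are arbitrary choices, not tuned values.

```c
#define _POSIX_C_SOURCE 200809L  /* for nanosleep */
#include <assert.h>
#include <stdbool.h>
#include <time.h>

typedef bool (*ready_fn)(void *ctx);

/* Poll is_ready up to max_attempts times, doubling the delay after each miss,
 * then check one final time after the last sleep. */
static bool wait_until_ready(ready_fn is_ready, void *ctx,
                             int max_attempts, long first_delay_ms)
{
    long delay_ms = first_delay_ms;

    assert(is_ready != NULL && max_attempts >= 0 && first_delay_ms >= 0);
    for (int attempt = 0; attempt < max_attempts; attempt++) {
        if (is_ready(ctx))
            return true;
        struct timespec ts = { .tv_sec = delay_ms / 1000,
                               .tv_nsec = (delay_ms % 1000) * 1000000L };
        nanosleep(&ts, NULL);
        delay_ms *= 2;            /* exponential backoff */
    }
    return is_ready(ctx);
}

/* Demo predicate: reports ready once it has been called *ctx times. */
static bool ready_after_n_calls(void *ctx)
{
    int *remaining = ctx;
    return --(*remaining) <= 0;
}
```

The hard upper bound matters as much as the backoff: automation that waits forever on a wedged NIC is just another way to take the cluster down.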

Anyway, I think I’ve fixed the race in my module. Or at least, moved it to a window so small I won’t see it until next New Year’s Eve. Time to close the laptop.